I understand convolution filters when applied to an image (e.g. a 224x224 image with 3 in-channels transformed by 56 conv filters of size 5x5 into a 224x224 image with 56 out-channels). The key is that there are 56 different filters, each with 5x5x3 weights, that together produce a 224x224x56 output image (the last dimension is the number of output channels).
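To make the image case concrete, here is a small NumPy sketch (not PyTorch's actual implementation) of a stride-1 convolution, zero-padded so the spatial size stays 224x224. It stores the 56 filters in PyTorch's `(out_channels, in_channels, kH, kW)` weight layout, so each filter is a 3x5x5 block of weights. The helper name `conv2d_same` and the random data are made up for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_same(image, weight):
    """Stride-1, zero-padded ('same') 2D convolution in PyTorch's weight layout.

    Like PyTorch, this computes a cross-correlation (the kernel is not flipped).
    image:  (in_channels, H, W)               e.g. (3, 224, 224)
    weight: (out_channels, in_channels, k, k) e.g. (56, 3, 5, 5)
    returns (out_channels, H, W)              e.g. (56, 224, 224)
    """
    k = weight.shape[-1]
    pad = k // 2
    padded = np.pad(image, ((0, 0), (pad, pad), (pad, pad)))
    # windows[c, h, w, i, j] == padded[c, h + i, w + j]
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))
    # Each output channel o sums over all input channels c and the k x k window.
    return np.einsum("chwij,ocij->ohw", windows, weight, optimize=True)

rng = np.random.default_rng(0)
image = rng.standard_normal((3, 224, 224))
weight = rng.standard_normal((56, 3, 5, 5))  # 56 filters, each 5x5x3
out = conv2d_same(image, weight)
print(out.shape)    # (56, 224, 224)
print(weight.size)  # 4200 = 56 * 3 * 5 * 5
```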

But I can't seem to understand how a conv1d filter works in seq2seq models on a sequence of characters. One of the models I was looking at (https://arxiv.org/pdf/1712.05884.pdf) has a post-net layer that "is comprised of 512 filters with shape 5×1" and operates on an 80-d spectrogram frame (i.e. 80 different float values per frame), and the result of the filters is a 512-d frame.

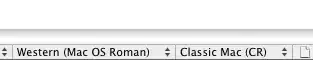
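Assuming the post-net follows PyTorch's `Conv1d` convention (input laid out as `(channels, length)`), the 80 values of a frame are the input channels and the 5-wide kernel slides along time, across neighbouring frames. The NumPy sketch below uses a made-up helper `conv1d_same` and random data in place of a real spectrogram; it only illustrates the shapes: T frames of 80 values in, T frames of 512 values out.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv1d_same(x, weight):
    """Stride-1, zero-padded ('same') 1D convolution in PyTorch's Conv1d layout.

    Cross-correlation, as in PyTorch; bias omitted for brevity.
    x:      (in_channels, T)               e.g. 80 mel bins x T frames
    weight: (out_channels, in_channels, k) e.g. (512, 80, 5)
    returns (out_channels, T)              e.g. 512 values per frame
    """
    k = weight.shape[-1]
    pad = k // 2  # k=5 -> padding=2, so T is unchanged
    padded = np.pad(x, ((0, 0), (pad, pad)))
    # windows[c, t, j] == padded[c, t + j]: 5 neighbouring frames, all 80 bins
    windows = sliding_window_view(padded, k, axis=1)
    # Output channel o at frame t mixes all 80 input channels over 5 frames.
    return np.einsum("ctj,ocj->ot", windows, weight, optimize=True)

rng = np.random.default_rng(0)
T = 100                                     # number of spectrogram frames (arbitrary)
mel = rng.standard_normal((80, T))          # channels = the 80 values of each frame
weight = rng.standard_normal((512, 80, 5))  # 512 filters, each 80 x 5
out = conv1d_same(mel, weight)
print(out.shape)  # (512, 100): every frame is now 512-d
```

In this sketch each output frame depends on 5 consecutive input frames (all 80 values of each), which is how 512 filters can turn an 80-d frame into a 512-d one.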

I don't understand what `in_channels` and `out_channels` mean in the PyTorch `Conv1d` definition. For images I can easily understand what in-channels/out-channels mean, but for a sequence of frames of 80 float values each I'm at a loss. What do they mean in the context of a seq2seq model like the one above? How do 512 filters of shape 5x1 applied to 80 float values produce 512 float values?

Wouldn't a 5x1 filter operating on 80 float values just produce 80 float values (by taking 5 consecutive values at a time out of those 80)? How many weights in total do these 512 filters have?
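The reading described in this question can be written down directly. The sketch below is hypothetical and is not what PyTorch's `Conv1d(80, 512, 5)` computes: it treats one frame as a single-channel signal of length 80 and slides a 5-weight kernel across it with zero padding. `slide_within_frame` is a made-up name and the data is random.

```python
import numpy as np

def slide_within_frame(frame, kernel):
    """Hypothetical reading: ONE 5-tap kernel sliding across the 80 values
    of a single frame, zero-padded so the output length stays 80.
    Cross-correlation, as in deep-learning frameworks."""
    k = len(kernel)
    padded = np.pad(frame, k // 2)
    return np.array([padded[i:i + k] @ kernel for i in range(len(frame))])

rng = np.random.default_rng(0)
frame = rng.standard_normal(80)          # one 80-d spectrogram frame
kernels = rng.standard_normal((512, 5))  # 512 filters of 5 weights each

per_filter = slide_within_frame(frame, kernels[0])
print(per_filter.shape)   # (80,): one filter gives 80 values
all_filters = np.stack([slide_within_frame(frame, kr) for kr in kernels])
print(all_filters.shape)  # (512, 80): 512 * 80 values per frame
print(kernels.size)       # 2560 = 512 * 5 weights
```

Under this reading each frame would become 512 × 80 values and the layer would have 512 × 5 weights, which matches neither the 512-d output frame nor the 512x80x5 weight tensor that PyTorch reports for this layer.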

The layer, when printed in PyTorch, shows up as:

```
(conv): Conv1d(80, 512, kernel_size=(5,), stride=(1,), padding=(2,))
```
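Assuming PyTorch is installed, a layer with the printed hyperparameters can be rebuilt to inspect its weight tensor and the shapes it expects; the input length of 100 frames is arbitrary. `nn.Conv1d` takes input shaped `(batch, in_channels, length)`, so in this layer 80 is the number of values per frame and `length` is the number of frames.

```python
import torch
import torch.nn as nn

# Same hyperparameters as the printed layer.
conv = nn.Conv1d(in_channels=80, out_channels=512, kernel_size=5, stride=1, padding=2)
print(conv)  # Conv1d(80, 512, kernel_size=(5,), stride=(1,), padding=(2,))

# Weight layout is (out_channels, in_channels, kernel_size).
print(conv.weight.shape)  # torch.Size([512, 80, 5])
print(conv.bias.shape)    # torch.Size([512]); bias=True is the default

mel = torch.randn(1, 80, 100)  # (batch, 80 values per frame, 100 frames)
with torch.no_grad():
    out = conv(mel)
print(out.shape)  # torch.Size([1, 512, 100]): 100 frames, 512 values each
```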

and the parameters in this layer show up as:

```
postnet.convolutions.0.0.conv.weight : 512x80x5 = 204800
```

- Shouldn't the weights in this layer instead number 512×5×1, since it only has 512 filters, each of which is 5×1?
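One way to reconcile the numbers, by analogy with the image example at the top of the question (where each "5x5" filter actually holds 5x5x3 weights): if "5×1" is read as a filter's extent along time only, each filter also spans all 80 input channels. The plain-Python arithmetic below, with a made-up helper `conv_weight_count`, compares that reading with the 512×5×1 reading; biases are not counted.

```python
def conv_weight_count(out_channels, in_channels, *kernel_dims):
    """Weights (no biases) of a conv layer whose filters span all input channels."""
    per_filter = in_channels
    for d in kernel_dims:
        per_filter *= d
    return out_channels * per_filter

image_layer = conv_weight_count(56, 3, 5, 5)   # 56 filters of 5x5x3
postnet_layer = conv_weight_count(512, 80, 5)  # 512 filters of 5 frames x 80 channels
single_channel = 512 * 5 * 1                   # the "512x5x1" reading

print(image_layer)     # 4200
print(postnet_layer)   # 204800, matching the printed 512x80x5
print(single_channel)  # 2560
```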