This is a question directly related to the answer provided here: MLR random forest multi label get feature importance

To summarize, the question is about producing a variable importance plot for a multi-label classification problem. I am copying the code that another user provided to produce the VIMP plot:

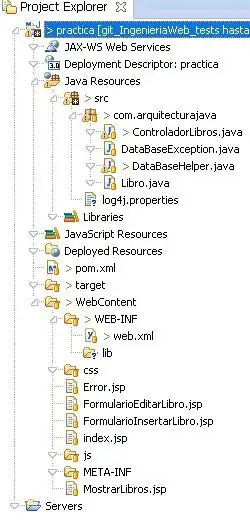

    library(mlr)
    yeast = getTaskData(yeast.task)
    labels = colnames(yeast)[1:14]
    yeast.task = makeMultilabelTask(id = "multi", data = yeast, target = labels)
    lrn.rfsrc = makeLearner("multilabel.randomForestSRC")
    mod2 = train(lrn.rfsrc, yeast.task)
    vi = randomForestSRC::vimp(mod2$learner.model)
    plot(vi, m.target = "label2")

I am not sure what TRUE, FALSE, and All in the randomForestSRC::vimp plot mean. I read the package documentation and still could not figure it out.

How does that distinction (TRUE, FALSE, All) work?