I have 15 features and a binary response variable, and I am interested in predicting probabilities rather than 0/1 class labels. When I trained and tested the RF model with 500 trees, CV, balanced class weights, and balanced samples in the data frame, I achieved good accuracy and a good Brier score. As you can see in the image, the predicted probabilities of class 1 on the test data range from 0 to 1.

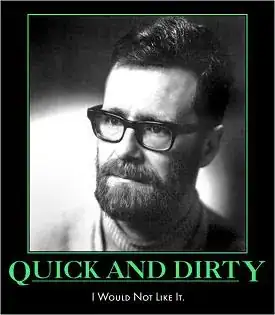

Here is the histogram of predicted probabilities on test data:

Most values fall in 0 to 0.2 and 0.9 to 1, which looks accurate. But when I predict probabilities for unseen data, that is, all data points for which the true value (0 or 1) is unknown, the predicted probabilities for class 1 only range from 0 to 0.5. Why is that so? Shouldn't the values go from 0.5 to 1 as well?

Here is the histogram of predicted probabilities on unseen data:

I am using sklearn's RandomForestClassifier in Python. The code is below:

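# Imports
import pandas as pd
from sklearn import metrics
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFECV
from sklearn.model_selection import train_test_split
from sklearn.utils import resample

# Read the CSV

df = pd.read_csv('path/df_all.csv')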

# Change the type of the variables as needed

df = df.astype({'probabilities': 'int32', 'CPZ_CI_new.tif': 'category'})

# Response variable: the 'probabilities' column, with values between 0 and 1 (cast to int32 above)

y = df['probabilities']

# Separate majority and minority classes

df_majority = df[y == 0]

df_minority = df[y == 1]

# Upsample minority class: sample with replacement, match the majority class size, fixed seed for reproducible results

df_minority_upsampled = resample(df_minority, replace=True, n_samples=100387, random_state=42)

# Combine majority class with upsampled minority class

df1 = pd.concat([df_majority, df_minority_upsampled])

y = df1['probabilities']

X = df1.iloc[:,1:138]

# Change integer values to category

y_01 = y.astype('category')

# Split into training and validation sets

X_train, X_valid, y_train, y_valid = train_test_split(X, y_01, test_size=0.30, random_state=42, stratify=y)
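For comparison, a minimal sketch of the alternative ordering: split first, then upsample only the training part, so that copies of a minority row cannot end up in both the training and the validation set. The toy frame and the column names `f1` and `f2` are made up for illustration; only the `probabilities` column name comes from the code above.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.utils import resample

# Toy imbalanced data (hypothetical): 900 rows of class 0, 100 rows of class 1
rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "probabilities": np.r_[np.zeros(900, dtype=int), np.ones(100, dtype=int)],
    "f1": rng.normal(size=1000),
    "f2": rng.normal(size=1000),
})

# 1) Split BEFORE any resampling, stratified on the label
train, valid = train_test_split(
    toy, test_size=0.30, random_state=42, stratify=toy["probabilities"]
)

# 2) Upsample the minority class inside the training part only
train_major = train[train["probabilities"] == 0]
train_minor = train[train["probabilities"] == 1]
train_minor_up = resample(
    train_minor, replace=True, n_samples=len(train_major), random_state=42
)
train_bal = pd.concat([train_major, train_minor_up])

# The validation rows keep their original class ratio, and no original
# row (identified by its index) appears in both parts
shared_rows = set(train_bal.index) & set(valid.index)
class_counts = train_bal["probabilities"].value_counts().to_dict()
print(class_counts)
print("rows shared between train and validation:", len(shared_rows))
```

With this ordering the validation metrics are computed on rows the model has never seen in any copy, which makes them easier to compare with predictions on truly new data.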

# Model

model = RandomForestClassifier(
    n_estimators=500,
    max_features='sqrt',
    n_jobs=-1,
    oob_score=True,
    bootstrap=True,
    random_state=0,
    class_weight='balanced',
)

# I had 137 variables; to select the optimal subset, I used RFECV

rfecv = RFECV(model, step=1, min_features_to_select=1, cv=10, scoring='neg_brier_score')

rfecv.fit(X_train, y_train)

# Retrained the model with only the 15 selected variables

rf = RandomForestClassifier(
    n_estimators=500,
    max_features='sqrt',
    n_jobs=-1,
    oob_score=True,
    bootstrap=True,
    random_state=0,
    class_weight='balanced',
)

# X1_train is the same data frame as X_train, but with only the 15 selected variables

rf.fit(X1_train, y_train)
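The step from `X_train` to `X1_train` is not shown above. One way it could be done, assuming the 15 columns are the ones RFECV kept, is to use the fitted selector's `support_` mask. The sketch below uses small synthetic data and a much smaller forest so that it runs quickly; `X_toy`, `y_toy`, `selector` and the column names are hypothetical.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFECV

# Synthetic stand-in for X_train / y_train (hypothetical data)
X_arr, y_toy = make_classification(
    n_samples=200, n_features=8, n_informative=3, random_state=0
)
X_toy = pd.DataFrame(X_arr, columns=[f"var_{i}" for i in range(8)])

# Small forest and 3-fold CV only to keep the example fast
selector = RFECV(
    RandomForestClassifier(n_estimators=20, random_state=0),
    step=1,
    min_features_to_select=1,
    cv=3,
    scoring="neg_brier_score",
)
selector.fit(X_toy, y_toy)

# support_ is a boolean mask over the input columns; True means kept by RFECV
selected_cols = X_toy.columns[selector.support_]
X1_toy = X_toy.loc[:, selected_cols]

print(list(selected_cols))
print(X1_toy.shape[1] == selector.n_features_)
```

Selecting by column name rather than by position keeps the same subset reusable for the validation frame and for any later data frame that carries the same column names.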

# Printed ROC AUC on the test data, computed from the predicted class labels

print('roc_auc_score_testing:', metrics.roc_auc_score(y_valid, rf.predict(X1_valid)))

# Predicted probabilities of class 1 on training data

predt = rf.predict_proba(X1_train)[:, 1]

# Predicted probabilities of class 1 on test data

predv = rf.predict_proba(X1_valid)[:, 1]

print('brier_score_training:', metrics.brier_score_loss(y_train, predt))

print('brier_score_testing:', metrics.brier_score_loss(y_valid, predv))

#Output is,

roc_auc_score_testing: 0.9832652130944419

brier_score_training: 0.002380976369884945

brier_score_testing: 0.01669848089917487

# Later, I created a data frame (sample_img) from images of those 15 variables and used the same model to predict probabilities.

IMG_pred=rf.predict_proba(sample_img)

IMG_pred=IMG_pred[:,1]