Character strings that look completely normal when printed to the RStudio console but appear as strange characters when written to csv and opened with excel.

Reproducible example

The following generates the object that appears as the string "a wit", then writes it to a csv:

# install.packages("dplyr")

library(dplyr)

serialized_char <- "580a000000030003060200030500000000055554462d380000001000000001000080090000000661c2a0776974"

(string <- serialized_char %>%

{substring(., seq(1, nchar(.), 2), seq(2, nchar(.), 2))} %>%

paste0("0x", .) %>%

as.integer %>%

as.raw %>%

unserialize())

[1] "a wit"

write.csv(string, "myfile.csv", row.names=F)

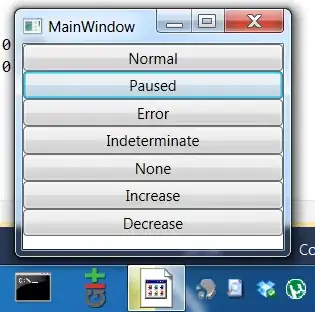

This is what it looks like when written from Mojave (and viewed in excel in OSX Mojave) - contains undesirable characters:

This is when it's written in High Sierra (and viewed in excel in High Sierra) - contains undesirable characters:

When is when written from Windows 10 and viewed in excel on windows 10 (looks good!):

This is when it is written from Mojave, but viewed in excel on Windows 10 - - still contains undesirable characters:

Question

I have a lot of character data of the form above (with characters that looks strange when written to csv and opened in excel) - how can these be cleaned in such a way that the text appears 'normally' in excel.

What I've tried

I have tried 4 things so far

write.csv(string, "myfile.csv", fileEncoding = 'UTF-8')

Encoding(string) <- "latin-1"

Encoding(string) <- "UTF-8"

iconv(string, "UTF-8", "latin1", sub=NA)