I am trying to figure out why the resident memory of one version of a program ("new") is much higher (about 5x) than that of another version of the same program ("baseline"). The program is written in C++ and runs on a Linux cluster with E5-2698 v3 CPUs. The baseline is a multiprocess program and the new one is multithreaded; both run fundamentally the same algorithm and computation on the same input data. In both, there are as many processes or threads as cores (64), with threads pinned to CPUs. I've done a fair amount of heap profiling with both Valgrind Massif and Heaptrack, and they show that the memory allocation is the same (as it should be). The RSS of both the baseline and the new version is larger than the LLC.

The machine has 64 cores (hyperthreads). For both versions, I straced relevant processes and found some interesting results. Here's the strace command I used:

    strace -k -p <pid> -e trace=mmap,munmap,brk

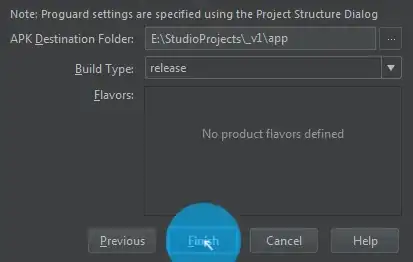

Here are some details about the two versions:

Baseline Version:

- 64 processes

- RES is around 13 MiB per process

- using hugepages (2MB)

- the strace command listed above showed no malloc/free-related syscalls (more on this below)

New Version:

- 2 processes

- 32 threads per process

- RES is around 2 GiB per process

- using hugepages (2MB)

- this version does a fair amount of memcpy calls of large buffers (25MB) with default settings of memcpy (which, I think, is supposed to use non-temporal stores but I haven't verified this)

- in release and profile builds, many mmap and munmap calls were generated. Curiously, none were generated in debug mode (more on that below).

top output (same columns as baseline)

Assuming I'm reading this right, the new version has about 5x higher RSS in aggregate across the entire node (roughly 2 × 2 GiB = 4 GiB versus 64 × 13 MiB ≈ 832 MiB) and significantly more page faults, as measured with perf stat, than the baseline version. When I run perf record/report on the page-faults event, it shows that all of the page faults come from a memset in the program. However, the baseline version has that memset as well and shows no page faults from it (as verified using perf record -e page-faults). One idea is that some other memory pressure is causing the memset to page-fault.
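To test whether the memset faults come from touching freshly mapped pages rather than from memory pressure, the fault count around a memset can be measured directly. The following is a minimal sketch, assuming Linux and using an anonymous mmap as a stand-in for a fresh large allocation; `minor_faults` and `memset_fault_counts` are hypothetical helper names, not part of the program in question.

```cpp
#include <sys/mman.h>
#include <sys/resource.h>
#include <cstddef>
#include <cstring>

// Minor (soft) page faults taken by this process so far.
long minor_faults() {
    rusage usage{};
    getrusage(RUSAGE_SELF, &usage);
    return usage.ru_minflt;
}

struct FaultCounts {
    long first_touch;   // faults during memset on a fresh mapping
    long second_touch;  // faults during memset on the same, now-resident mapping
};

// Maps `bytes` of anonymous memory, memsets it twice, and reports the faults
// each memset caused. Returns {-1, -1} if the mapping fails.
FaultCounts memset_fault_counts(std::size_t bytes) {
    void* p = mmap(nullptr, bytes, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return {-1, -1};

    long before = minor_faults();
    std::memset(p, 1, bytes);   // first touch: the kernel must back every page
    long middle = minor_faults();
    std::memset(p, 2, bytes);   // pages are already resident
    long after = minor_faults();

    munmap(p, bytes);
    return {middle - before, after - middle};
}
```

Calling `memset_fault_counts(25u * 1024 * 1024)` would be expected to report faults on the first memset and few or none on the second. If the new version receives a fresh mapping on every outer-loop iteration, each memset there would behave like the first one, while a reused buffer would behave like the second.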

So, my question is how can I understand where this large increase in resident memory is coming from? Are there performance monitor counters (i.e., perf events) that can help shed light on this? Or, is there a heaptrack- or massif-like tool that will allow me to see what is the actual data making up the RES footprint?
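One place to look for what makes up RES is `/proc/<pid>/smaps`, which lists per-mapping fields such as `Rss`, `Anonymous`, and `AnonHugePages` (the latter covers transparent huge pages). The following is a minimal sketch of summing those fields; `sum_smaps_fields` is a hypothetical helper name, and the parsing assumes the usual `Key: <number> kB` line shape.

```cpp
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Sums the per-mapping "Key: <n> kB" fields of smaps-formatted text.
// Returns totals in kB keyed by field name (e.g. "Rss", "AnonHugePages").
std::map<std::string, long> sum_smaps_fields(std::istream& in) {
    std::map<std::string, long> totals;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string key, unit;
        long value = 0;
        // Mapping header lines ("7f..-7f.. rw-p ...") and VmFlags lines do not
        // match the "<Key>: <number> kB" shape and are skipped.
        if (fields >> key >> value >> unit && unit == "kB" &&
            !key.empty() && key.back() == ':') {
            key.pop_back();  // drop the trailing ':'
            totals[key] += value;
        }
    }
    return totals;
}
```

For a live process this could be fed from `std::ifstream("/proc/<pid>/smaps")`. Comparing the totals (and the largest individual mappings) between the baseline and the new version may show whether the extra resident memory sits in anonymous mappings, huge pages, or elsewhere.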

One of the most interesting things I noticed while poking around is the inconsistency of the mmap and munmap calls as mentioned above. The baseline version didn't generate any of those; the profile and release builds (basically, -march=native and -O3) of the new version DID issue those syscalls but the debug build of the new version DID NOT make calls to mmap and munmap (over tens of seconds of stracing). Note that the application is basically mallocing an array, doing compute, and then freeing that array -- all in an outer loop that runs many times.

It seems that the allocator is able to reuse the allocated buffer from the previous outer-loop iteration in some cases but not others, although I don't understand how these things work or how to influence them. I believe allocators have a notion of a time window after which application memory is returned to the OS. One guess is that in the optimized code (release builds), vectorized instructions make the computation much faster. That may change the timing of the program such that the memory is returned to the OS, although I don't see why this isn't happening in the baseline. Maybe the threading is influencing this?
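If the default allocator here is glibc malloc (an assumption; the post does not say), one way to influence whether large buffers are mmap'ed and munmap'ed on every iteration is through its size thresholds, set with `mallopt`. The following is a minimal sketch; `keep_large_buffers_in_heap` is a hypothetical helper name, and whether this changes the behavior seen in strace would need to be checked.

```cpp
#include <malloc.h>  // glibc-specific: mallopt and the M_* constants

// Asks glibc malloc to serve allocations below `threshold_bytes` from the heap
// and to trim free memory at the top of the heap only once it exceeds
// `threshold_bytes`. Returns true only if both settings were accepted.
bool keep_large_buffers_in_heap(int threshold_bytes) {
    // Requests at or above M_MMAP_THRESHOLD get their own mmap and are
    // munmap'ed on free. Setting it explicitly also disables glibc's
    // dynamic adjustment of this threshold.
    bool ok = mallopt(M_MMAP_THRESHOLD, threshold_bytes) == 1;
    // Free space at the top of the heap is returned to the OS once it
    // exceeds M_TRIM_THRESHOLD.
    ok = (mallopt(M_TRIM_THRESHOLD, threshold_bytes) == 1) && ok;
    return ok;
}
```

Calling `keep_large_buffers_in_heap(30 * 1024 * 1024)` early in `main` would put 25MB buffers below the mmap threshold. The same settings can be tried without recompiling through the `MALLOC_MMAP_THRESHOLD_` and `MALLOC_TRIM_THRESHOLD_` environment variables. For the multithreaded version, glibc's per-thread arenas (limited by `M_ARENA_MAX` or `MALLOC_ARENA_MAX`) may also be worth experimenting with, since each arena keeps its own free memory.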

(As a shot-in-the-dark comment, I'll also say that I tried the jemalloc allocator, both with default settings and with changed settings. With jemalloc I got a 30% slowdown with the new version but no change on the baseline. I was a bit surprised, as my previous experience with jemalloc was that it tends to produce some speedup with multithreaded programs. I'm adding this comment in case it triggers some other thoughts.)