I am using Azure Data Factory V2 to transfer some csv files from Azure Data Lake to Azure Synapse

I have a loop to find all files in special folder on my DataLake.

After i have a DataFlow to transfer data from staging to main table.

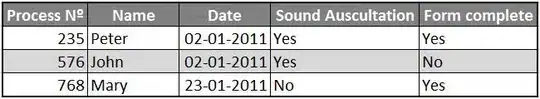

In my for-each loop, at first i am cleaning my staging table by a SP then I am reading data from csv file (one by one). to transfer data from CVS to my staging table i am using Copy Data task. I am reading all columns as varchar and all columns in staging table are varchar(there is no casting here)

Each file has about 20 columns and about 216 rows.

I am wondering why for just three files my pipeline takes so much times?

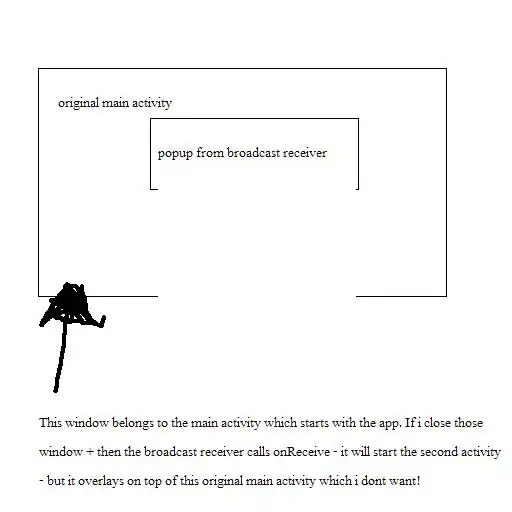

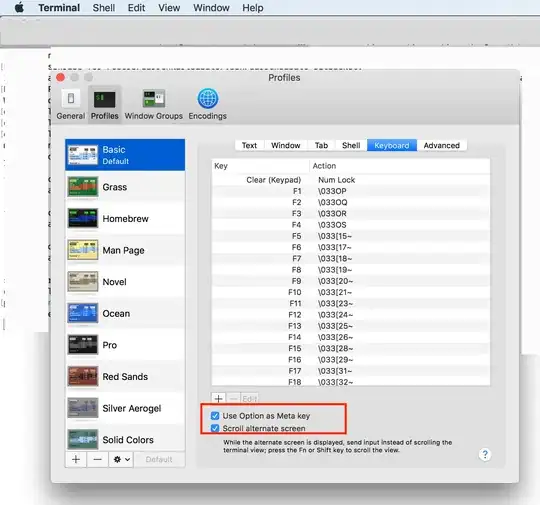

this is my task for cleaning staging table.

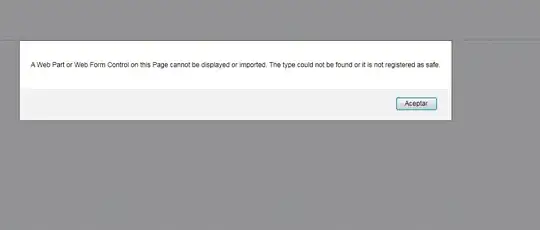

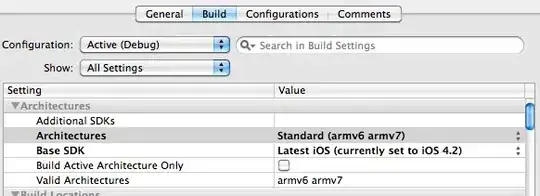

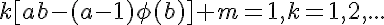

This is my SQL server scale and usage.

I ran my pipeline exactly after resuming my Synapse service. that is only pipeline and service that work with my synapse.

This is my stored procedure :

CREATE PROCEDURE [stg].[...._Truncate]

AS

TRUNCATE TABLE [stg].[....e]

GO

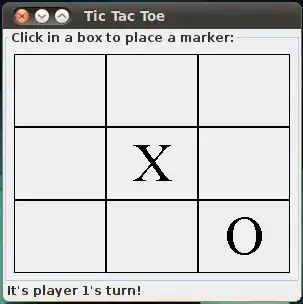

This is my DF

SELECT

Convert(int,S.[MMSI]) AS [MMSI] ,

Convert(int,S.[IMO] ) AS [IMO] ,

Convert(int,S.[SHIP_ID] )AS [SHIP_ID] ,

Convert(numeric(8, 5),S.[LAT] ) AS [LAT] ,

Convert(numeric(8, 5),S.[LON] ) AS [LON] ,

Convert(int,S.[SPEED] ) AS [SPEED] ,

Convert(int,S.[HEADING] ) AS [HEADING] ,

Convert(int,S.[COURSE] ) AS [COURSE] ,

Convert(int,S.[STATUS] ) AS [STATUS] ,

Convert(datetime,S.[TIMESTAMP] ) AS [TIMESTAMP] ,

Convert(varchar(5),S.[DSRC] ) AS [DSRC] ,

Convert(int,S.[UTC_SECONDS] ) AS [UTC_SECONDS] ,

'M....._Simple' AS [ETL_CREATED_BY],

GETUTCDATE() AS [ETL_CREATED_DATE],

CONVERT(BIGINT, replace(CONVERT(VARCHAR, GETDATE(), 112), '/', '') + replace(CONVERT(VARCHAR, GETDATE(), 108), ':', '')) AS [ETL_PROCESS_ID]

FROM [stg].[....e] AS s

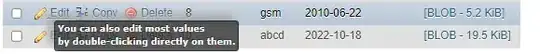

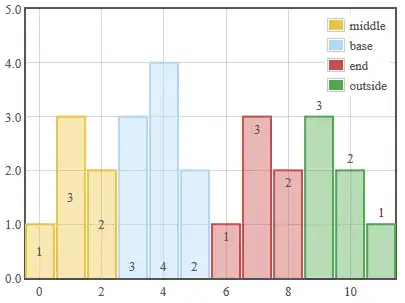

This is my Derived columns

This is ending mapping in my data flow

Should I do somethings here ?