I wrote an application (Springboot + Data JPA + Data Rest) that keeps throwing OutOfMemoryException at me when the application loads. I can skip that code that runs on application start but then the exception may happen later down the road. It's probably best to show you what happens on application start because it's actually super simple and should not cause any problems imho:

@SpringBootApplication

@EnableAsync

@EnableJpaAuditing

public class ScraperApplication {

public static void main(String[] args) {

SpringApplication.run(ScraperApplication.class, args);

}

}

@Component

@RequiredArgsConstructor(onConstructor = @__(@Autowired))

public class DefaultDataLoader {

private final @NonNull LuceneService luceneService;

@Transactional

@EventListener(ApplicationReadyEvent.class)

public void load() {

luceneService.reindexData();

}

}

@Service

@RequiredArgsConstructor(onConstructor = @__(@Autowired))

public class LuceneService {

private static final Log LOG = LogFactory.getLog(LuceneService.class);

private final @NonNull TrainingRepo trainingRepo;

private final @NonNull EntityManager entityManager;

public void reindexData() {

LOG.info("Reindexing triggered");

FullTextEntityManager fullTextEntityManager = Search.getFullTextEntityManager(entityManager);

fullTextEntityManager.purgeAll(Training.class);

LOG.info("Index purged");

int page = 0;

int size = 100;

boolean morePages = true;

Page<Training> pageData;

while (morePages) {

pageData = trainingRepo.findAll(PageRequest.of(page, size));

LOG.info("Loading page " + (page + 1) + "/" + pageData.getTotalPages());

pageData.getContent().stream().forEach(t -> fullTextEntityManager.index(t));

fullTextEntityManager.flushToIndexes(); // flush regularly to keep memory footprint low

morePages = pageData.getTotalPages() > ++page;

}

fullTextEntityManager.flushToIndexes();

LOG.info("Index flushed");

}

}

You can see what I am doing is clear out the index, read all Trainings from the TrainingRepo in a paged way (100 at a time) and write them into the index. Not much going on actually. A few minutes after the "Index purged" message I get this - and only this:

java.lang.OutOfMemoryError: Java heap space

In the logs I get to see "Index purged" but never see any "Loading page ..." message, so it must be stuck on the findAll() call.

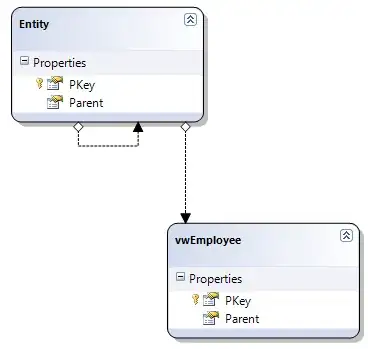

I had the JVM write a heap dump and loaded it into Eclipse Memory Analyzer and got a full stack trace: https://gist.github.com/mathias-ewald/2fddb9762427374bb04d332bd0b6b499

I also looked around the report a bit, but I need help interpreting this information which is why I attached some screenshots from Eclipse Memory Analyzer.

EDIT:

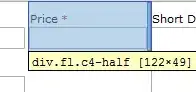

I just enabled "show-sql" and saw this before everything hung:

Hibernate: select training0_.id as id1_9_, training0_.created_date as created_2_9_, training0_.description as descript3_9_, training0_.duration_days as duration4_9_, training0_.execution_id as executi14_9_, training0_.level as level5_9_, training0_.modified_date as modified6_9_, training0_.name as name7_9_, training0_.price as price8_9_, training0_.product as product9_9_, training0_.quality as quality10_9_, training0_.raw as raw11_9_, training0_.url as url12_9_, training0_.vendor as vendor13_9_ from training training0_ where not (exists (select 1 from training training1_ where training0_.url=training1_.url and training0_.created_date<training1_.created_date)) limit ?

Hibernate: select execution0_.id as id1_1_0_, execution0_.created_date as created_2_1_0_, execution0_.duration_millis as duration3_1_0_, execution0_.message as message4_1_0_, execution0_.modified_date as modified5_1_0_, execution0_.scraper as scraper6_1_0_, execution0_.stats_id as stats_id8_1_0_, execution0_.status as status7_1_0_, properties1_.execution_id as executio1_2_1_, properties1_.properties as properti2_2_1_, properties1_.properties_key as properti3_1_, stats2_.id as id1_5_2_, stats2_.avg_quality as avg_qual2_5_2_, stats2_.max_quality as max_qual3_5_2_, stats2_.min_quality as min_qual4_5_2_, stats2_.null_products as null_pro5_5_2_, stats2_.null_vendors as null_ven6_5_2_, stats2_.products as products7_5_2_, stats2_.tags as tags8_5_2_, stats2_.trainings as training9_5_2_, stats2_.vendors as vendors10_5_2_, producthis3_.stats_id as stats_id1_6_3_, producthis3_.product_histogram as product_2_6_3_, producthis3_.product_histogram_key as product_3_3_, taghistogr4_.stats_id as stats_id1_7_4_, taghistogr4_.tag_histogram as tag_hist2_7_4_, taghistogr4_.tag_histogram_key as tag_hist3_4_, vendorhist5_.stats_id as stats_id1_8_5_, vendorhist5_.vendor_histogram as vendor_h2_8_5_, vendorhist5_.vendor_histogram_key as vendor_h3_5_ from execution execution0_ left outer join execution_properties properties1_ on execution0_.id=properties1_.execution_id left outer join stats stats2_ on execution0_.stats_id=stats2_.id left outer join stats_product_histogram producthis3_ on stats2_.id=producthis3_.stats_id left outer join stats_tag_histogram taghistogr4_ on stats2_.id=taghistogr4_.stats_id left outer join stats_vendor_histogram vendorhist5_ on stats2_.id=vendorhist5_.stats_id where execution0_.id=?

Apparently, it creates the statement to fetch all the Training entities but the Execution statement is the last it manages to execute.

I changed the relation from Training to Execution from @ManyToOne to @ManyToOne(fetch = FetchType.LAZY) and suddenly I the code was able to load data into the index again. So I am thinking something might be wrong with my Execution entity mapping. Let me share the code with you:

@Entity

@Data

@EntityListeners(AuditingEntityListener.class)

public class Execution {

public enum Status { SCHEDULED, RUNNING, SUCCESS, FAILURE };

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

@ToString.Include

private Long id;

@Column(updatable = false)

private String scraper;

@CreatedDate

private LocalDateTime createdDate;

@LastModifiedDate

private LocalDateTime modifiedDate;

@Min(0)

@JsonProperty(access = Access.READ_ONLY)

private Long durationMillis;

@ElementCollection(fetch = FetchType.EAGER)

private Map<String, String> properties;

@NotNull

@Enumerated(EnumType.STRING)

private Status status;

@Column(length = 9999999)

private String message;

@EqualsAndHashCode.Exclude

@OneToOne(cascade = CascadeType.ALL)

private Stats stats;

}

And since it is a relation of Execution, here's the Stats entity, too:

@Entity

@Data

public class Stats {

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

@ToString.Include

private Long id;

private Long trainings;

private Long vendors;

private Long products;

private Long tags;

private Long nullVendors;

private Long nullProducts;

private Double minQuality;

private Double avgQuality;

private Double maxQuality;

@ElementCollection(fetch = FetchType.EAGER)

private Map<String, Long> vendorHistogram;

@ElementCollection(fetch = FetchType.EAGER)

private Map<String, Long> productHistogram;

@ElementCollection(fetch = FetchType.EAGER)

private Map<String, Long> tagHistogram;

}