I'd like to use log severity with the Google Cloud Logging agent on a Linux (Debian) VM running on Compute Engine.

The Compute Engine instance is a debian-9 n2-standard-4 machine.

I've installed the Cloud Logging agent by following the documentation.

$ curl -sSO https://dl.google.com/cloudagents/add-logging-agent-repo.sh

$ sudo bash add-logging-agent-repo.sh

$ sudo apt-get install google-fluentd

$ sudo apt-get install -y google-fluentd-catch-all-config-structured

$ sudo service google-fluentd start

According to this paragraph of the documentation, log severity is picked up if the log line is a serialized JSON object and the detect_json option is set to true.

So I log something like the line below, but unfortunately the entry shows up without any severity in GCP.

$ logger '{"severity":"ERROR","message":"This is an error"}'

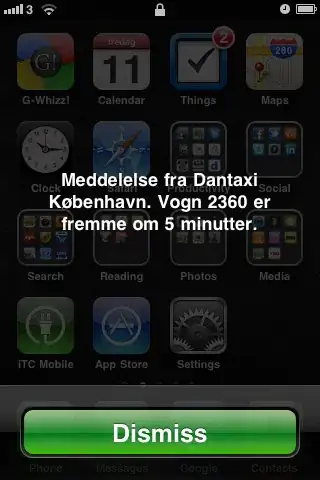
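One thing I checked while debugging: logger does not write the bare JSON to /var/log/syslog, it prepends a syslog header. Below is a minimal sketch of that check, with no agent involved. The timestamp, host name (my-vm) and tag (my-user) are made up for illustration, and I am only assuming the line on my VM has this general shape.

```shell
#!/bin/sh
# The payload passed to logger in the question.
payload='{"severity":"ERROR","message":"This is an error"}'

# Hypothetical syslog line: timestamp, host and tag are invented for this sketch.
line="Jan  1 00:00:00 my-vm my-user: $payload"

# Drop everything up to the first ": " (the syslog header) to recover the message part.
msg="${line#*: }"

# Crude check: does the text start with a JSON object?
case "$line" in
  "{"*) line_starts_with_brace=yes ;;
  *)    line_starts_with_brace=no ;;
esac
case "$msg" in
  "{"*) msg_starts_with_brace=yes ;;
  *)    msg_starts_with_brace=no ;;
esac

echo "whole line starts with '{': $line_starts_with_brace"
echo "message part starts with '{': $msg_starts_with_brace"
```

So the full line is not JSON, only the message part is. I don't know whether that matters for detect_json, but it may be relevant.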

But I would expect something like this:

I don't mind the type of the log entry being textPayload or jsonPayload.

The file /etc/google-fluentd/google-fluentd.conf, with detect_json enabled:

$ cat /etc/google-fluentd/google-fluentd.conf

# Master configuration file for google-fluentd

# Include any configuration files in the config.d directory.

#

# An example "catch-all" configuration can be found at

# https://github.com/GoogleCloudPlatform/fluentd-catch-all-config

@include config.d/*.conf

# Prometheus monitoring.

<source>

@type prometheus

port 24231

</source>

<source>

@type prometheus_monitor

</source>

# Do not collect fluentd's own logs to avoid infinite loops.

<match fluent.**>

@type null

</match>

# Add a unique insertId to each log entry that doesn't already have it.

# This helps guarantee the order and prevent log duplication.

<filter **>

@type add_insert_ids

</filter>

# Configure all sources to output to Google Cloud Logging

<match **>

@type google_cloud

buffer_type file

buffer_path /var/log/google-fluentd/buffers

# Set the chunk limit conservatively to avoid exceeding the recommended

# chunk size of 5MB per write request.

buffer_chunk_limit 512KB

# Flush logs every 5 seconds, even if the buffer is not full.

flush_interval 5s

# Enforce some limit on the number of retries.

disable_retry_limit false

# After 3 retries, a given chunk will be discarded.

retry_limit 3

# Wait 10 seconds before the first retry. The wait interval will be doubled on

# each following retry (20s, 40s...) until it hits the retry limit.

retry_wait 10

# Never wait longer than 5 minutes between retries. If the wait interval

# reaches this limit, the exponentiation stops.

# Given the default config, this limit should never be reached, but if

# retry_limit and retry_wait are customized, this limit might take effect.

max_retry_wait 300

# Use multiple threads for processing.

num_threads 8

# Use the gRPC transport.

use_grpc true

# If a request is a mix of valid log entries and invalid ones, ingest the

# valid ones and drop the invalid ones instead of dropping everything.

partial_success true

# Enable monitoring via Prometheus integration.

enable_monitoring true

monitoring_type opencensus

detect_json true

</match>

The file /etc/google-fluentd/config.d/syslog.conf:

$ cat /etc/google-fluentd/config.d/syslog.conf

<source>

@type tail

# Parse the timestamp, but still collect the entire line as 'message'

format syslog

path /var/log/syslog

pos_file /var/lib/google-fluentd/pos/syslog.pos

read_from_head true

tag syslog

</source>

What am I missing?

Note: I am aware of the gcloud workaround, but it is not ideal since it logs everything under the resource type 'Global' rather than under my VM.