I'm getting cuda out of memory error. The error is shown below

RuntimeError: CUDA out of memory. Tried to allocate 256.00 MiB (GPU 0; 23.65 GiB total capacity; 21.65 GiB already allocated; 242.88 MiB free; 22.55 GiB reserved in total by PyTorch)

I'm able to train the model after I reduce the batch_size. When I checked the output of nvidia-smi, I see a 40% of memory is still free. Here is the output.

What could be possible reason for this?

pytorch: 1.4

cuda: 10.2

input_size: (512, 512, 4)

using half-precision

More information:

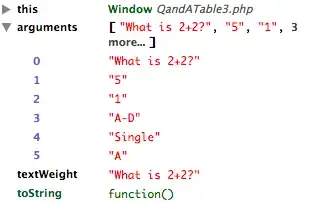

The plot of gpu-utilization is shown below

The numbers at each peak represent the batch_size. It seems the initial memory requirement is much higher than memory needed afterward. Can someone explain?