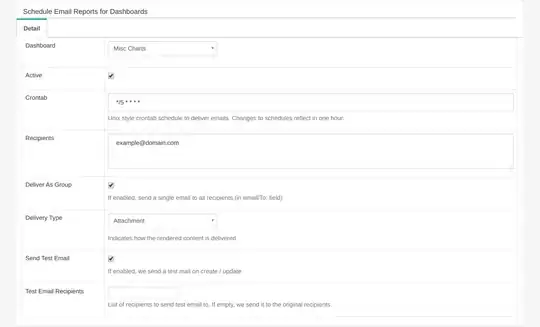

I am running Superset via Docker. I enabled the Email Report feature and tried it.

However, I only receive the test email report. No scheduled emails arrive after that.

This is my CeleryConfig in superset_config.py:

    class CeleryConfig(object):
        BROKER_URL = 'sqla+postgresql://superset:superset@db:5432/superset'
        CELERY_IMPORTS = (
            'superset.sql_lab',
            'superset.tasks',
        )
        CELERY_RESULT_BACKEND = 'db+postgresql://superset:superset@db:5432/superset'
        CELERYD_LOG_LEVEL = 'DEBUG'
        CELERYD_PREFETCH_MULTIPLIER = 10
        CELERY_ACKS_LATE = True
        CELERY_ANNOTATIONS = {
            'sql_lab.get_sql_results': {
                'rate_limit': '100/s',
            },
            'email_reports.send': {
                'rate_limit': '1/s',
                'time_limit': 120,
                'soft_time_limit': 150,
                'ignore_result': True,
            },
        }
        CELERYBEAT_SCHEDULE = {
            'email_reports.schedule_hourly': {
                'task': 'email_reports.schedule_hourly',
                'schedule': crontab(minute=1, hour='*'),
            },
        }

According to the documentation, I need to run both the Celery worker and Celery beat:

    celery worker --app=superset.tasks.celery_app:app --pool=prefork -O fair -c 4
    celery beat --app=superset.tasks.celery_app:app

I added them to the 'docker-compose.yml':

    superset-worker:
      build: *superset-build
      command: >
        sh -c "celery worker --app=superset.tasks.celery_app:app -Ofair -f /app/celery_worker.log &&
        celery beat --app=superset.tasks.celery_app:app -f /app/celery_beat.log"
      env_file: docker/.env
      restart: unless-stopped
      depends_on: *superset-depends-on
      volumes: *superset-volumes

The Celery worker does work: the first (test) email is sent, and the worker's log file is created. Celery beat, however, does not seem to be running, and no 'celery_beat.log' is ever created.
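One thing worth checking is how `&&` chains the two commands in the `command:` entry: the shell starts the second command only after the first one has exited with status 0. The following is a minimal sketch of that behavior, not a Celery reproduction. `sleep 3` is a hypothetical stand-in for a long-running foreground process such as the worker, and `touch` on a temporary marker file stands in for the second command creating its log file.

```shell
# Hypothetical stand-ins: `sleep 3` plays the long-running first command,
# `touch "$marker"` plays the second command that should create a file.
marker="$(mktemp -u)"   # a path that does not exist yet

# Chain the two with &&, as the compose command does, and run it in the background.
sh -c "sleep 3 && touch '$marker'" &
chain_pid=$!

sleep 1
# While the first command is still running, the second has not started.
if [ -e "$marker" ]; then during="created"; else during="missing"; fi
echo "while first command runs: marker is $during"

wait "$chain_pid"
# Only after the first command exits successfully does the second one run.
if [ -e "$marker" ]; then after="created"; else after="missing"; fi
echo "after first command exits: marker is $after"

rm -f "$marker"
```

If the worker behaves like the stand-in and stays in the foreground for the lifetime of the container, the beat command would never be reached, which would match the missing 'celery_beat.log'. This is an assumption to verify against the container's process list and logs, not a confirmed diagnosis.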

If you'd like a deeper insight, here's the commit with the full implementation of the functionality.

How do I correctly configure celery beat? How can I debug this?