I am following instructions I found online to implement asynchronous produce with Kafka. Here is what my producer looks like:

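    import org.apache.kafka.clients.producer.Callback;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.clients.producer.RecordMetadata;

    // send() only appends the record to the producer's buffer and returns;
    // the callback runs later, once the broker has responded.
    public void asynSend(String topic, Integer partition, String message) {
        ProducerRecord<Object, Object> data = new ProducerRecord<>(topic, partition, null, message);
        producer.send(data, new DefaultProducerCallback());
    }

    private static class DefaultProducerCallback implements Callback {
        @Override
        public void onCompletion(RecordMetadata recordMetadata, Exception e) {
            if (e != null) {
                // Pass the exception along so the log shows why the send failed.
                logger.error("Asynchronous produce failed", e);
            }
        }
    }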

And I produce in a for loop like this:

    for (int i = 0; i < 5000; i++) {
        int partition = i % 2;
        FsProducerFactory.getInstance().asynSend(topic, partition, i + "th message to partition " + partition);
    }

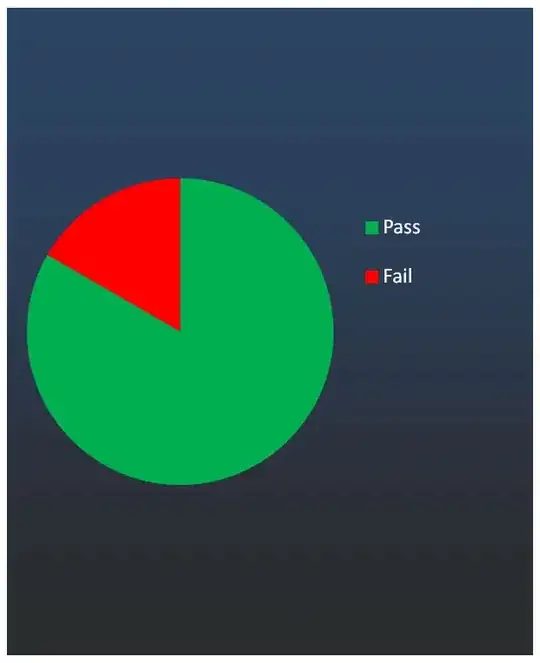

However, some message may get lost. As shown below, message from 4508 to 4999 not delivered.

I think the cause is that the producer process shuts down while records are still sitting in the producer's buffer, so anything not yet sent at that point is lost. Adding this line after the for loop solves the problem:

    producer.flush();
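To make the buffering described above concrete, here is a toy model written with only the Java standard library. It is a sketch, not Kafka's implementation: the class `BufferedSenderSketch`, its methods, and the 1 ms simulated latency are all hypothetical names and values chosen for illustration. It shows that `send` returns immediately, that records still in the buffer are not delivered if the program stops right after the loop, and that a single `flush` at the end blocks once until the buffer has drained.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Toy model of a buffering asynchronous sender. This is NOT Kafka's
// implementation; every name here is hypothetical.
class BufferedSenderSketch {
    private final BlockingQueue<String> buffer = new LinkedBlockingQueue<>();
    private final List<String> delivered = new ArrayList<>(); // stand-in for the broker
    private final Object lock = new Object();
    private int pending = 0; // records accepted by send() but not yet delivered

    BufferedSenderSketch() {
        Thread sender = new Thread(() -> {
            try {
                while (true) {
                    String record = buffer.take();
                    Thread.sleep(1); // simulated network round trip (assumed value)
                    synchronized (lock) {
                        delivered.add(record);
                        pending--;
                        lock.notifyAll(); // wake up any thread waiting in flush()
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        // A daemon thread does not keep the JVM alive, so records still in
        // the buffer are dropped when the main thread exits.
        sender.setDaemon(true);
        sender.start();
    }

    // Non-blocking: only enqueues the record and returns.
    void send(String record) {
        synchronized (lock) {
            pending++;
        }
        buffer.add(record);
    }

    // Blocks the caller until every record sent so far has been delivered.
    void flush() {
        synchronized (lock) {
            try {
                while (pending > 0) {
                    lock.wait();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while flushing", e);
            }
        }
    }

    int deliveredCount() {
        synchronized (lock) {
            return delivered.size();
        }
    }

    // Sends `total` records and reports how many had been delivered at the
    // moment the loop (and the optional flush) finished.
    static int deliveredAfterLoop(int total, boolean flushAtEnd) {
        BufferedSenderSketch producer = new BufferedSenderSketch();
        for (int i = 0; i < total; i++) {
            producer.send(i + "th message");
        }
        if (flushAtEnd) {
            producer.flush();
        }
        return producer.deliveredCount();
    }

    public static void main(String[] args) {
        System.out.println("without flush: " + deliveredAfterLoop(200, false) + " of 200 delivered");
        System.out.println("with flush:    " + deliveredAfterLoop(200, true) + " of 200 delivered");
    }
}
```

In this model the individual `send` calls never wait; only the one `flush` call does, which is different from waiting on every record. For the real client, the `KafkaProducer` Javadoc describes `flush()` as blocking until the buffered records have completed, and `close()` as blocking until previously sent requests complete, so calling either one before the process exits should cover the case described here.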

However, I am not sure whether this is a good solution, because I have seen it mentioned that flush() effectively makes an asynchronous send synchronous. Can anyone explain this or help me improve it?