I've been using LabelEncoder to encode a categorical output:

from keras.utils import np_utils

from sklearn.preprocessing import LabelEncoder

label = LabelEncoder()

y_train = np_utils.to_categorical(label.fit_transform(y_train))

# reuse the encoder fitted on y_train so both sets share one label-to-integer mapping

y_test = np_utils.to_categorical(label.transform(y_test))

There are 4 classes in the output:

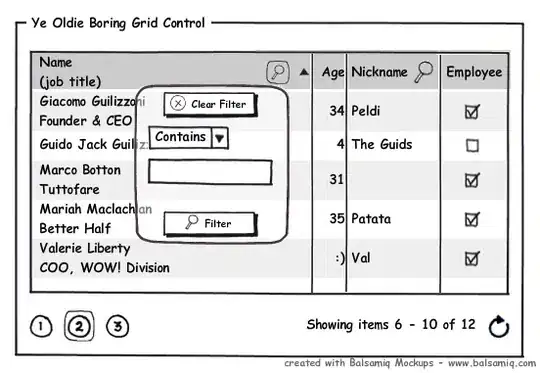

After encoding, the labels look like this:
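As a rough illustration of what this encoding produces, here is a minimal sketch. The four class names are hypothetical placeholders (the real ones are not shown in the text), and `np.eye` is used as a stand-in for `np_utils.to_categorical` only so that the snippet runs without Keras.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Hypothetical class names, used only for illustration.
raw_labels = np.array(["walk", "run", "sit", "stand", "run", "sit"])

label = LabelEncoder()
int_labels = label.fit_transform(raw_labels)

# classes_ is sorted; the class at position i is encoded as integer i.
print(label.classes_)
print(int_labels)

# One-hot encode the integer labels (stand-in for np_utils.to_categorical).
one_hot = np.eye(len(label.classes_))[int_labels]
print(one_hot.shape)  # (number of samples, number of classes)
```

Each row of `one_hot` has a single 1 in the column whose index matches the integer code, so the column order follows `label.classes_`.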

And the model looks like this:

from keras.models import Sequential

from keras.layers import Conv1D, Flatten, Dense

model0 = Sequential()

model0.add(Conv1D(32, kernel_size=(3), input_shape=input_shape))

model0.add(Conv1D(64, kernel_size=(3)))

model0.add(Conv1D(128, kernel_size=(3)))

model0.add(Conv1D(64, kernel_size=(3)))

model0.add(Conv1D(32, kernel_size=(3)))

model0.add(Flatten())

model0.add(Dense(128, activation='relu'))

model0.add(Dense(12, activation='relu'))

model0.add(Dense(4, activation='softmax'))

model0.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

After training, how do I get a prediction as one of the original classes? I tried using:

model0.predict_classes(feature.reshape(1,num_features,1))

array([3], dtype=int64)

How can I tell which of the original classes this output corresponds to?
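One possible way to map a predicted index back to a label is `LabelEncoder.inverse_transform`, sketched below. This assumes the same encoder instance that was fitted on the training labels is still available. The class names and the probability row are hypothetical stand-ins: the row plays the role of what `model0.predict(...)` would return for one sample.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Hypothetical class names standing in for the real training labels.
label = LabelEncoder()
label.fit(["walk", "run", "sit", "stand"])

# Hypothetical softmax output for one sample (stand-in for model0.predict).
probs = np.array([[0.05, 0.10, 0.15, 0.70]])

# Index of the most probable class, the same idea as predict_classes.
pred_index = probs.argmax(axis=1)

# Translate the integer index back to the original label.
pred_label = label.inverse_transform(pred_index)
print(pred_index, pred_label)
```

Equivalently, `label.classes_[pred_index]` gives the same result, because `classes_` stores the original labels in the order of their integer codes.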