I have been working on a multitask model using VGG16 with no dropout layers. I found that the validation accuracy is higher than the training accuracy, and the validation loss is lower than the training loss.

I can't figure out why this is happening in the model.

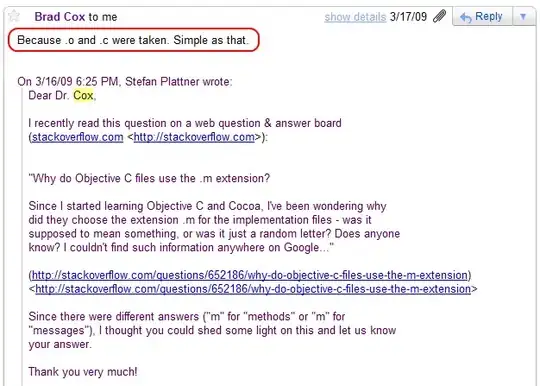

Below is the training plot:

Data:

I am using randomly shuffled images split into 70% train, 15% validation, and 15% test. The results on the 15% test set are as follows:

Do you think these results are too good to be true?
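For reference, a shuffled 70/15/15 split like the one described above can be sketched in Python as follows. This is a minimal sketch, not the code actually used for the model: it assumes the images are addressed by integer index, and the function name `split_indices` and the fixed `seed` are hypothetical. It also checks that no image lands in more than one split.

```python
import random


def split_indices(n_images, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle image indices and split them into train/val/test lists.

    Hypothetical helper: whatever remains after the train and validation
    slices goes to test, so the defaults give roughly 70/15/15.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # reproducible random shuffle

    n_train = round(n_images * train_frac)
    n_val = round(n_images * val_frac)

    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]

    # Sanity check: the three splits must not share any image index.
    assert set(train).isdisjoint(val)
    assert set(train).isdisjoint(test)
    assert set(val).isdisjoint(test)
    return train, val, test


train, val, test = split_indices(1000)
print(len(train), len(val), len(test))  # 700 150 150
```

Because the split is done on image indices, every task label attached to a given image stays in the same split.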