I am using ConfusionMatrixDisplay from the sklearn library to plot a confusion matrix for two lists I have. The results are all correct, but one detail bothers me: the color intensity in the confusion matrix seems to match the number of instances rather than the accuracy of the classification.

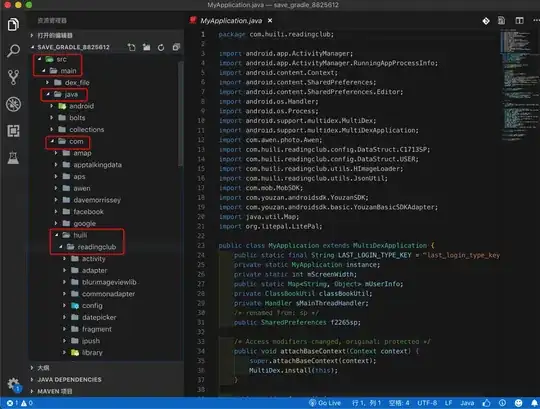

This is the code I am using to plot the confusion matrix:

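    import matplotlib.pyplot as plt
    from sklearn.metrics import classification_report, confusion_matrix, ConfusionMatrixDisplay

    # y_true and y_pred are the two lists of true and predicted labels
    target_names = ['Empty', 'Human', 'Dog', 'Dog&Human']
    labels_names = [0, 1, 2, 3]

    print(classification_report(y_true, y_pred, labels=labels_names, target_names=target_names))

    cm = confusion_matrix(y_true, y_pred, labels=labels_names)
    disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=target_names)
    disp = disp.plot(cmap=plt.cm.Blues, values_format='g')
    plt.show()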

The results I get from both the report and the confusion matrix are shown in the image above.

As you can see, both the "Dog" and "Dog&Human" classes achieved a precision of 1, but "Dog" is the only one shown in dense blue. Even the "Empty" class, which has some misclassified instances, has a darker color, which makes it look as if it was classified better. This is obviously due to the number of samples in each class, but shouldn't the color depend on the classification performance rather than on the number of correctly classified instances?

I tried normalizing the confusion matrix, and that solves the issue, but I would prefer a matrix that shows the actual counts rather than percentages. Is there a solution for this? Thanks a lot.
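
To make the goal concrete, here is a minimal sketch of one possible approach: let a normalized matrix drive the colors, then overwrite the cell text with the raw counts. The helper name `plot_counts_with_normalized_colors` and the toy `y_true` / `y_pred` lists are made up for illustration. It assumes a scikit-learn version where `confusion_matrix` accepts `normalize` and the display exposes its cell labels as `text_`. Note that `normalize="true"` scales each row, so the diagonal is per-class recall; `normalize="pred"` would put precision on the diagonal instead.

```python
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay


def plot_counts_with_normalized_colors(y_true, y_pred, labels, display_labels,
                                       normalize="true", cmap=plt.cm.Blues):
    """Hypothetical helper: colors follow normalized values, text shows raw counts."""
    # Raw counts: these are the numbers that end up printed in the cells.
    cm_counts = confusion_matrix(y_true, y_pred, labels=labels)
    # Normalized matrix: used only to decide how dark each cell is.
    cm_norm = confusion_matrix(y_true, y_pred, labels=labels, normalize=normalize)

    disp = ConfusionMatrixDisplay(confusion_matrix=cm_norm,
                                  display_labels=display_labels)
    disp.plot(cmap=cmap, values_format=".2f")

    # disp.text_ holds one matplotlib Text object per cell; replace its content.
    n_rows, n_cols = cm_counts.shape
    for i in range(n_rows):
        for j in range(n_cols):
            disp.text_[i, j].set_text(str(int(cm_counts[i, j])))
    return disp, cm_counts, cm_norm


# Made-up toy data, only to exercise the helper (not my real lists).
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 3]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 2, 3]
target_names = ['Empty', 'Human', 'Dog', 'Dog&Human']

disp, cm_counts, cm_norm = plot_counts_with_normalized_colors(
    y_true, y_pred, labels=[0, 1, 2, 3], display_labels=target_names)
```

Calling `plt.show()` afterwards displays the figure. With this sketch the colorbar still reflects the normalized scale (0 to 1), not the counts, so it may be worth labeling it or hiding it with `colorbar=False` in `disp.plot`.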