I am developing a Flask application. It's a simple application with a single endpoint, index (/), for now. In my project's __init__.py file, before importing anything else, I monkey patch all the libraries with monkey.patch_all().

Here's what my __init__.py looks like:

    # --- imports begin
    from gevent import monkey
    monkey.patch_all()

    from flask.json import JSONEncoder
    import os
    import logging
    from flask import Flask
    from mixpanel import Mixpanel
    from logging.handlers import TimedRotatingFileHandler
    from flask_log_request_id import RequestID, RequestIDLogFilter
    from foree.helpers.py23_compatibility import TEXT_ENCODING
    # --- imports end

    # application objects
    app = Flask(__name__)

    ....

The index route has a single DB call to a collection that is indexed with the key phone_number and my query looks like this: {phone_number: 'XYZ'}. The index route looks like this:

    @app.route('/')
    def index():
        _ = user_by_phone_number('XYZ')
        import socket
        return jsonify(message='Hello!',
                       min_version=MIN_VERSION,
                       max_version=MAX_VERSION,
                       app_version=SERVER_VERSION,
                       config_checksum=CONF_CHECKSUM,
                       environment=DEPLOYMENT,
                       host=socket.gethostname(),
                       time=str(datetime.datetime.now()))

And the function user_by_phone_number looks like this:

    def user_by_phone_number(phone_number):
        db['fpi_user'].find_one({'phone_number': phone_number})
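To time this call independently of any APM tool, a small wrapper along these lines could be used. This is only a sketch, assuming Python 3: `timed` and `fake_find_one` are names made up for illustration, and the stand-in just sleeps instead of talking to MongoDB.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, samples):
    """Record the wall-clock time (in ms) of the wrapped block under samples[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        samples.setdefault(label, []).append(elapsed_ms)


def fake_find_one(query):
    # Hypothetical stand-in for db['fpi_user'].find_one(query); it only sleeps.
    time.sleep(0.002)
    return {'phone_number': query['phone_number']}


samples = {}
for _ in range(5):
    with timed('find_one', samples):
        fake_find_one({'phone_number': 'XYZ'})

latencies = sorted(samples['find_one'])
print('min %.2f ms, max %.2f ms' % (latencies[0], latencies[-1]))
```

In the real route, the `with timed(...)` block would go around the `user_by_phone_number('XYZ')` call, so the numbers can be compared against what the database itself reports.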

I have a 4-core CPU with 8 GB of RAM. The server is spawned with:

    gunicorn --bind 127.0.0.1:18000 --access-logfile gunicorn-access.log --access-logformat '%(h)s %(l)s %(u)s %(t)s "%(r)s" %(s)s Time: %(T)s' -w 9 --worker-class gevent --worker-connections 1000 foree:app --log-level debug
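For readability, the same options can be written as a Gunicorn config file. The file name `gunicorn.conf.py` is an assumption on my part; it would be loaded with `gunicorn -c gunicorn.conf.py foree:app`. The values are copied from the command above.

```python
# gunicorn.conf.py (assumed file name), equivalent to the command-line flags
bind = '127.0.0.1:18000'
accesslog = 'gunicorn-access.log'
access_log_format = '%(h)s %(l)s %(u)s %(t)s "%(r)s" %(s)s Time: %(T)s'
workers = 9                 # matches the common 2 x cores + 1 rule on 4 cores
worker_class = 'gevent'
worker_connections = 1000   # per worker, so up to 9 x 1000 = 9000 in total
loglevel = 'debug'
```

With 9 workers and 1000 connections each, the server should accept far more simultaneous connections than the 2500 users in the test, so the connection limit itself does not look like the cap.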

I then generate load on this server using Locust. Locust runs in distributed mode with 3 slaves and a master (2500 concurrent users with a hatch rate of 200) via:

    locust --no-web -c 2500 -r 200 -H https://myhost.com/ -t 2m --master --expect-slaves=3

The test results in very low TPS. Watching mongostat, I see only about 1K ops/sec on my secondary (replica set).
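To put that number in perspective, here is a rough back-of-the-envelope calculation using Little's law (concurrency = throughput × time per request). It assumes every request performs exactly one find_one, as in the index route, and that all 2500 users are active; the resulting figure includes any wait time Locust inserts between requests, so it is an upper bound on response time rather than a measurement.

```python
def implied_cycle_seconds(concurrent_users, throughput_per_sec):
    """Little's law: concurrency = throughput * time, so time = concurrency / throughput."""
    return concurrent_users / float(throughput_per_sec)


users = 2500         # locust -c 2500
observed_ops = 1000  # ~1K ops/sec seen in mongostat

cycle = implied_cycle_seconds(users, observed_ops)
print('implied time per request cycle: %.1f s' % cycle)
```

Under these assumptions each user completes roughly one request every 2.5 seconds.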

On the other hand, I tested MongoDB with YCSB and, surprisingly, the results were fairly good: the average ops/sec on the secondary goes up to around 21K. This means MongoDB is not being fully utilized when the load comes from my app server. And yes, my database and app server are on the same network with a ping of less than 1 ms, so network latency cannot be the issue here.

Here's how I test with YCSB:

    ./bin/ycsb run mongodb -s -P workloads/workloadc -threads 20 -p mongodb.url="mongodb://username:pass@<list-of-dbs>/DB?replicaSet=rs0&retryWrites=true&readPreference=secondary&localThresholdMS=30&maxPoolSize=5000" -p operationcount=10000000
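Putting the two tests side by side gives a rough idea of the gap. This sketch assumes the 20 YCSB threads issue reads back to back (no `-target` throttle is set in the command) and uses the approximate figures quoted above.

```python
ycsb_threads = 20    # -threads 20
ycsb_ops = 21000.0   # ~21K ops/sec on the secondary with YCSB
app_ops = 1000.0     # ~1K ops/sec on the secondary under the Locust test

# Little's law: average time per operation as seen by each YCSB thread.
ycsb_latency_ms = ycsb_threads / ycsb_ops * 1000.0

# Fraction of the YCSB throughput that the app server test reaches.
utilization = app_ops / ycsb_ops

print('YCSB time per op: %.2f ms' % ycsb_latency_ms)
print('app reaches %.1f%% of YCSB throughput' % (utilization * 100))
```

So YCSB sees roughly 1 ms per read with only 20 threads, while the app server drives the same secondary at under 5% of that throughput.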

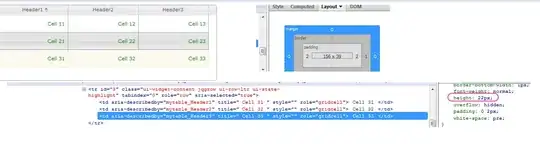

I also tried adding New Relic to my code to find out what's causing the issue, and it also points at PyMongo's find_one function that I am calling in the index route. Here's the snap:

I have been trying to figure this out for around 3-4 days without any luck. Any leads on what could possibly be wrong here would be much appreciated. Thanks.