I'm training a fairly basic neural network on the Fashion-MNIST dataset. I'm using my own code, the details of which are not important here. I use a simplified algorithm similar to Adam and a quadratic loss, (train_value - real_value)**2, for both training and error calculation. I apply basic backpropagation to each weight, and I analyse 1/5 of the network weights for each training image. The network has a single hidden layer of 128 units, as in the basic TensorFlow example for beginners, plus the input and output layers (the output layer uses softmax, and the input is normalized to 0-1).
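To make the setup concrete, the quadratic loss on a softmax output and its gradient with respect to the pre-softmax values can be sketched as below. This is an illustration, not the actual training code: the function names are hypothetical, and it assumes the squared error is summed over the output classes and that the target is a one-hot vector.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def squared_error(probs, target):
    # Quadratic loss summed over output classes: sum_k (p_k - y_k)**2
    return sum((p - t) ** 2 for p, t in zip(probs, target))

def squared_error_grad_logits(logits, target):
    # Chain rule through softmax:
    #   dL/dz_j = p_j * (d_j - sum_k d_k * p_k),  where d_k = 2 * (p_k - y_k)
    probs = softmax(logits)
    diff = [2.0 * (p - t) for p, t in zip(probs, target)]
    dot = sum(d * p for d, p in zip(diff, probs))
    return [p * (d - dot) for p, d in zip(probs, diff)]

if __name__ == "__main__":
    logits = [0.5, -1.0, 2.0]   # made-up values for illustration
    target = [0.0, 0.0, 1.0]    # one-hot label
    print(squared_error(softmax(logits), target))
    print(squared_error_grad_logits(logits, target))
```

Note that every component of this gradient is scaled by the softmax probability `p_j`, so it shrinks whenever the softmax output saturates.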

I'm not an expert at all, and I've only been able to train my network up to 77% accuracy on the test set.

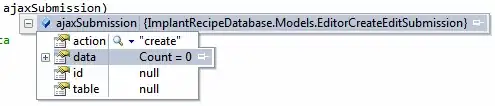

As the image shows, the gradients of the weights for most of my neurons converge to zero after a few epochs. However, there are a few notable exceptions that refuse to converge (the vertical lines in the first image separate the weights by neuron).
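The same observation can be checked numerically instead of by eye. The sketch below assumes the weight gradients are available as one row per neuron. The function names and the `factor` threshold are hypothetical choices for illustration, not part of the original code.

```python
import math

def per_neuron_grad_norms(grad_rows):
    # L2 norm of the weight gradients belonging to each neuron (one row per neuron).
    return [math.sqrt(sum(g * g for g in row)) for row in grad_rows]

def find_outlier_neurons(grad_rows, factor=10.0):
    # Flag neurons whose gradient norm exceeds `factor` times the (upper) median norm.
    norms = per_neuron_grad_norms(grad_rows)
    ordered = sorted(norms)
    median = ordered[len(ordered) // 2]
    threshold = factor * max(median, 1e-12)  # guard against a zero median
    return [i for i, n in enumerate(norms) if n > threshold]

if __name__ == "__main__":
    # Made-up gradients: neuron 1 has much larger gradients than the others.
    grads = [[0.001, -0.002], [0.5, 0.4], [0.0, 0.001]]
    print(find_outlier_neurons(grads))
```

Tracking these indices across epochs would show whether it is always the same neurons that fail to converge.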

Could you recommend some general techniques for training rogue neurons without affecting the others?