I want to fit my data with two linear functions (a broken power law) joined at a single break point that is supplied by the user. Currently I'm using the curve_fit function from the scipy.optimize module. Here are my datasets: frequencies, binned data, errors.

Here is my code:

    import numpy as np
    from scipy.optimize import curve_fit
    import matplotlib.pyplot as plt

    freqs = np.loadtxt('binf11.dat')
    binys = np.loadtxt('binp11.dat')
    errs = np.loadtxt('bine11.dat')

    def brkPowLaw(xArray, breakp, slopeA, offsetA, slopeB):
        returnArray = []
        for x in xArray:
            if x <= breakp:
                returnArray.append(slopeA * x + offsetA)
            elif x > breakp:
                returnArray.append(slopeB * x + offsetA)
        return returnArray

    # define initial guesses, breakpoint=-3.2
    a_fit, cov = curve_fit(brkPowLaw, freqs, binys, sigma=errs, p0=(-3.2, -2.0, -2.0, -2.0))

    modelPredictions = brkPowLaw(freqs, *a_fit)

    plt.errorbar(freqs, binys, yerr=errs, fmt='kp', fillstyle='none', elinewidth=1)
    plt.xlim(-5, -2)
    plt.plot(freqs, modelPredictions, 'r')

The offset of the second linear function is set to be equal to the offset of the first one.

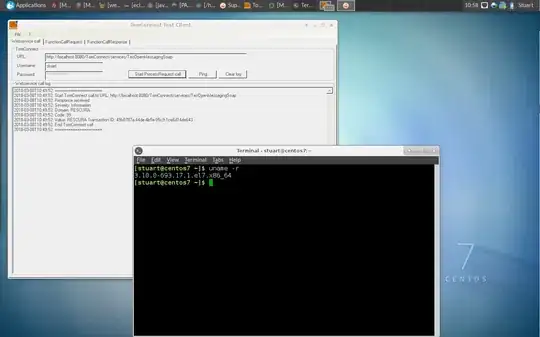

It looks like this works but I get this fit:

I thought the condition in my brkPowLaw function would suffice, but it does not. I want the first linear function to fit the data up to the chosen break point, and a second linear function to fit the data from that break point onward, without the hump shown in the plot. As it stands, the fit looks as if it has two break points and three linear segments, which is neither what I expected nor what I wanted.

In other words, the second line should start exactly at the point where the first line ends.
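To make that requirement concrete, here is a minimal sketch of the continuity condition I have in mind. It is not a confirmed fix for my script. The function name `brk_pow_law_continuous` and the synthetic arrays `x_demo`, `y_demo`, `err_demo` are made up for illustration and are not my real data. The idea is that the second line is anchored to the value the first line reaches at `breakp`, instead of reusing `offsetA`:

```python
import numpy as np
from scipy.optimize import curve_fit


def brk_pow_law_continuous(x, breakp, slopeA, offsetA, slopeB):
    """Two straight lines forced to meet at x = breakp (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    # Value of the first line at the break point; the second line starts here.
    y_break = slopeA * breakp + offsetA
    return np.where(
        x <= breakp,
        slopeA * x + offsetA,              # first segment
        slopeB * (x - breakp) + y_break,   # second segment, continuous at breakp
    )


# Synthetic stand-in data (an assumption, NOT the contents of my .dat files).
rng = np.random.default_rng(0)
x_demo = np.linspace(-5.0, -2.0, 40)
err_demo = np.full_like(x_demo, 0.05)
y_demo = brk_pow_law_continuous(x_demo, -3.2, -1.0, -4.0, -3.0)
y_demo = y_demo + rng.normal(0.0, 0.05, x_demo.size)

# Same call pattern as in my script; the starting values are illustrative guesses.
popt, pcov = curve_fit(
    brk_pow_law_continuous, x_demo, y_demo,
    sigma=err_demo, p0=(-3.0, -1.5, -4.5, -2.5),
)
print(popt)
```

Written this way, the function keeps the same four parameters as my brkPowLaw, so it should drop into the existing curve_fit call unchanged. Whether the fit behaves well on my real data is something I have not verified.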

I have tried using the numpy.piecewise function with no usable result, and I have looked into some topics like this or this, but I did not manage to make my script work.

Thank you for your time.