We're using a fairly vanilla Confluent Cloud instance for internal testing. Because it is cloud-based, Confluent shows usage statistics as the month goes along. Unfortunately, the statistics aren't detailed: just bytes into the instance, bytes out of the instance, and storage. We've transferred in about 2 MB of data, which is being stored there, but our transfers out are excessive, at about 4 GB per day. We don't have many consumers, and they're all up to date; nothing strange seems to be going on, such as a consumer repeatedly reading from offset 0. My question is: is this typical behavior? Is it due to polling, or something else?

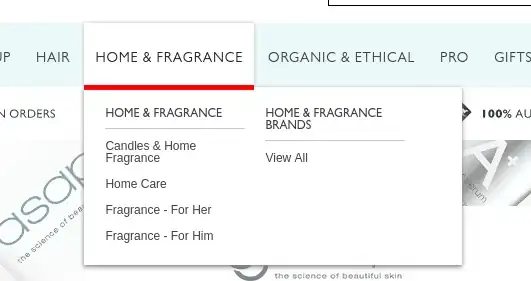

Thanks @riferrei for your comment. I am sorry for the confusion. To try and help clarify, please take a look at this image:

This is all I get. My interpretation is that during March we stored roughly 390 KB of data on average (390 KB = 1024 * 1024 * 0.2766 GB-Hours / 31 days / 24 hours). We transferred in 2 MB (0.0021 GB), and according to the bill, we transferred out 138 GB of data, or approximately 4 GB per day. I'm trying to understand how that could possibly happen.
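To make that arithmetic easy to check, here is a minimal Python sketch that uses only the figures from the bill. It assumes 1 GB = 1024 * 1024 KB and a 31-day month; the variable names are just for illustration and are not anything Confluent provides.

```python
# Figures copied from the March bill described above.
STORAGE_GB_HOURS = 0.2766   # billed storage, in GB-hours
INGRESS_GB = 0.0021         # data transferred in
EGRESS_GB = 138             # data transferred out
DAYS_IN_MARCH = 31

KB_PER_GB = 1024 * 1024     # assumption: binary units
hours = DAYS_IN_MARCH * 24

# Average amount of data stored over the month.
avg_stored_kb = STORAGE_GB_HOURS / hours * KB_PER_GB

# Average outbound traffic per day and per second.
egress_gb_per_day = EGRESS_GB / DAYS_IN_MARCH
egress_kb_per_sec = EGRESS_GB * KB_PER_GB / (hours * 3600)

# How many times larger the outbound traffic is than the inbound traffic.
egress_to_ingress = EGRESS_GB / INGRESS_GB

print(f"average stored: {avg_stored_kb:.0f} KB")
print(f"egress per day: {egress_gb_per_day:.2f} GB")
print(f"egress per second: {egress_kb_per_sec:.1f} KB")
print(f"egress / ingress: {egress_to_ingress:.0f}x")
```

Put another way, the bill implies a steady outbound stream of tens of KB per second, around the clock, from a cluster holding well under 1 MB of data.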