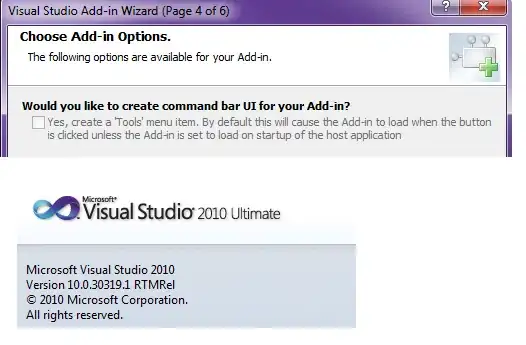

One of my ECS fargate tasks is stopping and restarting in what seems to be a somewhat random fashion. I started the task in Dec 2019 and it has stopped/restarted three times since then. I've found that the task stopped and restarted from its 'Events' log (image below) but there's no info provided as to why it stopped..

So what I've tried to do to date to debug this is

- Checked the 'Stopped' tasks inside the cluster for info as to why it might have stopped. No luck here as it appears 'Stopped' tasks are only held there for a short period of time.

- Checked CloudWatch logs for any log messages that could be pertinent to this issue, nothing found

- Checked CloudTrail event logs for any event pertinent to this issue, nothing found

- Confirmed the memory and CPU utilisation is sufficient for the task, in fact the task never reaches 30% of it's limits

- Read multiple AWS threads about similar issues where solutions mainly seem to be connected to using an ELB which I'm not..

Any have any further debugging device or ideas what might be going on here?