Is there a clustering algorithm or method that lets you set the minimum and maximum number of data points each cluster may contain? Thank you!


2 Answers

0

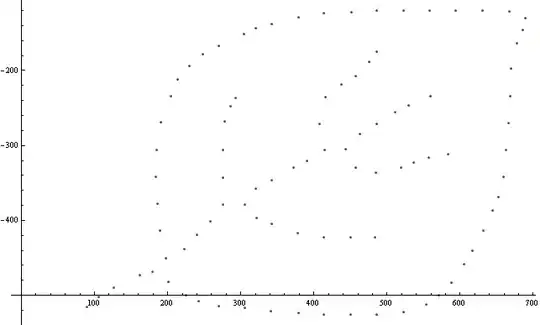

I have not come across an algorithm that enforces a minimum cluster size, other than perhaps 1 or 2. You can, however, estimate an 'optimal' number of clusters using the elbow-curve method. Here is an example using stock market data.

    import math

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    from pandas_datareader import data as wb
    from scipy.cluster.vq import kmeans, vq
    from sklearn.cluster import KMeans

    np.random.seed(777)

    start = '2020-1-01'
    end = '2020-3-27'

    tickers = ['MMM', 'ABT', 'ABBV', 'ABMD', 'ACN', 'ATVI', 'ADBE', 'AMD',
               'AAP', 'AES', 'AMG', 'AFL', 'A', 'APD', 'AKAM', 'ALK',
               'ALB', 'ARE', 'ALXN', 'ALGN', 'ALLE', 'AGN', 'ADS', 'ALL',
               'GOOGL', 'GOOG', 'MO', 'AMZN', 'AEP', 'AXP', 'AIG', 'AMT',
               'AWK', 'AMP', 'ABC', 'AME', 'AMGN', 'APH', 'ADI', 'ANSS',
               'ANTM', 'AON', 'AOS', 'APA', 'AIV', 'AAPL', 'AMAT', 'APTV',
               'ADM', 'ARNC', 'ADSK', 'ADP', 'AZO', 'AVB', 'AVY', 'ZBH',
               'ZION', 'ZTS']

    thelen = len(tickers)

    # Download adjusted close prices for each ticker
    price_data = []
    for ticker in tickers:
        prices = wb.DataReader(ticker, start=start, end=end, data_source='yahoo')[['Adj Close']]
        price_data.append(prices.assign(ticker=ticker)[['ticker', 'Adj Close']])

    df = pd.concat(price_data)
    df.dtypes
    df.head()
    df.shape

    pd.set_option('display.max_columns', 500)

    df = df.reset_index()
    df = df.set_index('Date')
    table = df.pivot(columns='ticker')

    # By specifying col[1] in the list comprehension below,
    # you select the stock names under the multi-level column
    table.columns = [col[1] for col in table.columns]
    table.head()

    # Calculate average annual percentage returns and volatilities over a theoretical one-year period
    returns = table.pct_change().mean() * 252
    returns = pd.DataFrame(returns)
    returns.columns = ['Returns']
    returns['Volatility'] = table.pct_change().std() * math.sqrt(252)

    # Format the data as a NumPy array to feed into the K-Means algorithm
    data = np.asarray([np.asarray(returns['Returns']), np.asarray(returns['Volatility'])]).T
    X = data

    # Fit scikit-learn's KMeans for k = 2..19 and record the inertia of each fit
    distortions = []
    for k in range(2, 20):
        k_means = KMeans(n_clusters=k)
        k_means.fit(X)
        distortions.append(k_means.inertia_)

    fig = plt.figure(figsize=(15, 5))
    plt.plot(range(2, 20), distortions)
    plt.grid(True)
    plt.title('Elbow curve')

[![enter image description here][1]][1]

    import matplotlib.pyplot as plt
    from sklearn.cluster import KMeans

    # Compute K-Means with K = 15 (15 clusters) using SciPy
    centroids, _ = kmeans(data, 15)

    # Assign each sample to a cluster
    idx, _ = vq(data, centroids)

    # Fit the same number of clusters with scikit-learn
    # (named km so it does not shadow SciPy's kmeans function)
    km = KMeans(n_clusters=15)
    km.fit(data)
    y_kmeans = km.predict(data)

    # Color each point by its cluster label
    viridis = plt.get_cmap('viridis', 15)
    plt.scatter(data[:, 0], data[:, 1], c=y_kmeans, cmap=viridis, s=50)

    # Mark the cluster centers
    centers = km.cluster_centers_
    plt.scatter(centers[:, 0], centers[:, 1], c='red', s=200, alpha=0.5)
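Since the question is about cluster sizes, it may help to check how many points land in each cluster after a fit like the one above. Plain K-Means does not constrain sizes, so this is only a diagnostic sketch. The synthetic data `X_demo` and the bounds `min_size` and `max_size` are hypothetical stand-ins, not values from the stock example.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic stand-in for the (Returns, Volatility) array used above
X_demo, _ = make_blobs(n_samples=200, centers=4, cluster_std=1.0, random_state=0)

# Hypothetical size bounds, chosen only for illustration
min_size, max_size = 20, 80

km_demo = KMeans(n_clusters=4, n_init=10, random_state=0).fit(X_demo)

# Count how many points fall in each cluster
sizes = np.bincount(km_demo.labels_, minlength=km_demo.n_clusters)

# Indices of clusters that fall outside the required range
too_small = np.flatnonzero(sizes < min_size)
too_large = np.flatnonzero(sizes > max_size)

print("cluster sizes:", sizes)
print("below minimum:", too_small)
print("above maximum:", too_large)
```

If any cluster violates the bounds, you could try a different `n_clusters` and repeat the check, though nothing here guarantees that a satisfying value exists.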

You can do a Google search and find all kinds of info on this concept. Here is one link to get you started.

ASH


Thank you for your help :) Yeah, I also looked into the elbow method, but because of the problem I am working on, I need each cluster to contain a certain number of data points. I tried searching Google for such algorithms but had no luck – Wataru Tamura Mar 31 '20 at 22:34

0

As an aside, you can experiment with Affinity Propagation, which chooses the number of clusters for you instead of requiring it up front.

    from itertools import cycle

    import matplotlib.pyplot as plt
    from sklearn import metrics
    from sklearn.cluster import AffinityPropagation
    from sklearn.datasets import make_blobs

    # Generate sample data
    centers = [[1, 1], [-1, -1], [1, -1]]
    X, labels_true = make_blobs(n_samples=300, centers=centers, cluster_std=0.5,
                                random_state=0)

    # Compute Affinity Propagation
    af = AffinityPropagation(preference=-50).fit(X)
    cluster_centers_indices = af.cluster_centers_indices_
    labels = af.labels_
    n_clusters_ = len(cluster_centers_indices)

    print('Estimated number of clusters: %d' % n_clusters_)
    print("Homogeneity: %0.3f" % metrics.homogeneity_score(labels_true, labels))
    print("Completeness: %0.3f" % metrics.completeness_score(labels_true, labels))
    print("V-measure: %0.3f" % metrics.v_measure_score(labels_true, labels))
    print("Adjusted Rand Index: %0.3f"
          % metrics.adjusted_rand_score(labels_true, labels))
    print("Adjusted Mutual Information: %0.3f"
          % metrics.adjusted_mutual_info_score(labels_true, labels))
    print("Silhouette Coefficient: %0.3f"
          % metrics.silhouette_score(X, labels, metric='sqeuclidean'))

    # Plot result
    plt.close('all')
    plt.figure(1)
    plt.clf()

    colors = cycle('bgrcmykbgrcmykbgrcmykbgrcmyk')
    for k, col in zip(range(n_clusters_), colors):
        class_members = labels == k
        cluster_center = X[cluster_centers_indices[k]]
        plt.plot(X[class_members, 0], X[class_members, 1], col + '.')
        plt.plot(cluster_center[0], cluster_center[1], 'o', markerfacecolor=col,
                 markeredgecolor='k', markersize=14)
        for x in X[class_members]:
            plt.plot([cluster_center[0], x[0]], [cluster_center[1], x[1]], col)

    plt.title('Estimated number of clusters: %d' % n_clusters_)
    plt.show()
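The number of clusters Affinity Propagation reports is not fixed by the data alone; it depends on the `preference` value passed above. Here is a small sketch for seeing that effect on the same kind of blob data. The second preference value (-10) and the name `X_demo` are arbitrary choices for illustration, and a run that fails to converge would report zero exemplars.

```python
from sklearn.cluster import AffinityPropagation
from sklearn.datasets import make_blobs

# Same style of synthetic data as in the example above
X_demo, _ = make_blobs(n_samples=300, centers=[[1, 1], [-1, -1], [1, -1]],
                       cluster_std=0.5, random_state=0)

# Fit once per preference value and record how many exemplars were chosen
cluster_counts = {}
for preference in (-50, -10):  # illustrative values only
    ap = AffinityPropagation(preference=preference, random_state=0).fit(X_demo)
    cluster_counts[preference] = len(ap.cluster_centers_indices_)
    print("preference=%d -> %d clusters" % (preference, cluster_counts[preference]))
```

In general a higher (less negative) preference tends to produce more clusters, so this parameter gives indirect control over cluster sizes but no hard minimum or maximum.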

Or, consider using Mean Shift.

    from itertools import cycle

    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.cluster import MeanShift, estimate_bandwidth
    from sklearn.datasets import make_blobs

    # Generate sample data
    centers = [[1, 1], [-1, -1], [1, -1], [1, -1], [1, -1]]
    X, _ = make_blobs(n_samples=10000, centers=centers, cluster_std=0.2)

    # Compute clustering with MeanShift
    # The bandwidth can be estimated automatically with estimate_bandwidth
    bandwidth = estimate_bandwidth(X, quantile=0.6, n_samples=5000)

    ms = MeanShift(bandwidth=bandwidth, bin_seeding=True)
    ms.fit(X)
    labels = ms.labels_
    cluster_centers = ms.cluster_centers_

    labels_unique = np.unique(labels)
    n_clusters_ = len(labels_unique)

    print("number of estimated clusters : %d" % n_clusters_)

    # Plot result
    plt.figure(1)
    plt.clf()

    colors = cycle('bgrcmykbgrcmykbgrcmykbgrcmyk')
    for k, col in zip(range(n_clusters_), colors):
        my_members = labels == k
        cluster_center = cluster_centers[k]
        plt.plot(X[my_members, 0], X[my_members, 1], col + '.')
        plt.plot(cluster_center[0], cluster_center[1], 'o', markerfacecolor=col,
                 markeredgecolor='k', markersize=14)

    plt.title('Estimated number of clusters: %d' % n_clusters_)
    plt.show()
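With Mean Shift, the cluster count follows from the bandwidth, which in the example above comes from the `quantile` argument of `estimate_bandwidth`. The sketch below compares two quantile values on a small synthetic set. The values 0.1 and 0.5 and the name `X_demo` are assumptions made for illustration.

```python
from sklearn.cluster import MeanShift, estimate_bandwidth
from sklearn.datasets import make_blobs

# Small synthetic data set with three well-separated blobs
X_demo, _ = make_blobs(n_samples=300, centers=[[1, 1], [-1, -1], [1, -1]],
                       cluster_std=0.3, random_state=0)

# For each quantile, estimate a bandwidth and count the resulting clusters
results = {}
for quantile in (0.1, 0.5):  # illustrative values only
    bandwidth = estimate_bandwidth(X_demo, quantile=quantile)
    ms_demo = MeanShift(bandwidth=bandwidth, bin_seeding=True).fit(X_demo)
    results[quantile] = (bandwidth, len(ms_demo.cluster_centers_))
    print("quantile=%.1f -> bandwidth=%.3f, clusters=%d"
          % (quantile, bandwidth, results[quantile][1]))
```

A larger quantile gives a larger bandwidth, which usually means fewer and bigger clusters. As with the other methods here, this influences cluster sizes without enforcing explicit bounds.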

I can't think of anything else that could be relevant. Maybe someone else will jump in here and offer an alternative idea.

ASH
