I have a large dataframe containing location data from various ships around the world. imoNo is the ship identifier. Below is a sample of the dataframe:

and here is the code to reproduce it:

    import pandas as pd

    # Initialise the data as a dict of lists.
    ships_data = {
        'imoNo': [9321483, 9321483, 9321483, 9321483, 9321483],
        'Timestamp': ['2020-02-22 00:00:00', '2020-02-22 00:10:00', '2020-02-22 00:20:00', '2020-02-22 00:30:00', '2020-02-22 00:40:00'],
        'Position Longitude': [127.814598, 127.805634, 127.805519, 127.808548, 127.812370],
        'Position Latitude': [33.800232, 33.801899, 33.798885, 33.795799, 33.792931],
    }

    # Create the DataFrame.
    ships_df = pd.DataFrame(ships_data)

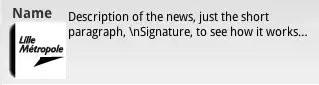

What I need to do is add a column, sea_name, at the end of the dataframe that identifies the sea in which the vessel is sailing. Something like the one below:

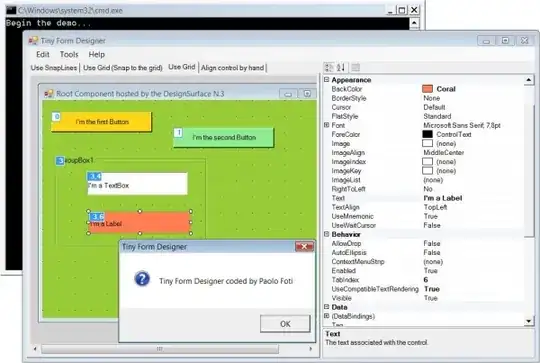

To get there, I've found a dataset in .shp format at this link (IHO Sea Areas v3), which looks like this:

My approach is to go through each (long, lat) pair in the ships dataset, check which polygon contains that pair, and return the name of the sea for the matching polygon. This is my code:

    ### Load libraries
    import numpy as np
    import pandas as pd
    import geopandas as gp
    import shapely.speedups
    from shapely.geometry import Point, Polygon

    shapely.speedups.enable()

    ### Check and map lon lat pair with sea name
    # iho_df is the GeoDataFrame read from the IHO Sea Areas shapefile.
    def get_seaname(long, lat):
        pnt = Point(long, lat)
        # Test the point against every sea polygon until one contains it.
        for i, j in enumerate(iho_df.geometry):
            if pnt.within(j):
                return iho_df.NAME.iloc[i]
        # Falls through and returns None when no polygon contains the point.

    ### Apply the above function to the dataframe
    ships_df['sea_name'] = ships_df.apply(
        lambda x: get_seaname(x['Position Longitude'], x['Position Latitude']),
        axis=1)

However, this is a very time-consuming process. I tested it locally on my Mac on the first 1000 rows of ships_df and it took around 1 minute to run. If the runtime grows linearly, I will need around 14 days for the whole dataset :-D.
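To make the extrapolation explicit, here is the back-of-the-envelope arithmetic, assuming the runtime really is linear in the number of rows. The row count is only what the two figures above imply (about 1 minute per 1000 rows, about 14 days in total); it is not a measured value.

```python
# Figures quoted above; both are approximate.
rows_per_minute = 1000   # first 1000 rows took around 1 minute
estimated_days = 14      # extrapolated runtime for the whole dataset

# Convert the estimated runtime to minutes, then to rows at the measured rate.
minutes_total = estimated_days * 24 * 60
implied_rows = minutes_total * rows_per_minute

print(f"{minutes_total:,} minutes -> roughly {implied_rows:,} rows")
```

So the full dataset is on the order of 20 million rows, each of which is currently tested against the sea polygons one at a time.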

Any ideas for optimizing the function above would be appreciated.
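One direction that might avoid the Python-level loop is a spatial join, which lets geopandas match all points against all polygons in one call using a spatial index. The following is only a minimal sketch under assumptions: `toy_seas` is a hypothetical two-rectangle stand-in for `iho_df`, `add_sea_name` is an illustrative helper name, and the `predicate` keyword of `gp.sjoin` is assumed to be available (older geopandas releases call it `op`). It has not been benchmarked on the real data.

```python
import geopandas as gp
import pandas as pd
from shapely.geometry import box

# Hypothetical stand-in for iho_df: two rectangular "seas" with made-up names.
toy_seas = gp.GeoDataFrame(
    {"NAME": ["Toy Sea West", "Toy Sea East"]},
    geometry=[box(120, 30, 127.81, 40), box(127.81, 30, 135, 40)],
    crs="EPSG:4326",
)

# A few rows from the sample dataframe in the question.
ships_df = pd.DataFrame({
    "imoNo": [9321483, 9321483, 9321483],
    "Position Longitude": [127.814598, 127.805634, 127.805519],
    "Position Latitude": [33.800232, 33.801899, 33.798885],
})


def add_sea_name(ships, seas):
    """Return a copy of `ships` with a sea_name column from a spatial join."""
    # Build point geometries from the lon/lat columns.
    # Assumption: the coordinates are in the same CRS as the polygons.
    points = gp.GeoDataFrame(
        ships.copy(),
        geometry=gp.points_from_xy(
            ships["Position Longitude"], ships["Position Latitude"]
        ),
        crs=seas.crs,
    )
    # Left join keeps every ship row; unmatched rows get a missing NAME.
    joined = gp.sjoin(
        points, seas[["NAME", "geometry"]], how="left", predicate="within"
    )
    # A point matching several polygons yields several rows; keep the first.
    joined = joined[~joined.index.duplicated(keep="first")]
    return ships.assign(sea_name=joined["NAME"].reindex(ships.index))


result = add_sea_name(ships_df, toy_seas)
print(result[["Position Longitude", "Position Latitude", "sea_name"]])
```

With the real data, the call would presumably be `add_sea_name(ships_df, iho_df)`. Points that fall inside no polygon end up with a missing sea_name, and if a point matches more than one polygon only the first match is kept.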

Thank you!