I have a Hadoop+Hive+Tez setup built from scratch (meaning I deployed it component by component). Hive is configured to use Tez as its execution engine.

In its current state, Hive can access tables on HDFS, but it cannot access tables stored on MinIO (using the s3a filesystem implementation).

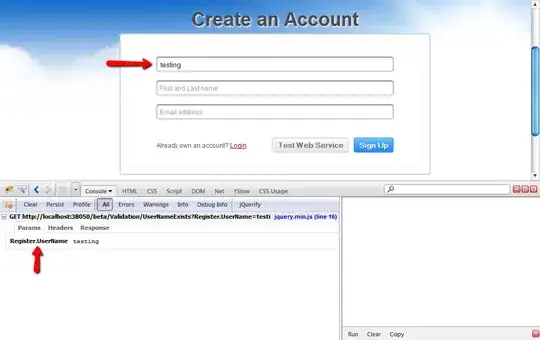

As the screenshot shows, when executing `SELECT COUNT(*) FROM s3_table`:

- the Tez execution gets stuck forever;
- `Map 1` is always in the `INITIALIZING` state;
- `Map 1` always has a total count of `-1` and a pending count of `-1` (why `-1`?).

Things already checked:

- Hadoop can access MinIO/S3 without problems. For example, `hdfs dfs -ls s3a://bucketname` works well.
- Hive-on-Tez can compute against tables on HDFS, with mappers and reducers generated successfully and quickly.
- Hive-on-MR can compute against tables on MinIO/S3 without problems.
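A minimal sketch for reproducing the MR-vs-Tez comparison on the MinIO table, assuming the `hive` CLI is on `PATH` and GNU `timeout` is available. `HIVE_BIN`, `TABLE` and `TIMEOUT_SECS` are hypothetical variable names, and the 300-second cap is arbitrary; it only exists so that the stuck Tez run returns instead of hanging the shell:

```shell
#!/usr/bin/env bash
# Hypothetical reproduction helper (not part of the original setup):
# run the same COUNT(*) on the MinIO-backed table under MR and under Tez.
set -u

HIVE_BIN="${HIVE_BIN:-hive}"          # assumption: Hive CLI is on PATH
TABLE="${TABLE:-s3_table}"            # table name from the question
TIMEOUT_SECS="${TIMEOUT_SECS:-300}"   # arbitrary cap so a stuck DAG returns

# Print the HiveQL that selects the engine and counts the table rows.
build_query() {
  printf 'SET hive.execution.engine=%s; SELECT COUNT(*) FROM %s;' "$1" "$TABLE"
}

# Run the query with one engine; report success, failure, or timeout.
run_with_engine() {
  local engine="$1"
  local rc=0
  echo "== hive.execution.engine=${engine} =="
  timeout "$TIMEOUT_SECS" "$HIVE_BIN" -e "$(build_query "$engine")" || rc=$?
  if [ "$rc" -eq 0 ]; then
    echo "${engine}: finished"
  elif [ "$rc" -eq 124 ]; then
    # GNU timeout exits with 124 when the time limit is reached
    echo "${engine}: timed out after ${TIMEOUT_SECS}s"
  else
    echo "${engine}: failed (exit ${rc})"
  fi
}

for engine in mr tez; do
  run_with_engine "$engine"
done
```

With the behaviour described above, the `mr` run would be expected to finish and the `tez` run to hit the timeout. If Beeline is used instead of the Hive CLI, `HIVE_BIN` would need to point at a wrapper that passes the JDBC URL, since `beeline -e` also requires `-u`.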

What could be the possible causes for this problem?

Version information:

- Hadoop 3.2.1

- Hive 3.1.2

- Tez 0.9.2

- MinIO RELEASE.2020-01-25T02-50-51Z