I have a Flink cluster with state TTL and the RocksDB compaction filter enabled, but the compaction filter does not free expired state from memory.

My Flink pipeline processes about 300 records/s.

My state TTL config:

    @Override
    public void open(Configuration parameters) throws Exception {
        ListStateDescriptor<ObjectNode> descriptor = new ListStateDescriptor<ObjectNode>(
            "my-state",
            TypeInformation.of(new TypeHint<ObjectNode>() {})
        );

        StateTtlConfig ttlConfig = StateTtlConfig
            .newBuilder(Time.seconds(600))
            .cleanupInRocksdbCompactFilter(2)
            .build();
        descriptor.enableTimeToLive(ttlConfig);

        myState = getRuntimeContext().getListState(descriptor);
    }
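To make the expectation behind this config concrete, here is a minimal plain-Java sketch of the cleanup behavior as I understand it from the Flink documentation. It is a toy model, not Flink or RocksDB code: `TtlCompactionSketch`, `put`, `get`, `compact`, and `physicalSize` are hypothetical names, and `compact` only stands in for a RocksDB compaction pass. The assumption it illustrates is that an expired entry that is never read again stays physically stored until a compaction processes it.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model of TTL state cleaned up by a compaction filter (hypothetical, not Flink code). */
public class TtlCompactionSketch {

    /** A stored value together with the timestamp of its last write. */
    private static final class Entry {
        final String value;
        final long writtenAt;

        Entry(String value, long writtenAt) {
            this.value = value;
            this.writtenAt = writtenAt;
        }
    }

    private final long ttlMillis;
    private final Map<String, Entry> entries = new HashMap<>();

    public TtlCompactionSketch(long ttlMillis) {
        this.ttlMillis = ttlMillis;
    }

    public void put(String key, String value, long now) {
        entries.put(key, new Entry(value, now));
    }

    /** A read hides an expired entry and removes it, but only for the key being read. */
    public String get(String key, long now) {
        Entry e = entries.get(key);
        if (e == null) {
            return null;
        }
        if (isExpired(e, now)) {
            entries.remove(key);
            return null;
        }
        return e.value;
    }

    /** Stand-in for a compaction pass: drops every expired entry and returns how many were dropped. */
    public int compact(long now) {
        int before = entries.size();
        entries.values().removeIf(e -> isExpired(e, now));
        return before - entries.size();
    }

    /** Number of entries physically stored, expired or not. */
    public int physicalSize() {
        return entries.size();
    }

    private boolean isExpired(Entry e, long now) {
        return e.writtenAt + ttlMillis <= now;
    }

    public static void main(String[] args) {
        TtlCompactionSketch store = new TtlCompactionSketch(600_000L); // 600 s TTL, as in the config above
        store.put("k1", "v1", 0L);
        store.put("k2", "v2", 500_000L);

        long now = 700_000L; // k1 is expired at this point, k2 is not
        System.out.println(store.physicalSize()); // 2: expired k1 is still stored
        System.out.println(store.compact(now));   // 1: the compaction pass drops k1
        System.out.println(store.physicalSize()); // 1: only k2 remains
    }
}
```

In this model the stored size only shrinks once `compact` runs, so if the real backend behaves the same way, how much expired state is retained would depend on how often compactions actually run on the affected data.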

flink-conf.yaml:

    state.backend: rocksdb
    state.backend.rocksdb.ttl.compaction.filter.enabled: true
    state.backend.rocksdb.block.blocksize: 16kb
    state.backend.rocksdb.compaction.level.use-dynamic-size: true
    state.backend.rocksdb.thread.num: 4
    state.checkpoints.dir: file:///opt/flink/checkpoint
    state.backend.rocksdb.timer-service.factory: rocksdb
    state.backend.rocksdb.checkpoint.transfer.thread.num: 2
    state.backend.local-recovery: true
    state.backend.rocksdb.localdir: /opt/flink/rocksdb
    jobmanager.execution.failover-strategy: region
    rest.port: 8081
    state.backend.rocksdb.memory.managed: true
    # state.backend.rocksdb.memory.fixed-per-slot: 20mb
    state.backend.rocksdb.memory.write-buffer-ratio: 0.9
    state.backend.rocksdb.memory.high-prio-pool-ratio: 0.1
    taskmanager.memory.managed.fraction: 0.6
    taskmanager.memory.network.fraction: 0.1
    taskmanager.memory.network.min: 500mb
    taskmanager.memory.network.max: 700mb
    taskmanager.memory.process.size: 5500mb
    taskmanager.memory.task.off-heap.size: 800mb
    metrics.reporter.influxdb.class: org.apache.flink.metrics.influxdb.InfluxdbReporter
    metrics.reporter.influxdb.host: ####
    metrics.reporter.influxdb.port: 8086
    metrics.reporter.influxdb.db: ####
    metrics.reporter.influxdb.username: ####
    metrics.reporter.influxdb.password: ####
    metrics.reporter.influxdb.consistency: ANY
    metrics.reporter.influxdb.connectTimeout: 60000
    metrics.reporter.influxdb.writeTimeout: 60000
    state.backend.rocksdb.metrics.estimate-num-keys: true
    state.backend.rocksdb.metrics.num-running-compactions: true
    state.backend.rocksdb.metrics.background-errors: true
    state.backend.rocksdb.metrics.block-cache-capacity: true
    state.backend.rocksdb.metrics.block-cache-pinned-usage: true
    state.backend.rocksdb.metrics.block-cache-usage: true
    state.backend.rocksdb.metrics.compaction-pending: true

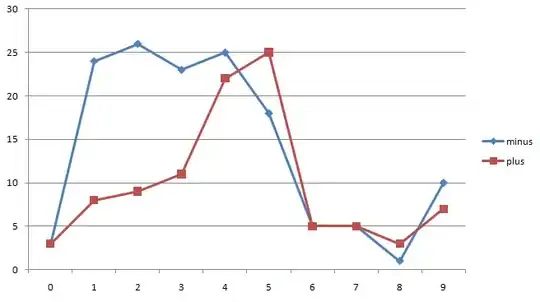

Monitoring with InfluxDB and Grafana: