Short Answer:

You don't need to load everything in memory because this process can be particularly slow for large document collections (worse, the inverted index may not even fit in memory).

Long Answer:

The inverted index is typically stored on the disk and is loaded on a dynamic basis depending on the query... e.g. if the query is "stack overflow", you hit on the individual lists corresponding to the terms 'stack' and 'overflow'...

The file structure for an inverted list is a mix of both fixed length and variable length components. Variable length information is stored as pointers.

Since terms (essentially strings) are of variable length, they are converted to integers (fixed length of 4/8 bytes). The mapping is usually stored in-memory as a hash-table (#terms is usually not that large in the order of 100K which easily fits in memory).

Given a term you have to look it up on the in-mem hashtable and get its id. You then use the id to directly jump (random access with offset) to its location on disk. This location contains a pointer to the list of documents containing that term (this list is variable length), which you have to load in memory.

Once you load the postings for all query terms (usually not a large number), you could aggregate the scores for all documents by walking through these lists (usually these lists are sorted by document ids).

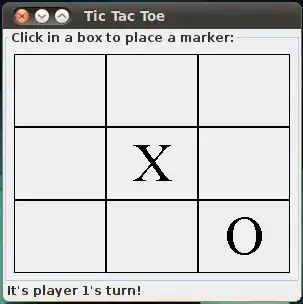

A schematic diagram of the above description: