My background is more on the Twitter side, where all stats are recorded per minute, so you might see 120 requests per minute. Inside Twitter, someone had the bright idea to divide by 60, so most graphs report 2 requests per second instead of 120 requests per minute. (The exceptions were a few teams who realized that dividing by 60 is NOT the true RPS at all, since the rate fluctuates within a minute.) Google seems to be doing the same, EXCEPT the math doesn't add up. At Twitter, we could multiply by 60 and always get a whole number: how many requests occurred in that minute.
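A minimal sketch of the Twitter-style round trip described above, using made-up per-minute counts: dividing a per-minute count by 60 gives an average requests/second, and multiplying back by 60 recovers the whole-number count exactly, because the window is always exactly 60 seconds. The function names are hypothetical, for illustration only.

```python
# Hypothetical per-minute request counts, as a per-minute counter would record them.
per_minute_counts = [120, 90, 7]


def to_per_second(count_per_minute: int) -> float:
    """Average requests/second over a one-minute window (count / 60)."""
    return count_per_minute / 60


def back_to_per_minute(rate_per_second: float) -> int:
    """Recover the per-minute count; round() absorbs float noise like 7.000000000000001."""
    return round(rate_per_second * 60)


rates = [to_per_second(c) for c in per_minute_counts]
recovered = [back_to_per_minute(r) for r in rates]

for count, rate, back in zip(per_minute_counts, rates, recovered):
    print(f"{count} req/min -> {rate:.4f} req/s -> {back} req/min")
```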

In Google Cloud, however, we see 0.02 requests/second, which multiplied by 60 is 1.2 requests per minute. IF the data has minute granularity, they are definitely counting it wrong, or something is off in their math.
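One way to check the arithmetic is to ask which averaging window would make 0.02 requests/second correspond to a whole number of requests. This sketch assumes the chart divides a request count by some window length; the window lengths tried here (60 s, 300 s, 3600 s) are hypothetical, since the post doesn't say what window the chart actually uses.

```python
# ASSUMPTION: the chart reports count / window_seconds for some window length;
# the candidate windows below are hypothetical, not taken from the chart.


def implied_count(rate_per_second: float, window_seconds: float) -> float:
    """Number of requests implied by an average rate over a given window."""
    return rate_per_second * window_seconds


def is_whole(x: float, tol: float = 1e-9) -> bool:
    """True if x is a whole number, allowing for floating-point noise."""
    return abs(x - round(x)) < tol


rate = 0.02  # value read off the chart, in requests/second
for window in (60, 300, 3600):
    count = implied_count(rate, window)
    print(f"{window:>5} s window -> {count:g} requests (whole: {is_whole(count)})")
```

With a 60-second window the implied count is 1.2, which can't be a real request count. A longer window such as 300 seconds would give a whole number, so the result is at least consistent with a rate averaged over more than one minute.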

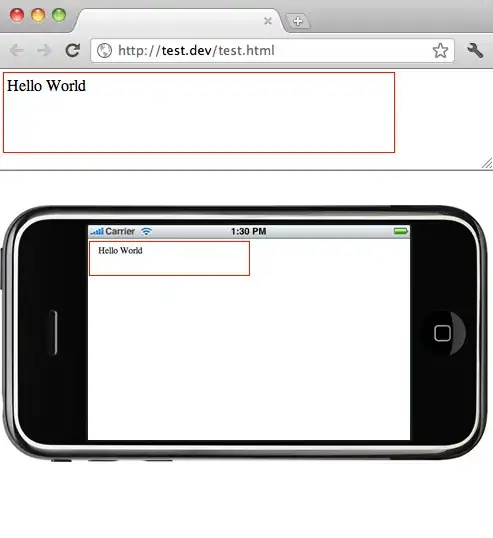

This is from the Cloud Run metrics page, after clicking into the instance itself.

What am I missing here? AND, BETTER yet, can we please report requests per minute? Requests per second is really the average req/second over that minute, and it gets really confusing when we end up discussing how you can have 0.5 requests/second.

I AM assuming this is not requests per second 'at' the minute boundary, because that would be VERY hard to calculate, BUT it would also be a whole number (i.e., 0 requests or 1, not 0.2), and to be honest that would be quite useless.
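To make the distinction concrete, here is a minimal sketch contrasting the two readings above, using hypothetical request timestamps within one minute: the average rate over the whole minute (a fraction) versus the count of requests in the last second before the minute boundary (always a whole number). Both function names are made up for illustration.

```python
# Hypothetical request arrival times, in seconds since the start of the minute.
timestamps = [3.2, 17.8, 41.0, 59.5]


def average_rps(ts: list[float], window_start: float, window_seconds: float = 60.0) -> float:
    """Average requests/second over the window: total count divided by window length."""
    end = window_start + window_seconds
    count = sum(1 for t in ts if window_start <= t < end)
    return count / window_seconds


def requests_in_last_second(ts: list[float], window_start: float, window_seconds: float = 60.0) -> int:
    """Requests in the final second before the window boundary (always a whole number)."""
    end = window_start + window_seconds
    return sum(1 for t in ts if end - 1.0 <= t < end)


print(f"average over the minute: {average_rps(timestamps, 0.0):.4f} req/s")
print(f"requests in the last second: {requests_in_last_second(timestamps, 0.0)}")
```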

EVERY Cloud Run instance creates this chart, so I assume it's the same for everyone, but if I click 'View in Metrics Explorer', it then gives this picture of how 'Google configured it'...