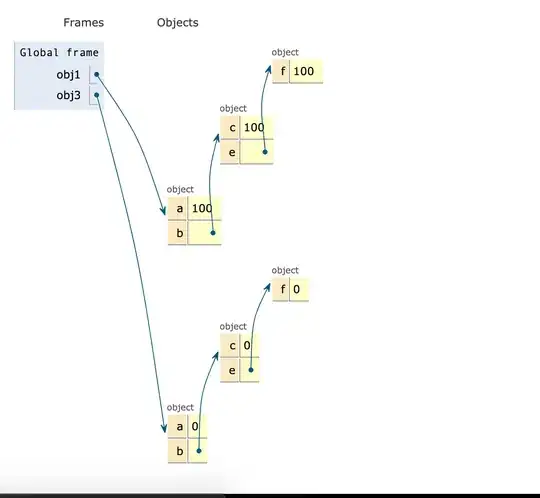

I have a simple Flink job that reads from an ActiveMQ source and writes to two sinks: a database and print. I deploy the job on Kubernetes with 2 TaskManagers, each with 10 task slots (`taskmanager.numberOfTaskSlots: 10`). I configured the parallelism to be more than the total task slots available (i.e., 10 in this case).
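The setup described above corresponds roughly to the following Flink configuration fragment. It is a sketch, not the actual file: `taskmanager.numberOfTaskSlots` is the value quoted above, while setting the parallelism through `parallelism.default` is an assumption (it could equally be set in the job with `env.setParallelism(...)` or with the `-p` flag of `flink run`).

```yaml
# Slots offered by each TaskManager (value taken from the question).
taskmanager.numberOfTaskSlots: 10

# Assumption: job parallelism set as the cluster-wide default.
parallelism.default: 10
```

The number of TaskManagers (2) is not part of this file; on Kubernetes it is determined by the TaskManager deployment.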

In the Flink Dashboard, I see that the job runs on only one of the TaskManagers, while the other TaskManager has no tasks. I verified this by checking where every operator is scheduled; in addition, the Task Manager UI page shows that one of the TaskManagers has all of its slots free. I have attached images for reference.

Did I configure anything wrong? Where is the gap in my understanding? Can someone explain this behavior?