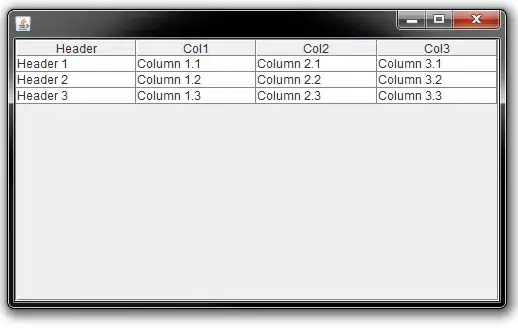

I have a histogram generated with matplotlib, and I have been using sklearn's metrics module to calculate the precision-recall curve, i.e. a plot of the positive predictive value (PPV) as a function of the recall for the data in the histogram. This is the histogram:

The generated curve takes the following form:

I thought that the negative predictive value (NPV) was the complement of the PPV, so my guess was to simply compute NPV = 1 - PPV, but that did not work. So far I have been using the functions from sklearn's metrics module to generate ROC curves and the precision-recall curve, but I haven't found anything in metrics that computes the negative predictive value. This is the source code I have been using to generate curves from the histogram:

    import numpy as np
    import matplotlib.mlab as mlab
    import matplotlib.pyplot as plt
    import pylab
    from sklearn import metrics

    # Load the two samples; the second column holds the raw classifier scores
    data1 = np.loadtxt('1.txt')
    data2 = np.loadtxt('2.txt')
    x = np.transpose(data1)[1]
    y = np.transpose(data2)[1]

    # Rescale the scores with (1 + score) / 2
    background = (1 + y)/2
    signal = (1 + x)/2

    # Stack both samples and label background as 0, signal as 1
    classifier_output = np.concatenate([background,signal])
    true_value = np.concatenate([np.zeros_like(background, dtype=int), np.ones_like(signal, dtype=int)])

    # Precision (PPV) and recall at every threshold
    precision, recall, threshold = metrics.precision_recall_curve(true_value, classifier_output)

    plt.plot(recall, precision)
    plt.show()
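For reference, NPV is defined as TN / (TN + FN), whereas 1 - PPV equals FP / (TP + FP), which is a different quantity and would explain why the guess above fails. The following is only a minimal sketch of computing NPV by hand at each threshold, not a built-in sklearn curve: `npv_curve` is a hypothetical helper name, the scores are synthetic stand-ins for the contents of `1.txt` and `2.txt`, and the counts are recovered from the output of `metrics.roc_curve`.

```python
import numpy as np
from sklearn import metrics


def npv_curve(true_value, classifier_output):
    """Hypothetical helper: NPV = TN / (TN + FN) at each ROC threshold."""
    true_value = np.asarray(true_value)
    # Keep every threshold so the curve is not thinned out
    fpr, tpr, thresholds = metrics.roc_curve(
        true_value, classifier_output, drop_intermediate=False
    )
    n_pos = np.sum(true_value == 1)
    n_neg = np.sum(true_value == 0)
    tn = (1.0 - fpr) * n_neg  # negatives scored below the threshold
    fn = (1.0 - tpr) * n_pos  # positives scored below the threshold
    predicted_negative = tn + fn
    # NPV is undefined where nothing is predicted negative; leave NaN there
    npv = np.full_like(tn, np.nan)
    np.divide(tn, predicted_negative, out=npv, where=predicted_negative > 0)
    return npv, tpr, thresholds


# Synthetic scores in [0, 1], assumed here only for illustration
rng = np.random.default_rng(0)
background = np.clip(rng.normal(0.4, 0.15, 1000), 0, 1)
signal = np.clip(rng.normal(0.6, 0.15, 1000), 0, 1)

classifier_output = np.concatenate([background, signal])
true_value = np.concatenate([
    np.zeros_like(background, dtype=int),
    np.ones_like(signal, dtype=int),
])

npv, tpr, thresholds = npv_curve(true_value, classifier_output)
```

Plotting would then follow the same pattern as the precision-recall plot, e.g. `plt.plot(tpr, npv)`. Since NPV is the precision of the negative class, swapping the labels and negating the scores before calling `metrics.precision_recall_curve` might be another route, though ties at the threshold are then handled slightly differently.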

Is there any other way, in metrics or in general, to calculate the NPV for a histogram like this one?