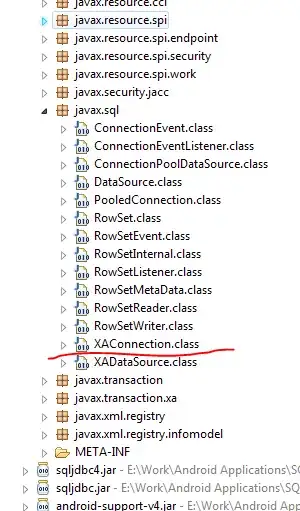

I am doing classification on a dataset with three classes (labels: Low, Medium, High).

I run the following code to get my confusion matrix:

    from sklearn.metrics import confusion_matrix

    cm = confusion_matrix(y_test, y_pred)

And I get the following output for cm:

    array([[18, 10],
           [ 7, 61]], dtype=int64)

What does this output mean? I read the article "Confusion Matrix and Class Statistics" but didn't understand it.
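For reference, here is a minimal sketch of how a matrix with this layout can be built by hand, which may help in reading the output. It follows the convention scikit-learn documents for `confusion_matrix`: rows are the true labels, columns are the predicted labels, and labels appear in sorted order. The helper `confusion_counts`, the label names `class_a` and `class_b`, and the data are all hypothetical, constructed only to reproduce the 2x2 array above. A 2x2 result implies that only two distinct labels occur across `y_test` and `y_pred`; which two of the three classes those are cannot be told from the output alone.

```python
from collections import Counter

def confusion_counts(y_true, y_pred, labels):
    """Count (true, predicted) pairs.

    Row i is the true label labels[i]; column j is the predicted label labels[j].
    """
    pairs = Counter(zip(y_true, y_pred))
    return [[pairs[(t, p)] for p in labels] for t in labels]

# Hypothetical label names and data, chosen to reproduce the 2x2 output above.
labels = ["class_a", "class_b"]
y_true = ["class_a"] * 28 + ["class_b"] * 68
y_pred = (["class_a"] * 18 + ["class_b"] * 10    # true class_a: 18 right, 10 wrong
          + ["class_a"] * 7 + ["class_b"] * 61)  # true class_b: 7 wrong, 61 right

cm = confusion_counts(y_true, y_pred, labels)
for label, row in zip(labels, cm):
    print(f"true {label}: {row}")

# Correct predictions sit on the diagonal.
correct = sum(cm[i][i] for i in range(len(labels)))
total = sum(sum(row) for row in cm)
print(f"accuracy: {correct}/{total}")
```

Read this way, the first row says that 28 samples truly belong to the first label, of which 18 were predicted correctly and 10 were predicted as the second label. With scikit-learn itself, passing `labels=[...]` to `confusion_matrix` fixes the row and column order explicitly and keeps a row and column for a class even when it never occurs.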