I am training a neural network to predict a binary mask on mouse brain images. For this, I am augmenting my data with the ImageDataGenerator from Keras.

However, I have realized that the ImageDataGenerator interpolates the data when applying spatial transformations.

This is fine for the image, but I certainly do not want my mask to contain non-binary values.
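
To make the effect concrete, here is a minimal standalone sketch that uses SciPy's `ndimage.rotate` directly rather than Keras. The square mask and the 30 degree angle are made up for illustration, and I am only assuming that the generator behaves like the linear case. With linear interpolation (`order=1`) the edges of the mask pick up intermediate values, while nearest neighbor interpolation (`order=0`) only copies existing pixel values:

```python
import numpy as np
from scipy import ndimage

# Hypothetical binary mask: a filled square on a 64x64 background.
mask = np.zeros((64, 64), dtype=np.float32)
mask[16:48, 16:48] = 1.0

# Linear interpolation (order=1) blends neighbouring pixels along the edges.
rotated_linear = ndimage.rotate(mask, angle=30, reshape=False,
                                order=1, mode='nearest')

# Nearest neighbor interpolation (order=0) only copies existing pixel values.
rotated_nearest = ndimage.rotate(mask, angle=30, reshape=False,
                                 order=0, mode='nearest')

# The linear result contains many distinct values, the nearest result only 0 and 1.
print("distinct values, linear: ", np.unique(rotated_linear).size)
print("distinct values, nearest:", np.unique(rotated_nearest))
```

The second result is what I would like to get for my masks from the generator.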

Is there any way to choose something like a nearest neighbor interpolation when applying the transformations? I have found no such option in the keras documentation.

(To the left is the original binary mask, to the right is the augmented, interpolated mask)

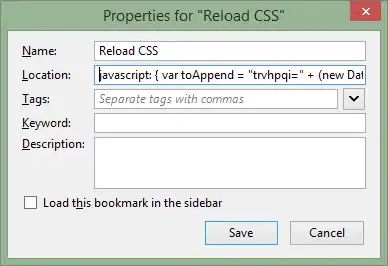

Code for the images:

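    import numpy as np
    import matplotlib.pyplot as plt

    # Assumed setup (not shown above): kp refers to keras.preprocessing, and
    # image is the binary mask as a 4D array (1, height, width, channels).

    # Augmentation settings
    data_gen_args = dict(rotation_range=90,
                         width_shift_range=30,
                         height_shift_range=30,
                         shear_range=5,
                         zoom_range=0.3,
                         horizontal_flip=True,
                         vertical_flip=True,
                         fill_mode='nearest')

    image_datagen = kp.image.ImageDataGenerator(**data_gen_args)
    image_generator = image_datagen.flow(image, seed=1)

    plt.figure()

    # Left: original binary mask
    plt.subplot(1, 2, 1)
    plt.imshow(np.squeeze(image))
    plt.axis('off')

    # Right: augmented (interpolated) mask
    plt.subplot(1, 2, 2)
    plt.imshow(np.squeeze(image_generator.next()[0]))
    plt.axis('off')

    plt.savefig('vis/keras_example')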