I'm testing the MFCC implementation in `tf.signal`. According to the example (https://www.tensorflow.org/api_docs/python/tf/signal/mfccs_from_log_mel_spectrograms), it computes all 80 MFCCs and then takes the first 13.

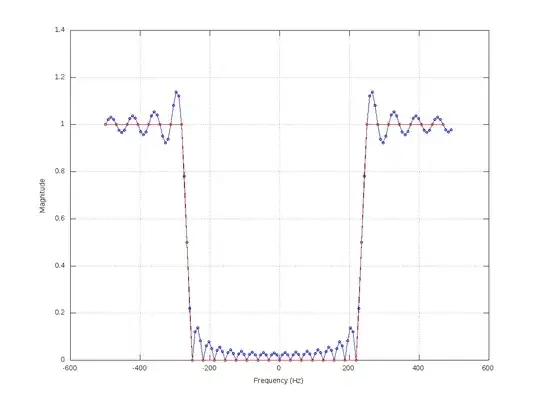

I have tried both the approach above and a "compute the first 13 directly" approach, and the results are very different:

Computing all 80 first, then taking the first 13:

Why is there such a big difference, and which one should I use if I'm passing this as a feature to a CNN or RNN?
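To make the comparison concrete, here is a minimal NumPy sketch of the two approaches, under some stated assumptions. It assumes that "compute the first 13 directly" means running the DCT over only the first 13 log-mel bins; if it instead meant building a 13-bin mel filterbank, the sketch does not cover that. It also assumes that `tf.signal.mfccs_from_log_mel_spectrograms` applies a DCT-II over the last axis scaled by `sqrt(2/N)`, where `N` is the number of mel bins. That scaling is our understanding, not something confirmed in this post, so check it against TF before relying on exact values. The helper `dct2` and the random `log_mel` input are hypothetical stand-ins.

```python
import numpy as np

def dct2(x):
    """DCT-II over the last axis, scaled by sqrt(2/N).

    Assumption: this scaling is meant to mirror tf.signal's MFCC helper;
    verify against TensorFlow before relying on exact values.
    """
    n = x.shape[-1]
    k = np.arange(n)[:, None]  # output coefficient index
    m = np.arange(n)[None, :]  # input mel-bin index
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n))  # shape (n, n)
    return np.sqrt(2.0 / n) * (x @ basis.T)

rng = np.random.default_rng(0)
log_mel = rng.normal(size=(100, 80))  # hypothetical: 100 frames x 80 log-mel bins

# Approach A (TF docs example): DCT over all 80 bins, keep the first 13 coefficients.
mfcc_a = dct2(log_mel)[..., :13]

# Approach B (assumed reading of "compute first 13 directly"):
# DCT over only the first 13 mel bins, i.e. only the lowest-frequency bands.
mfcc_b = dct2(log_mel[..., :13])

print(mfcc_a.shape, mfcc_b.shape)  # (100, 13) (100, 13)
print(np.allclose(mfcc_a, mfcc_b))  # False
```

The shapes match, but the values do not. In approach A, each of the 13 coefficients summarizes the shape of the whole 80-band spectral envelope, using cosine bases of length 80. In approach B, the cosine bases have length 13 and only see the lowest 13 mel bands, so everything above those bands is discarded. Under this reading, the two feature sets describe different things and are not expected to agree.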