I am running three different models (Random Forest, Gradient Boosting, AdaBoost) and a model ensemble built from these three models.

I managed to use SHAP with the GB and RF models, but not with AdaBoost, which fails with the following error:

```
Exception                                 Traceback (most recent call last)
in engine
----> 1 explainer = shap.TreeExplainer(model,data = explain_data.head(1000), model_output= 'probability')

/home/cdsw/.local/lib/python3.6/site-packages/shap/explainers/tree.py in __init__(self, model, data, model_output, feature_perturbation, **deprecated_options)
    110 self.feature_perturbation = feature_perturbation
    111 self.expected_value = None
--> 112 self.model = TreeEnsemble(model, self.data, self.data_missing)
    113
    114 if feature_perturbation not in feature_perturbation_codes:

/home/cdsw/.local/lib/python3.6/site-packages/shap/explainers/tree.py in __init__(self, model, data, data_missing)
    752 self.tree_output = "probability"
    753 else:
--> 754 raise Exception("Model type not yet supported by TreeExplainer: " + str(type(model)))
    755
    756 # build a dense numpy version of all the tree objects

Exception: Model type not yet supported by TreeExplainer: <class 'sklearn.ensemble._weight_boosting.AdaBoostClassifier'>
```
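One model-agnostic way around this error is to explain the model's `predict_proba` output instead of its trees. The sketch below uses a hypothetical helper, `exact_interventional_shap` (not part of SHAP), that computes exact interventional Shapley values by brute force over all feature coalitions, with a background sample playing the same role as `data=` in `TreeExplainer`. It needs 2^d model evaluations per explained row, so it only illustrates the idea for a handful of features; on real data, `shap.KernelExplainer(f, background)` estimates the same kind of values by sampling.

```python
import itertools
import math

import numpy as np


def exact_interventional_shap(f, x, background):
    """Exact interventional Shapley values of f at a single row x (brute force).

    f maps an (n, d) array to an (n,) array, e.g.
    lambda X: model.predict_proba(X)[:, 1].
    The value of a coalition S is the mean of f over the background rows
    after overwriting the features in S with x[S].
    Returns (phi, base_value), where base_value is the mean of f on the background.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    d = x.shape[0]

    def value(S):
        Z = background.copy()
        idx = list(S)
        Z[:, idx] = x[idx]  # features in S take the explained row's values
        return f(Z).mean()

    # Evaluate every coalition once; keys are sorted index tuples.
    values = {S: value(S)
              for r in range(d + 1)
              for S in itertools.combinations(range(d), r)}

    phi = np.zeros(d)
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for r in range(d):
            # Shapley weight |S|! (d - |S| - 1)! / d!
            weight = math.factorial(r) * math.factorial(d - r - 1) / math.factorial(d)
            for S in itertools.combinations(others, r):
                with_i = tuple(sorted(S + (i,)))
                phi[i] += weight * (values[with_i] - values[S])
    return phi, values[()]
```

For example, `exact_interventional_shap(lambda X: ada.predict_proba(X)[:, 1], x, background)` works for the AdaBoost model because it only calls `predict_proba`. Because Shapley values are linear in the model output, the values for an ensemble that plainly averages the three models' positive-class probabilities equal the average of the three models' values, provided the same background data and the same output are used; a weighted or stacked ensemble would need its own weights or its own explanation.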

I found this on GitHub, which states:

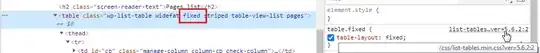

> TreeExplainer creates a TreeEnsemble object from whatever model type we are trying to explain, and then works with that downstream. So all you would need to do is add another if statement in the TreeEnsemble constructor, similar to the one for gradient boosting.
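Instead of patching the `TreeEnsemble` constructor, the same idea can be sketched outside SHAP by converting the AdaBoost trees into plain per-tree dictionaries. The key names below follow SHAP's "loading a custom tree model" example (`children_left`, `children_right`, `children_default`, `features`, `thresholds`, `values`, `node_sample_weight`); treat that format as an assumption to check against your installed SHAP version. The sketch also assumes binary classification and the discrete `algorithm="SAMME"` variant: each tree votes for one class at each leaf, so giving a leaf the value `+w/sum(w)` or `-w/sum(w)` turns the ensemble into an additive sum of trees. Older scikit-learn releases default to `SAMME.R`, which combines per-tree log-probabilities instead and is not covered here. Both helper names are hypothetical.

```python
import numpy as np


def adaboost_to_tree_dicts(clf):
    """Convert a fitted binary AdaBoostClassifier (discrete SAMME) into a list of
    per-tree dicts laid out like SHAP's custom-tree example (assumed format).

    Node values are +w_m / sum(w) where tree m votes for classes_[1] and
    -w_m / sum(w) where it votes for classes_[0], so summing the leaf values
    reached by a row gives an additive margin whose sign is the predicted class.
    """
    if len(clf.classes_) != 2:
        raise ValueError("this sketch only handles binary classification")
    total = clf.estimator_weights_.sum()
    trees = []
    for est, w in zip(clf.estimators_, clf.estimator_weights_):
        t = est.tree_
        # Class each node votes for (argmax of its class weights); the same
        # argmax DecisionTreeClassifier.predict uses at the leaves.
        votes = np.argmax(t.value[:, 0, :], axis=1)
        sign = np.where(votes == 1, 1.0, -1.0)
        trees.append({
            "children_left": t.children_left.copy(),
            "children_right": t.children_right.copy(),
            # sklearn trees have no missing-value branch, so reuse the left child
            "children_default": t.children_left.copy(),
            "features": t.feature.copy(),
            "thresholds": t.threshold.copy(),
            "values": (sign * w / total).reshape(-1, 1),
            "node_sample_weight": t.weighted_n_node_samples.copy(),
        })
    return trees


def tree_dicts_margin(trees, X):
    """Sum the leaf values of the converted trees for each row of X, walking each
    tree like sklearn does (go left when x[feature] <= threshold)."""
    X = np.asarray(X, dtype=np.float32)  # sklearn trees compare float32 inputs
    out = np.zeros(X.shape[0])
    for tree in trees:
        for i, x in enumerate(X):
            node = 0
            while tree["children_left"][node] != -1:  # -1 marks a leaf
                if x[tree["features"][node]] <= tree["thresholds"][node]:
                    node = tree["children_left"][node]
                else:
                    node = tree["children_right"][node]
            out[i] += tree["values"][node, 0]
    return out
```

With SHAP installed, the list could then be passed as `shap.TreeExplainer({"trees": adaboost_to_tree_dicts(ada)})` and evaluated with `explainer.shap_values(X)`, assuming your SHAP version accepts this dictionary. Two caveats: the values explain the margin, not a probability, because AdaBoost's `predict_proba` is not a plain sum of per-tree outputs, so `model_output='probability'` does not carry over; and depending on the scikit-learn version, `decision_function` equals this margin or twice it, with the same sign and therefore the same predictions.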

But I don't know how to implement this, since I am quite new to this.