I have about 100k arrays of size 256 that I would like to feed into a neural network made of a few dense layers, which should output 100k arrays, again of size 256. (I would like the network to transform each input array into the corresponding output array.) I cannot manage to set it up correctly.

My X_train and y_train have shape (98304, 256), my X_test and y_test (16384, 256).

My network at the moment is:

    # Imports assumed (standalone Keras); they were not shown in my original snippet.
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.optimizers import Adam

    model = Sequential()
    model.add(Dense(1, input_shape=(256,), activation='relu'))
    model.add(Dense(1024, activation='relu'))
    model.add(Dense(512, activation='relu'))
    model.add(Dense(1024, activation='relu'))
    model.add(Dense(256, activation='linear'))
    optimizer = Adam()
    model.compile(optimizer=optimizer, loss='mean_squared_error', metrics=['accuracy', 'mae'])

The network runs, but it does not give any meaningful result. Training stops after 20 epochs because I use an early-stopping callback.

    Epoch 00019: val_loss did not improve from -inf
    Epoch 20/200
    6400/6400 [==============================] - 1s 232us/step - loss: nan - acc: 0.2511 - mean_absolute_error: nan - val_loss: nan - val_acc: 0.2000 - val_mean_absolute_error: nan

And if I try to use it to predict, I only get nan values (I do not have any nan in my training set).
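For reference, the NaN check mentioned above can be done along these lines. This is a minimal sketch: the small random arrays stand in for the real X_train and y_train, and the helper name `has_bad_values` is hypothetical. It also looks for infinite values, since those can lead to a nan loss as well.

```python
import numpy as np

def has_bad_values(arr):
    """Return True if arr contains any NaN or infinite entry."""
    return not np.isfinite(arr).all()

# Placeholder data with the same layout as the real arrays: (samples, 256).
rng = np.random.default_rng(0)
X_train = rng.uniform(0.0, 1.0, size=(100, 256))
y_train = rng.uniform(0.0, 1.0, size=(100, 256))

print("X_train has NaN/inf:", has_bad_values(X_train))
print("y_train has NaN/inf:", has_bad_values(y_train))
print("value range of X_train:", X_train.min(), X_train.max())
```

Printing the value range as well helps spot very large inputs, which may also be worth ruling out.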

Hope someone can help me with this. Thanks in advance.

Edit: To check whether the problem is with the inputs or with the algorithm, I tried creating my inputs and targets with the following code:

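    import random
    import numpy as np

    X_train = []
    y_train = []
    for it in range(1000):
        # Each sample is a linear ramp between two random values in [0, 1]
        beginning = random.uniform(0, 1)
        end = random.uniform(0, 1)
        # Input ramps from beginning towards end; target ramps from end towards beginning
        X_train.append([beginning + (end - beginning) * jt / 256 for jt in range(256)])
        y_train.append([end + (beginning - end) * jt / 256 for jt in range(256)])
    X_train = np.array(X_train)
    y_train = np.array(y_train)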

And I still get:

    Epoch 27/200
    1000/1000 [==============================] - 0s 236us/step - loss: nan - acc: 0.4970 - mean_absolute_error: nan

Edit2: If I increase the complexity of my network, I manage to get a loss different from nan using 10k training arrays created with the code above. However, the results are still quite bad, which makes me wonder whether I am setting up the network incorrectly.

The new network:

    model = Sequential()
    model.add(Dense(1, input_shape=(256,), activation='relu'))
    model.add(Dense(2048, activation='relu'))
    model.add(Dense(2048, activation='relu'))
    model.add(Dense(2048, activation='relu'))
    model.add(Dense(256, activation='linear'))
    optimizer = Adam()
    model.compile(optimizer=optimizer, loss='mean_squared_error', metrics=['mae'])
    model.summary()

And the result once training converges:

    Epoch 33/200
    10000/10000 [==============================] - 23s 2ms/step - loss: 0.0561 - mean_absolute_error: 0.2001 - val_loss: 0.0561 - val_mean_absolute_error: 0.2001

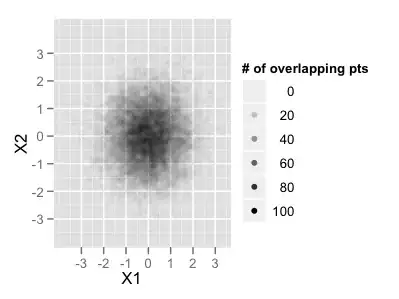

If I check the output of the network, I always obtain a vector with all points around 0.5 regardless of the input.
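One way to put a number on this, sketched under the assumption that the data come from the generator in the first edit, is to compute the error of a predictor that ignores its input and always outputs 0.5. The function `make_data` below is a hypothetical vectorized rewrite of that loop, not the original code.

```python
import numpy as np

def make_data(n_samples, n_points=256, seed=0):
    """Vectorized version of the ramp generator from the first edit (assumed equivalent)."""
    rng = np.random.default_rng(seed)
    beginning = rng.uniform(0.0, 1.0, size=(n_samples, 1))
    end = rng.uniform(0.0, 1.0, size=(n_samples, 1))
    t = np.arange(n_points) / n_points        # jt/256 for jt in range(256)
    X = beginning + (end - beginning) * t     # ramp from beginning towards end
    y = end + (beginning - end) * t           # ramp from end towards beginning
    return X, y

X, y = make_data(10000)

# Baseline that ignores the input and always predicts 0.5.
constant_pred = np.full_like(y, 0.5)
baseline_mse = np.mean((y - constant_pred) ** 2)
baseline_mae = np.mean(np.abs(y - constant_pred))
print("constant-0.5 baseline: mse =", baseline_mse, " mae =", baseline_mae)
```

If these values come out close to the loss and mean absolute error in the log above, the network is doing no better than a constant guess.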

Also, if I try to predict a single vector using y_pred=model.predict(Xval[3]), I get the error:

    ValueError: Error when checking : expected dense_27_input to have shape (256,) but got array with shape (1,)
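A sketch of the shapes involved, using a random placeholder for Xval. Keras models expect the first axis of the input to be the batch axis, so a single sample of shape (256,) needs an explicit batch dimension before it is passed to model.predict. The predict call itself is left as a comment because it needs the trained model.

```python
import numpy as np

# Placeholder for the validation set; the real Xval has shape (n_samples, 256).
Xval = np.random.default_rng(0).uniform(0.0, 1.0, size=(8, 256))

single = Xval[3]                            # shape (256,): no batch axis
batch_a = Xval[3:4]                         # shape (1, 256): slicing keeps the batch axis
batch_b = Xval[3].reshape(1, -1)            # shape (1, 256): batch axis added explicitly
batch_c = np.expand_dims(Xval[3], axis=0)   # shape (1, 256): same result

print(single.shape, batch_a.shape, batch_b.shape, batch_c.shape)

# With the trained model, the call would then be (assumption, not run here):
# y_pred = model.predict(Xval[3:4])   # y_pred would have shape (1, 256)
```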