I need to classify users using binary classification (each user is labelled 1 or 0 in each case).

I have 30 users, so there are 30 sets of FPR and TPR values.

I did not use `roc_curve(y_test.ravel(), y_score.ravel())` to get the FPR and TPR. There is a reason for this: I have to classify each user with binary classification and generate the FPR and TPR using my own code.

Actually, I did not store the class labels as multi-class. Instead, I took one user as the positive class and the rest as the negative class, and repeated this for every other user. Then I calculated the FPR and TPR using my own code, without using roc_auc_score.
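For illustration, the one-vs-rest setup described above could be sketched roughly as follows. This is a simplified stand-in, not the actual code used here: the function name `one_vs_rest_rates`, the toy `labels` and `scores` arrays, and the threshold sweep are all assumptions made for the example.

```python
import numpy as np

def one_vs_rest_rates(scores, is_target):
    """Return (fpr, tpr) arrays for one user treated as the positive class.

    scores    : decision scores, higher means more likely the target user
    is_target : boolean array, True where the sample belongs to that user
    """
    scores = np.asarray(scores, dtype=float)
    is_target = np.asarray(is_target, dtype=bool)
    # Sweep thresholds from +inf (nothing accepted) down to the lowest score
    thresholds = np.concatenate(([np.inf], np.unique(scores)[::-1]))
    n_pos = is_target.sum()
    n_neg = (~is_target).sum()
    fpr, tpr = [], []
    for t in thresholds:
        accepted = scores >= t
        tpr.append((accepted & is_target).sum() / n_pos)
        fpr.append((accepted & ~is_target).sum() / n_neg)
    return np.array(fpr), np.array(tpr)

# Toy data (hypothetical): 3 users, 2 samples each; column u scores user u
labels = np.array([0, 0, 1, 1, 2, 2])
scores = np.array([[0.9, 0.1, 0.2],
                   [0.8, 0.3, 0.1],
                   [0.2, 0.7, 0.4],
                   [0.6, 0.4, 0.3],
                   [0.1, 0.2, 0.9],
                   [0.3, 0.5, 0.6]])

fpr_list, tpr_list = [], []
for user in range(3):
    # Current user is the positive class, everyone else is negative
    f, t = one_vs_rest_rates(scores[:, user], labels == user)
    fpr_list.append(f)
    tpr_list.append(t)
```

Sweeping the thresholds from high to low means each curve starts at (0, 0), ends at (1, 1), and has a non-decreasing FPR, which matters later when the curves are interpolated.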

Let's say I already have the FPR and TPR values in a list.

I have this code:

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.metrics import roc_curve, auc

    n_classes = 30

    # fpr_svc and tpr_svc are lists of 30 arrays computed beforehand (classified using SVC)

    # First aggregate all false positive rates
    all_fpr = np.unique(np.concatenate([fpr_svc[i] for i in range(n_classes)]))

    # Then interpolate all ROC curves at these points
    # (np.interp is used in place of the deprecated scipy.interp)
    mean_tpr = np.zeros_like(all_fpr)
    for i in range(n_classes):
        mean_tpr += np.interp(all_fpr, fpr_svc[i], tpr_svc[i])

    # Finally average it (the AUC is not computed yet)
    mean_tpr /= n_classes

    fpr = all_fpr[:]
    tpr = mean_tpr[:]

    plt.figure()
    lw = 2
    # A label is needed so that plt.legend() has an entry to show
    plt.plot(fpr, tpr, color='darkorange', lw=lw, label='Mean ROC')
    plt.plot([0, 1], [0, 1], color='navy', lw=lw, linestyle='--')
    plt.xlim([0.0, 1.0])
    plt.ylim([0.0, 1.05])
    plt.xlabel('False Acceptance Rate')
    plt.ylabel('True Acceptance Rate')
    plt.title('Receiver operating characteristic example')
    plt.legend(loc="lower right")
    plt.show()

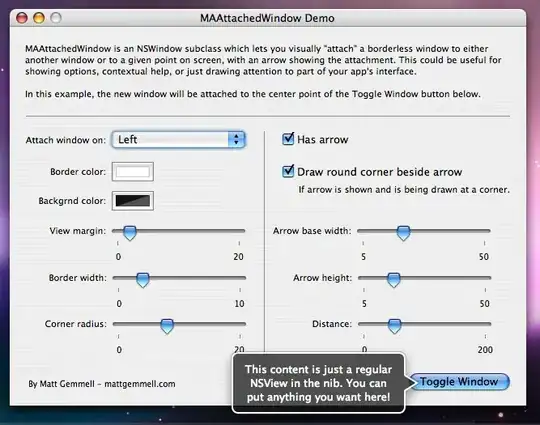

But it produced this figure, which looks weird.

Moreover, how do I get the average AUC as well?
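To make the question concrete, here is a rough sketch of what "average AUC" could mean in this setting. It is only one possible reading, not a confirmed solution: the function name `macro_average_roc` and the toy curves are made up for illustration. The sketch sorts each curve by FPR first, because `np.interp` expects increasing x values, and it integrates with the trapezoidal rule, which is the same rule `sklearn.metrics.auc` uses.

```python
import numpy as np

def trapezoid_area(x, y):
    """Area under the piecewise-linear curve through (x, y), x increasing."""
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

def macro_average_roc(fpr_list, tpr_list):
    """Average several ROC curves on a shared FPR grid.

    Returns (all_fpr, mean_tpr, mean_of_aucs, auc_of_mean_curve).
    """
    curves = []
    for f, t in zip(fpr_list, tpr_list):
        f = np.asarray(f, dtype=float)
        t = np.asarray(t, dtype=float)
        # Sort by FPR, then by TPR, so every curve runs from left to right
        order = np.lexsort((t, f))
        curves.append((f[order], t[order]))

    # Shared grid: every FPR value that occurs in any curve
    all_fpr = np.unique(np.concatenate([f for f, _ in curves]))

    # Interpolate each curve onto the grid and average the TPR values
    mean_tpr = np.zeros_like(all_fpr)
    for f, t in curves:
        mean_tpr += np.interp(all_fpr, f, t)
    mean_tpr /= len(curves)

    # Two candidate "average AUC" values
    mean_of_aucs = float(np.mean([trapezoid_area(f, t) for f, t in curves]))
    auc_of_mean_curve = trapezoid_area(all_fpr, mean_tpr)
    return all_fpr, mean_tpr, mean_of_aucs, auc_of_mean_curve

# Toy curves (hypothetical); the first is deliberately stored right-to-left
toy_fpr = [np.array([1.0, 0.5, 0.0]), np.array([0.0, 1.0])]
toy_tpr = [np.array([1.0, 1.0, 0.0]), np.array([0.0, 1.0])]
grid, avg_tpr, mean_auc, curve_auc = macro_average_roc(toy_fpr, toy_tpr)
print(mean_auc, curve_auc)
```

One thing worth checking in the real data is whether each `fpr_svc[i]` is sorted in increasing order and includes the (0, 0) and (1, 1) endpoints, since unsorted input to the interpolation step can distort the averaged curve.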