Following this post and my inability to understand the Johnson-Neyman [JN] interval calculation method, I'm thinking of moving out of the box and consider other algorithms to do trivariate (multivariate between three variables) correlation analysis. Consider the below Pandas data frame:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from scipy.stats import pearsonr, spearmanr, kendalltau, pointbiserialr

np.random.seed(42)

x1 = np.random.rand(10) * 10

x2 = np.random.rand(10) * 10

x3 = np.random.rand(10) * 10

x = np.concatenate((x1, x2, x3))

y1 = 1.5 * x1 + (np.random.rand(10) - 0.5) * 3.5

y2 = 0.5 * x2 + (np.random.rand(10) - 0.5) * 4.1

y3 = 3.0 * x3 + (np.random.rand(10) - 0.5) * 2.4

y = np.concatenate((y1, y2, y3))

M = [1.5, 5.5]

m1 = np.random.rand(10) * M[0]

m2 = np.random.rand(10) * (M[1] - M[0]) + M[0]

m3 = np.random.rand(10) * 2 + M[1]

m = np.concatenate((m1, m2, m3))

df = pd.DataFrame({'X': x, 'Y': y, 'M': m})

df = df.sample(frac=1).reset_index(drop=True)

Now I need to have a function of:

classify_moderator(dataframe=df, predictor="x", response="y", moderator="m")

which returns a list of one or more values for m which partitions the data frame into two or more significant correlation groups. For example, for the above data frame, we should get M = [1.5, 5.5], wherein the groups of df["m"] < M[0], M[0] < df["m"] < M[1], and M[1] < df["m"] the association between subgroups of df["x"] and df["y"] is stronger than the total data frame in terms of bivariate correlations analysis algorithms, such as Pearson, Kendall, Spearman, and Point-Biserial methods. It is important to consider that we don't know exactly how many partitions of interest are there. It can be two or more. But if it makes the method unnecessarily complicated we can assume the number as well.

I prefer conventional methods (e.g., scipy.optimize module) over heuristic ones as they help me better understand the underlying math but otherwise is also appreciated. Thanks for your support in advance.

P.S.1. Here my first failed attempt to group into two:

def groupedCor(modValue, dataframe, predictor, response, moderator):

df1 = dataframe.loc[dataframe[moderator] < modValue]

df2 = dataframe.loc[modValue <= dataframe[moderator]]

return pearsonr(df1[predictor], df1[response])[1] + pearsonr(df2[predictor], df2[response])[1]

M1 = (df["M"].max() - df["M"].min()) / 2 + df["M"].min()

M1 = fmin(groupedCor, x0=M1, args=(df, "X", "Y", "M"))

as an example of what I'm trying to do. If I could understand why the above snippet fails by:

ValueError: Lengths must match to compare

then I could probably solve the problem myself.

P.S.2. I realized that that none of the bivariate correlation analysis methods are representative of a good fit. So I used the r-squared of a linear regression:

from sklearn.linear_model import LinearRegression

def groupedScore(modValue, dataframe, predictor, response, moderator):

df1 = dataframe[dataframe[moderator] < modValue]

df2 = dataframe[modValue <= dataframe[moderator]]

x1 = df1[predictor].values

x1_ = x1.reshape((-1, 1))

y1 = df1[response].values

model1 = LinearRegression().fit(x1_, y1)

x2 = df2[predictor].values

x2_ = x2.reshape((-1, 1))

y2 = df2[response].values

model2 = LinearRegression().fit(x2_, y2)

return (model1.score(x1_, y1) + model2.score(x2_, y2)) / 2

def groupedScore_v(x, dataframe, predictor, response, moderator):

return [[groupedScore(xi, dataframe, predictor, response, moderator) for xi in x[2:-1]], x[2:-1]]

scores = groupedScore_v(np.sort(df["M"].values).tolist(), df, "X", "Y", "M")

plt.plot(np.asarray(scores[1]), np.asarray(scores[0]))

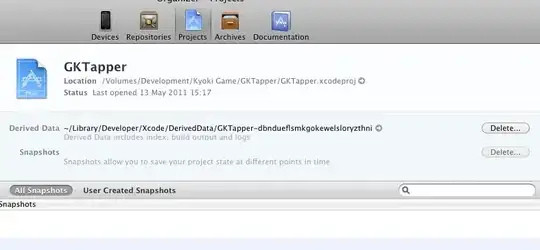

which results in:

which is a very strang result!