I have been experimenting with both Keras' `Model.fit()` and the low-level TensorFlow `tf.GradientTape` API for optimising the trainable parameters of a neural network, and noticed that the Keras version performs significantly better.

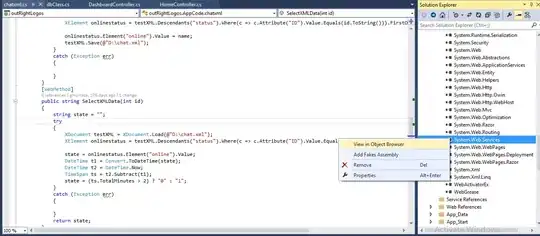

Code for the Keras-optimised version, where the final MSE is:

```python
from tensorflow import keras
import tensorflow as tf
from sklearn.datasets import load_boston  # removed in scikit-learn 1.2; needs an older version

X, y = load_boston(return_X_y=True)  # X: (506, 13), y: (506,)
X_tf = tf.cast(X, dtype=tf.float32)

model = keras.Sequential()
model.add(keras.layers.Dense(100, activation='relu', input_shape=(13,)))
model.add(keras.layers.Dense(100, activation='relu'))
model.add(keras.layers.Dense(100, activation='relu'))
model.add(keras.layers.Dense(1, activation='linear'))

model.compile(optimizer=tf.keras.optimizers.Adam(0.01),
              loss=tf.keras.losses.MSE)

model.fit(X, y, epochs=1000)  # batch_size is left at its default of 32
```

However, when I optimise the Keras model with tf.GradientTape as shown in the following code:

```python
from tensorflow import keras
import tensorflow as tf
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.datasets import load_boston  # removed in scikit-learn 1.2; needs an older version

X, y = load_boston(return_X_y=True)
X_tf = tf.cast(X, dtype=tf.float32)

model = keras.Sequential()
model.add(keras.layers.Dense(100, activation='relu', input_shape=(np.shape(X)[1],)))
model.add(keras.layers.Dense(100, activation='relu'))
model.add(keras.layers.Dense(100, activation='relu'))
model.add(keras.layers.Dense(1, activation='linear'))

optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)

def loss_func(pred, target):
    # mean of the squared differences between predictions and targets
    return tf.reduce_mean(tf.square(pred - target))

trainable_params = model.trainable_variables

def train_step():
    # one update per call, computed on the full dataset (all 506 rows)
    with tf.GradientTape() as tape:
        y_tild = model(X_tf)
        loss = loss_func(y_tild, y)
    grads = tape.gradient(loss, trainable_params)
    optimizer.apply_gradients(zip(grads, trainable_params))
    print("Loss : " + str(loss.numpy()))

epochs = 1000
for ii in range(epochs):
    train_step()
```

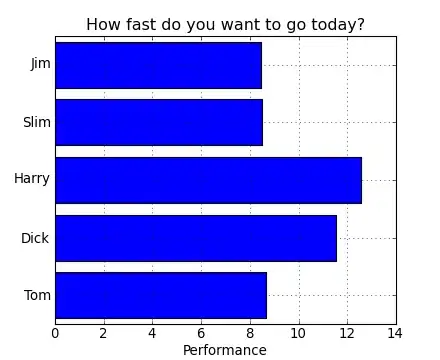

And obtain the following graph for the deviation values.

The values from the `Model.fit` version are much closer to the actual values than those obtained with `GradientTape`. The `GradientTape` predictions also barely vary between inputs and cluster around a mean, whereas the Keras predictions show much more variation.
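Predictions that collapse onto a mean are a known symptom of a shape mismatch worth ruling out. `model(X_tf)` returns shape `(506, 1)`, while `y` from `load_boston` has shape `(506,)`. Under NumPy-style broadcasting, which TensorFlow follows, `pred - target` in `loss_func` then becomes a `(506, 506)` matrix of every prediction minus every target. Its mean is (1/n²) Σᵢ Σⱼ (pᵢ − yⱼ)² = (1/n) Σᵢ [(pᵢ − ȳ)² + σ²], which each pᵢ minimises by predicting the target mean ȳ regardless of its input. The sketch below reproduces this in NumPy with synthetic targets (an assumption standing in for the Boston data); `mse_as_written` is a hypothetical name that mirrors `loss_func`.

```python
import numpy as np

def mse_as_written(pred, target):
    # same arithmetic as loss_func, with no check that the shapes match
    return np.mean(np.square(pred - target))

n = 506
rng = np.random.default_rng(0)
y = rng.normal(22.0, 9.0, size=n)      # synthetic targets, shape (506,) like load_boston's y
perfect = y.reshape(-1, 1)             # exact predictions, shape (506, 1) like model(X_tf)
constant = np.full((n, 1), y.mean())   # the same prediction (the target mean) for every input

print((perfect - y).shape)                        # (506, 506): broadcast, not element-wise
print(mse_as_written(perfect, y))                 # 2 * y.var(): exact predictions score badly
print(mse_as_written(constant, y))                # y.var(): the constant prediction scores better
print(mse_as_written(perfect, y.reshape(-1, 1)))  # 0.0 once the target is reshaped to (506, 1)
```

In the `GradientTape` loop, passing `y.reshape(-1, 1)` as the target makes the subtraction element-wise again. As far as I know, the losses set up by `model.compile` already reconcile this kind of one-dimension rank mismatch, which would explain why `Model.fit` is unaffected; that is an assumption worth confirming against the Keras source.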

How can I use the low-level `GradientTape` API to get performance comparable to the high-level Keras API? What is `Model.fit` doing that makes it outperform my implementation so much? I tried going through the source code but couldn't pin it down.
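One concrete difference in what `Model.fit` does: by default it uses `batch_size=32` and `shuffle=True`, so each of the 1000 epochs performs ceil(506 / 32) = 16 Adam updates on shuffled mini-batches, about 16,000 updates in total, whereas the `train_step` loop performs one full-batch update per epoch, 1,000 in total. The pure-Python sketch below reproduces that per-epoch schedule; `minibatch_indices` is a hypothetical helper, not part of Keras or TensorFlow.

```python
import math
import random

def minibatch_indices(n_samples, batch_size=32, shuffle=True, seed=None):
    """Yield lists of row indices covering one epoch, following Model.fit's
    defaults (batch_size=32, shuffle=True). Hypothetical helper, not a Keras API."""
    order = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(order)  # new permutation for this epoch
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]  # the final batch may be smaller

n_samples, epochs = 506, 1000  # the Boston housing data has 506 rows
steps_per_epoch = math.ceil(n_samples / 32)
print(steps_per_epoch)           # 16
print(steps_per_epoch * epochs)  # 16000 optimiser updates, versus 1000 full-batch ones

batches = list(minibatch_indices(n_samples, seed=0))
print(len(batches), len(batches[-1]))  # 16 26
```

A mini-batch version of the training loop could iterate over these index lists inside each epoch and run one update per batch on `tf.gather(X_tf, idx)` and `y[idx]`, using a hypothetical variant of `train_step` that takes the batch as arguments. This mirrors only the batching schedule, not every other detail of `Model.fit`.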

Thanks in advance.