My first thought is to apply a Gaussian blur and use it as a sort of "unsharp filter". (I think my second idea is better: it combines this blur-and-add approach with the erosion/dilation game. I posted it as a separate answer because I think it is a different-enough strategy to merit that.) @eldesgraciado mentioned frequency filtering, which is basically what we're doing here. I'll put up some code and an explanation. (Here is one answer to an SO post that has a lot about sharpening; the linked answer is a more flexible unsharp mask written in Python. Do take the time to look at the other answers too, including this one, one of many simple implementations that look just like mine, though some are written in other programming languages.) You'll need to experiment with the parameters. It's possible this won't work, but it's the first thing I thought of.

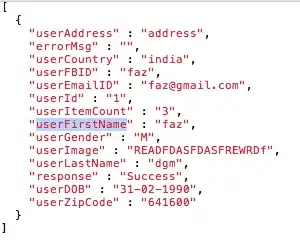

```python
>>> import cv2
>>> im_0 = cv2.imread("FWM8b.png")
>>> cv2.imshow("FWM8b.png", im_0)
>>> cv2.waitKey(0)
## Press any key.
>>> ## Here's where we get to frequency. We'll use a Gaussian blur.
## We want to take out the "frequency" of changes from white to black
## and back to white that are narrower than the strokes of the "1973".
>>> k_size = 0  ## Kernel size, the "width frequency" if you will. Using zero
## lets OpenCV derive the width from sigma in the Gaussian function.
## You'll want to experiment with this and the other parameters,
## perhaps running OCR over the image after each combination.
## Hint: a nonzero k_size must be odd. It is the full width of the
## kernel in pixels, so think of it as a diameter rather than a radius.
>>> gs_border = 3  ## Despite the name, this is passed as sigmaX, the
## standard deviation of the Gaussian, not a border setting.
>>> im_blurred = cv2.GaussianBlur(im_0, (k_size, k_size), gs_border)
>>> cv2.imshow("gauss", im_blurred)
>>> cv2.waitKey(0)
```
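To see why a blur removes changes narrower than its kernel while leaving thick strokes mostly intact, here is a one-dimensional numpy sketch (not OpenCV). It builds a white row with a 1-pixel dark scratch and a 15-pixel dark stroke, then blurs it with a Gaussian of sigma 3 truncated at 3 sigma. `blur_1d`, the widths, and the edge handling are illustrative assumptions, not what `cv2.GaussianBlur` does internally.

```python
import numpy as np


def blur_1d(row, sigma):
    """Gaussian-blur a 1-D row, truncating the kernel at 3 sigma and repeating edge pixels."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()  # weights sum to 1, so flat regions keep their value
    padded = np.pad(row.astype(np.float64), radius, mode="edge")
    return np.convolve(padded, kernel, mode="valid")  # same length as row


row = np.full(80, 255.0)
row[20] = 0        # thin scratch, 1 pixel wide
row[50:65] = 0     # thick stroke, 15 pixels wide
blurred = blur_1d(row, sigma=3)
thin_min = blurred[10:31].min()   # about 221: the scratch nearly vanishes
thick_min = blurred[45:70].min()  # about 3: the stroke's center stays dark
```

The thin scratch is spread over roughly 19 pixels and fades toward white, while the stroke's center is still surrounded by dark pixels on both sides, so it stays black.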

Okay, my parameters probably didn't blur this enough. The parts of the words you want to get rid of are still fairly sharp after the blur. I doubt you'll see much difference from the original, but hopefully you'll get the idea.

We're going to multiply the original image by one weight, multiply the blurred image by another, and subtract the weighted blur from the weighted original. The code should make this clearer, I hope.

```python
>>> orig_img_multiplier = 1.5
>>> blur_subtraction_factor = -0.5
>>> gamma = 0
>>> im_better = cv2.addWeighted(im_0, orig_img_multiplier, im_blurred, blur_subtraction_factor, gamma)
>>> cv2.imshow("First shot at fixing", im_better)
>>> cv2.waitKey(0)
```
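Written as an equation, using OpenCV's documented `addWeighted` formula (dst = saturate(src1·alpha + src2·beta + gamma)), the call is a classic unsharp mask. Writing $I$ for `im_0` and $G_\sigma * I$ for `im_blurred`:

$$
\mathrm{im\_better} = \operatorname{sat}\bigl(1.5\,I - 0.5\,(G_\sigma * I) + 0\bigr) = \operatorname{sat}\bigl(I + 0.5\,(I - G_\sigma * I)\bigr)
$$

Here $\operatorname{sat}$ clips to $[0, 255]$ for 8-bit images. The term $I - G_\sigma * I$ is the high-frequency detail the blur removed, so these weights add half of it back on top of the original.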

Yeah, not much different. Play with the parameters, try doing the blur before your adaptive threshold, and try some other methods. I can't guarantee it will work, but hopefully it gets you started.

Edit

This is a great question. Responding to the tongue-in-cheek criticism from @eldesgraciado:

> Ah, naughty, naughty. Trying to break them CAPTCHA codes, huh? They are difficult to break for a reason. The text segmentation, as you see, is non-trivial. In your particular image, there’s a lot of high-frequency noise, you could try some frequency filtering first and see what result you get.

I submit the following from the Wikipedia article on reCAPTCHA (archived).

> reCAPTCHA has completely digitized the archives of The New York Times and books from Google Books, as of 2011.[3] The archive can be searched from the New York Times Article Archive.[4] Through mass collaboration, reCAPTCHA was helping to digitize books that are too illegible to be scanned by computers, as well as translate books to different languages, as of 2015.[5]

Also look at this article (archived).

I don't think this CAPTCHA is part of Massive-scale Online Collaboration, though.

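Edit: Some other type of sharpening will be needed. I just realized that I'm applying the 1.5 and -0.5 multipliers to pixels that are mostly very close to 0 or 255. Because cv2.addWeighted saturates its result to [0, 255] for 8-bit images, a white pixel (1.5*255 - 0.5*blur is at least 255) stays white and a black pixel (-0.5*blur is at most 0) stays black, so I'm probably just recovering the original image after the sharpening. I welcome any feedback on this.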
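A small numpy sketch of that clipping effect. `add_weighted_u8` is a hypothetical stand-in that mimics `cv2.addWeighted` on uint8 arrays (multiply, add, round, clip), and the blurred row holds made-up illustrative values, not output from the real image.

```python
import numpy as np


def add_weighted_u8(src1, alpha, src2, beta, gamma=0.0):
    """Mimic cv2.addWeighted for uint8 inputs: weighted sum, round, clip to [0, 255]."""
    out = src1.astype(np.float64) * alpha + src2.astype(np.float64) * beta + gamma
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# One row of a nearly binary image: white, a dark stroke, white.
orig = np.array([255, 255, 255, 0, 0, 0, 255, 255, 255], dtype=np.uint8)
# Illustrative (made-up) blur of that row: edges smeared toward gray.
blurred = np.array([255, 230, 170, 85, 60, 85, 170, 230, 255], dtype=np.uint8)

sharpened = add_weighted_u8(orig, 1.5, blurred, -0.5)
# Every pixel is clipped back to its original 0 or 255, so nothing changes.

# A mid-gray pixel, by contrast, is actually pushed away from its blur:
gray_pixel = add_weighted_u8(np.array([100], np.uint8), 1.5,
                             np.array([120], np.uint8), -0.5)
# 1.5*100 - 0.5*120 = 90
```

So the unsharp mask only has room to work on intermediate gray values, which a thresholded or near-binary image barely has.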

Also, from comments with @eldesgraciado:

Someone probably knows a better sharpening algorithm than the one I used. Blur it enough, and maybe threshold on average values over an n-by-n grid (pixel density). I don't know too much about the whole adaptive-thresholding-then-contours approach. Maybe that could be redone after the blurring...
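The "threshold on average values over an n-by-n grid" idea might look like this numpy sketch. `block_density_threshold`, the tile size `n=4`, and the cutoff of 128 are illustrative assumptions, and partial tiles at the right and bottom edges are simply dropped.

```python
import numpy as np


def block_density_threshold(gray, n=4, thresh=128):
    """Average each n-by-n tile of a uint8 image; tiles darker than thresh become 0."""
    h, w = gray.shape
    h_crop, w_crop = h - h % n, w - w % n  # drop partial tiles at the edges
    # Axis 1 indexes rows within a tile, axis 3 columns within a tile.
    tiles = gray[:h_crop, :w_crop].reshape(h_crop // n, n, w_crop // n, n)
    density = tiles.mean(axis=(1, 3))      # one mean brightness per tile
    return np.where(density < thresh, 0, 255).astype(np.uint8)


# 8x8 white image: a solid dark 4x4 block (a "stroke") plus one stray dark pixel.
img = np.full((8, 8), 255, dtype=np.uint8)
img[0:4, 0:4] = 0   # stroke fills the top-left tile
img[6, 6] = 0       # speckle in the bottom-right tile
result = block_density_threshold(img)
# result -> [[0, 255], [255, 255]]: the stroke survives, the speckle does not.
```

The output is n times smaller in each direction, so you would upscale it (or use it as a mask) before feeding anything back into the contour step.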

Just to give you some ideas ...

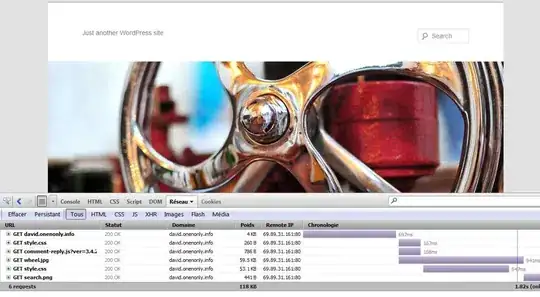

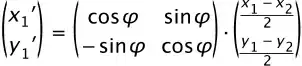

Here's a blur with k_size = 5

Here's a blur with k_size = 25

Note those are the BLURS, not the fixes. You'll likely need to adjust orig_img_multiplier and blur_subtraction_factor based on the frequency (I can't remember exactly how, so I can't really tell you how it's done). Don't hesitate to fiddle with gs_border, gamma, and anything else you find in the documentation for the methods I've shown.
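Since so much of this comes down to fiddling, a small grid search can try every combination for you. This is a generic sketch: `sweep`, `process`, and `score` are hypothetical names. `process` would be your blur/sharpen/threshold step, and `score` something like a function that runs OCR and returns a confidence. Nothing here is an OpenCV API.

```python
import itertools


def sweep(image, process, score, grid):
    """Try every parameter combination in grid; return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        s = score(process(image, **params))
        if best is None or s > best[0]:  # keep the first combination on ties
            best = (s, params)
    return best


# Toy usage: the "image" is a number, and the score rewards results close to 40.
best = sweep(0, lambda img, a, b: img + a * b, lambda out: -abs(out - 40),
             {"a": [1, 2], "b": [10, 20]})
# best -> (0, {"a": 2, "b": 20})
```

For the real thing, the grid might be something like `{"k_size": [0, 5, 25], "gs_border": [1, 3, 5]}`, keeping in mind that the number of runs is the product of the list lengths.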

Good luck with it.

By the way, "frequency" in the strict sense is defined through the 2-D Fourier transform (usually computed with the Fast Fourier Transform), and what a filter does to it depends on the kernel. I've just messed around with this stuff myself (definitely not an expert, and definitely happy if someone wants to give more details), but I hope I've given a basic idea. Adding some jitter (up-and-down or side-to-side blurring, rather than radius-based) might be helpful as well.
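To make the Fourier view concrete, here is a numpy sketch of an ideal low-pass filter. It takes the 2-D FFT, zeroes every frequency farther than `keep_radius` from the center (DC) term, and transforms back. `fft_lowpass` and `keep_radius` are my own illustrative names. A hard cutoff like this can cause ringing near edges, which is one reason a Gaussian is the more common choice.

```python
import numpy as np


def fft_lowpass(gray, keep_radius):
    """Ideal low-pass filter: keep only frequencies within keep_radius of DC."""
    # Forward 2-D FFT, shifted so the zero-frequency (DC) term sits at the center.
    spectrum = np.fft.fftshift(np.fft.fft2(gray.astype(np.float64)))
    h, w = gray.shape
    yy, xx = np.ogrid[:h, :w]
    dist = np.hypot(yy - h // 2, xx - w // 2)  # distance of each bin from DC
    spectrum[dist > keep_radius] = 0           # discard high frequencies
    # Undo the shift, invert, and return to 8-bit pixel values.
    out = np.fft.ifft2(np.fft.ifftshift(spectrum)).real
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# A 16x16 checkerboard is pure high frequency: low-passing it leaves only its mean.
checker = np.where(np.indices((16, 16)).sum(axis=0) % 2, 250, 50).astype(np.uint8)
smoothed = fft_lowpass(checker, keep_radius=2)  # every pixel becomes 150
```

For the side-to-side idea, cv2.GaussianBlur also accepts a non-square ksize, given as (width, height). For example, (9, 1) blurs only horizontally.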