In an attempt to set up Airflow logging to localstack S3 buckets for local and Kubernetes dev environments, I am following the Airflow documentation for logging to S3. For context, localstack is a local AWS cloud stack that runs AWS services, including S3, locally.

I added the following environment variables to my Airflow containers, similar to this other Stack Overflow post, in an attempt to log to my local S3 buckets. This is what I added to docker-compose.yaml for all Airflow containers:

- AIRFLOW__CORE__REMOTE_LOGGING=True

- AIRFLOW__CORE__REMOTE_BASE_LOG_FOLDER=s3://local-airflow-logs

- AIRFLOW__CORE__REMOTE_LOG_CONN_ID=MyS3Conn

- AIRFLOW__CORE__ENCRYPT_S3_LOGS=False

I've also added my localstack S3 credentials to airflow.cfg:

[MyS3Conn]

aws_access_key_id = foo

aws_secret_access_key = bar

aws_default_region = us-east-1

host = http://localstack:4572 # s3 port. not sure if this is right place for it

Additionally, I've installed apache-airflow[hooks] and apache-airflow[s3], though it's not clear from the documentation which one is actually needed.

I've followed the steps in a previous Stack Overflow post in an attempt to verify whether the S3Hook can write to my localstack S3 instance:

from airflow.hooks import S3Hook

s3 = S3Hook(aws_conn_id='MyS3Conn')

s3.load_string('test','test',bucket_name='local-airflow-logs')

But I get botocore.exceptions.NoCredentialsError: Unable to locate credentials.

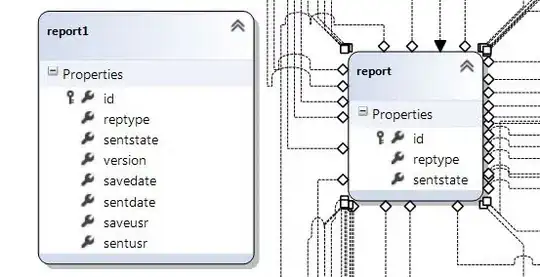

After adding credentials to airflow console under /admin/connection/edit as depicted:

this is the new exception,

this is the new exception, botocore.exceptions.ClientError: An error occurred (InvalidAccessKeyId) when calling the PutObject operation: The AWS Access Key Id you provided does not exist in our records. is returned. Other people have encountered this same issue and it may have been related to networking.

Regardless, a programmatic setup is needed, not a manual one.

I was able to access the bucket using a standalone Python script (entering AWS credentials explicitly with boto), but it needs to work as part of airflow.
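A minimal sketch of that kind of standalone check, assuming boto3 (rather than the older boto package) and the same dummy credentials, endpoint, and bucket name used above. The helper names are hypothetical and only there for illustration:

```python
def localstack_client_kwargs(endpoint_url="http://localstack:4572"):
    """Build keyword arguments for boto3.client("s3", ...) against localstack.

    The credentials are the dummy values from the question; localstack
    does not validate them.
    """
    return {
        "endpoint_url": endpoint_url,        # localstack S3 endpoint instead of AWS
        "aws_access_key_id": "foo",
        "aws_secret_access_key": "bar",
        "region_name": "us-east-1",
    }


def write_test_object(bucket="local-airflow-logs", key="test"):
    """Write a small object to the bucket and return the keys it now contains."""
    import boto3  # imported lazily so the helper above works without boto3

    s3 = boto3.client("s3", **localstack_client_kwargs())
    s3.put_object(Bucket=bucket, Key=key, Body=b"test")
    listing = s3.list_objects_v2(Bucket=bucket)
    return [obj["Key"] for obj in listing.get("Contents", [])]


if __name__ == "__main__":
    # Requires a reachable localstack container and an existing bucket.
    print(write_test_object())
```

The point of the sketch is that passing endpoint_url explicitly is what makes the standalone script reach localstack, which is exactly the setting I cannot find a way to pass through to S3Hook.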

Is there a proper way to set up host / port / credentials for S3Hook by adding MyS3Conn to airflow.cfg?

Based on the airflow s3 hooks source code, it seems a custom s3 URL may not yet be supported by airflow. However, based on the airflow aws_hook source code (parent) it seems it should be possible to set the endpoint_url including port, and it should be read from airflow.cfg.
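One programmatic option that might fit here is defining the connection through an AIRFLOW_CONN_<CONN_ID> environment variable in URI form instead of airflow.cfg. The sketch below only builds and round-trips such a URI with the standard library. It assumes that the installed Airflow version turns URI query parameters into connection extras and that the AWS hook reads the endpoint from an extra named host; both are assumptions I have not verified, and build_conn_uri is a hypothetical helper:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, quote


def build_conn_uri(access_key, secret_key, extras):
    """Return an s3:// connection URI with extras encoded as query parameters."""
    credentials = "{}:{}".format(quote(access_key, safe=""), quote(secret_key, safe=""))
    return "s3://{}@/?{}".format(credentials, urlencode(extras))


# Values taken from the question; "host" as the endpoint key is an assumption.
uri = build_conn_uri("foo", "bar", {"host": "http://localstack:4572"})

# Airflow looks up AIRFLOW_CONN_<conn id, upper-cased> for connection URIs.
env_line = "AIRFLOW_CONN_MYS3CONN={}".format(uri)
print(env_line)

# Round-trip check: the credentials and the endpoint survive URL encoding.
parts = urlsplit(uri)
extras = dict(parse_qsl(parts.query))
print(parts.username, parts.password, extras["host"])
```

If this works, the printed line could go into docker-compose.yaml next to the other AIRFLOW__CORE__ variables, which would keep the setup fully automated.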

I am able to inspect and write to my S3 bucket in localstack using boto alone. Also, curl http://localstack:4572/local-mochi-airflow-logs returns the contents of the bucket from the Airflow container. However, aws --endpoint-url=http://localhost:4572 s3 ls returns Could not connect to the endpoint URL: "http://localhost:4572/".

What other steps might be needed to log to localstack S3 buckets from Airflow running in Docker with an automated setup, and is this even supported yet?