I have a dataset that has one row per member and per transaction, and there are different stores the purchase could have came from 'brand_id'. I want to use featuretools to make output that would have one row per member, with an aggregate of 'revenue' by each brand id.

What I want:

import featuretools as ft

import pandas as pd

df = pd.DataFrame({'member_id': [1,1,1,1,2,2,3,4,4,4,4,5,5,5],

'transaction_id': [1,2,3,4,5,6,7,8,9,10,11,12,13,14],

'brand_id': ['A','A','B','B','B','B','A','B','A','B','B','A','B','A'],

'revenue': [32,124,54,12,512,51,12,4,12,412,512,14,89,12]

})

df2 = df.groupby(

['member_id',

'brand_id']

).agg({

'transaction_id': 'count',

'revenue' :['sum', 'mean']}

).reset_index()

df2.columns = ['member_id', 'brand_id', 'transactions', 'revenue_sum', 'revenue_mean']

df2 = df2.pivot(index='member_id',

columns='brand_id',

values=['transactions',

'revenue_sum',

'revenue_mean']

).fillna(0

).reset_index()

groups = ['A', 'B']

df2.columns = ['memberid'] + \

[x + '_transactions_count' for x in groups] + \

[x + '_revenue_sum' for x in groups] + \

[x + '_revenue_mean' for x in groups]

Here is what the output looks like:

Here is my attempt using feature tools, but no matter what I have tried I cannot get it to create new variables broken out by each unique value of 'brand_id'.

es = ft.EntitySet(id = 'my_set')

es.entity_from_dataframe(entity_id='members',

index='member_id',

dataframe = pd.DataFrame({'member_id':[1,2,3,4,5]})

)

es.entity_from_dataframe(entity_id='trans',

index='transaction_id',

variable_types = {'brand_id': ft.variable_types.Id},

dataframe=df.copy()

)

# create the relationship

r_member_accrual = ft.Relationship(es['members']['member_id'],

es['trans']['member_id'])

# add the relationship to the entity set

es = es.add_relationship(r_member_accrual)

fm, fl = ft.dfs(target_entity='members',

entityset=es,

agg_primitives=['sum','mean','count'],

groupby_trans_primitives=["cum_sum"],

primitive_options={

'cum_sum': {

'ignore_groupby_variables': {'trans':['member_id']}

}

}

)

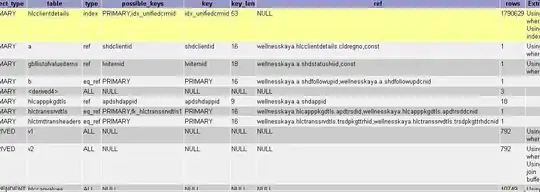

Here is the result that I get, which is not what I am going for.