I created a tf.estimator model and a tf.data pipeline in Python and saved the model in the SavedModel format (tf.saved_model) with TF 2.1. tfjs-node is unable to load the model because it does not support int64 or float64 types.

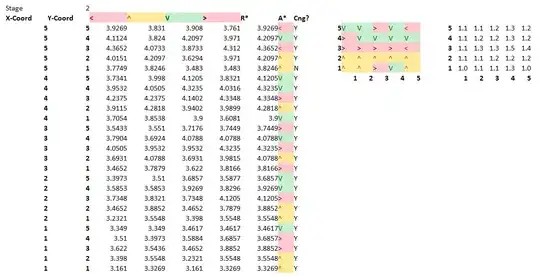

In TensorBoard, I observed that some Python variables in the input pipeline, for example `batch_size` and `epochs` in the screenshot above, are automatically declared as 64-bit types.

How can I avoid this problem and load the tf.estimator model in tfjs-node without conversion?

To reproduce:

- Run the Node.js application hosted at https://repl.it/@NitinPasumarthy/BinaryClassificationTFJSNode

- The Python code that created the models is at https://colab.research.google.com/drive/1-EKGUQGKlfm-ok2TqQtHhvbbuUGEIHxr#scrollTo=c05P9g5WjizZ

- TensorBoard.dev link for debugging: https://tensorboard.dev/experiment/vMzgbGzzRo6xFV3aYcPovQ/#scalars