This is the original form of the data:

number category location

created_on

2018-03-27 20:48:00 WEB34041280c PFAST United States of America

2018-03-22 15:25:00 WEB34011829c SHAREPOINT United States of America

2018-03-22 20:39:00 AMR34013866c SHAREPOINT United States of America

2018-03-26 21:50:00 WEB34032848c GRAPHPAD PRISM United States of America

2018-04-01 13:27:00 AMR34063922c EXPENSE United States of America

... ... ... ...

2018-08-12 12:11:00 MEX29239028i NETWORK PRINTER Republic of Colombia

2018-08-17 12:15:00 MEX29266828i NETWORK PRINTER Republic of Colombia

2018-08-30 14:08:00 MEX29331773i NETWORK PRINTER Republic of Colombia

2018-09-05 13:53:00 MEX29358175i NETWORK PRINTER Republic of Colombia

2018-09-11 21:44:00 MEX29388987i LOCAL PRINTER Argentine Republic

480616 rows × 3 columns

I've grouped it into 4-Hourly buckets to create a count of tickets based on category of tickets and the location for which they occur

grouped = df.groupby(['location', 'category', pd.Grouper(freq='4H')])['number'].count()

converting the Series object to dataframe:

grouped_df = grouped.to_frame()

number

location category created_on

Arab Republic of Egypt AC ADAPTER 2018-04-03 12:00:00 1

2018-04-29 08:00:00 2

2018-05-27 08:00:00 2

2018-05-30 08:00:00 2

2018-06-10 08:00:00 1

... ... ... ...

United States of America YAMMER 2018-07-25 16:00:00 1

2018-07-31 12:00:00 1

2018-08-03 16:00:00 1

2018-09-11 20:00:00 1

2018-09-21 12:00:00 1

245659 rows × 1 columns

In order to check for spike in ticket count (and predict /forecast the same) I have considered the counts being > zscore

import scipy

from scipy.stats import zscore

grouped_outl_df = grouped_df.loc[grouped_df.groupby('location')['number'].transform(zscore) > 1]

number

location category created_on

Arab Republic of Egypt ACCESS 2018-06-25 08:00:00 6

ACCOUNT 2018-04-24 12:00:00 3

2018-05-31 12:00:00 3

2018-06-27 08:00:00 3

2018-06-28 08:00:00 4

... ... ... ...

United States of America WINDOWS 10 2018-09-25 16:00:00 15

2018-09-25 20:00:00 8

2018-09-26 12:00:00 11

2018-09-26 16:00:00 11

2018-09-27 16:00:00 11

19331 rows × 1 columns

1) My first issue begins now, I'm unable to plot the data using pivot techniques.

also when trying to use ARIMA model as shown below

from statsmodels.tsa.stattools import adfuller

def test_stationarity(timeseries):

#perform dickey fuller test

print("Results of dickey fuller test")

adft = adfuller(timeseries['number'],autolag='AIC')

#output for dft will give us without defining what the values are.

#hence we manually write what values does it explain using a for loop

output = pd.Series(adft[0:4],index=['Test Statistics','p-value','No. of lags used','Number of

observations used'])

for key,values in adft[4].items():

output['critical value (%s)'%key] = values

print(output)

test_stationarity(grouped_outl_df)

Results of dickey fuller test

Test Statistics -8.530215e+00

p-value 1.042587e-13

No. of lags used 4.200000e+01

Number of observations used 1.928800e+04

critical value (1%) -3.430689e+00

critical value (5%) -2.861690e+00

critical value (10%) -2.566850e+00

dtype: float64

From the p-value above its evident that our time series is Stationary

2) My 2nd issue begins when I'm unable to find the parameters of the ARIMA model (p, d, q) using the ACF and PACF plots

from scipy.optimize import minimize

import statsmodels.tsa.api as smt

import statsmodels.api as sm

from tqdm import tqdm_notebook

from itertools import product

def tsplot(y, lags=None, figsize=(12, 7), syle='bmh'):

if not isinstance(y, pd.Series):

y = pd.Series(y)

with plt.style.context(style='bmh'):

fig = plt.figure(figsize=figsize)

layout = (2,2)

ts_ax = plt.subplot2grid(layout, (0,0), colspan=2)

acf_ax = plt.subplot2grid(layout, (1,0))

pacf_ax = plt.subplot2grid(layout, (1,1))

y.plot(ax=ts_ax)

p_value = sm.tsa.stattools.adfuller(y)[1]

ts_ax.set_title('Time Series Analysis Plots\n Dickey-Fuller: p={0:.5f}'.format(p_value))

smt.graphics.plot_acf(y, lags=lags, ax=acf_ax)

smt.graphics.plot_pacf(y, lags=lags, ax=pacf_ax)

plt.tight_layout()

tsplot(grouped_outl_df.number, lags=10)

from the above plots I'm unable to decide what values I need to choose for ARIMA parameters p, d, q.

from statsmodels.tsa.arima_model import ARIMA

model = ARIMA(grouped_outl_df, order=(10,0,1))

result_AR = model.fit(disp = 0)

grouped_outl_df.number = grouped_outl_df.number.astype('float64')

result_AR.fittedvalues = result_AR.fittedvalues.astype('float64')

#plt.plot(grouped_outl_df)

#plt.plot(result_AR.fittedvalues, color='red')

#plt.title("sum of squares of residuals")

print('RSS : %f' %sum((result_AR.fittedvalues - grouped_outl_df["number"])**2))

I'm trying to use varies random values of p, d, q in order to minimize RSS value but I'm unable to do so and its time consuming and like a hit and trial method which I don't want to do

after minimizing the RSS value above I'm trying to forecast future datapoints, I need to forecast the ticket spike for the next 48 hours (2 days)

3) my third issue is that I'm not sure what values should I supply to the plot_predict and forecast functions in order to get a proper interpret-able plot to indicate the ticket count and the category and location where the next ticket spike will be.

result_AR.plot_predict(1,10)

x=result_AR.forecast(steps=10)

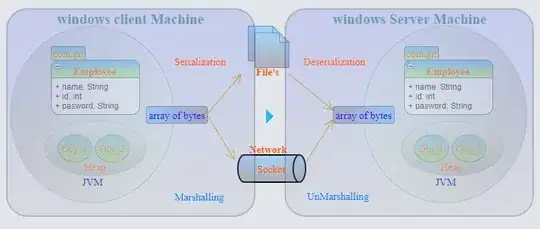

I get a plot something like this:

whereas I want a plot something like this:

the above plot is from another example though.

I will be very glad if someone can help me out with the issues that I'm facing.