I am trying to write a custom loss in TensorFlow v2. For simplicity, let's say that I'm using mean squared error (MSE) loss, as follows:

    import tensorflow as tf

    loss_object = tf.keras.losses.MeanSquaredError()

    def loss(model, x, y, training):
        # training=training is needed only if there are layers with different
        # behavior during training versus inference (e.g. Dropout).
        y_ = model(x, training=training)
        return loss_object(y_true=y, y_pred=y_)

Now, I know that TensorFlow does automatic differentiation.

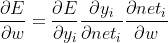
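For readers who cannot see the image, a minimal sketch of the usual automatic differentiation step with `tf.GradientTape` might look like the following. The toy `model`, the random `x` and `y`, and the helper name `grad` are assumptions made only for illustration; they are not taken from the original setup.

```python
import tensorflow as tf

loss_object = tf.keras.losses.MeanSquaredError()

# Hypothetical toy model and data, used only for illustration.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(1),
])
x = tf.random.normal((4, 3))
y = tf.random.normal((4, 1))

def grad(model, x, y):
    # Record the forward pass so TensorFlow can differentiate it automatically.
    with tf.GradientTape() as tape:
        y_ = model(x, training=True)
        loss_value = loss_object(y_true=y, y_pred=y_)
    # Gradients of the loss with respect to every trainable variable.
    return loss_value, tape.gradient(loss_value, model.trainable_variables)

loss_value, grads = grad(model, x, y)
```

Here `grads` holds one tensor per trainable variable (the `Dense` kernel and bias), all computed by TensorFlow without any hand-written derivative.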

But I want to specify my own custom gradient. In the backpropagation algorithm, if we use MSE, we have to do the following:

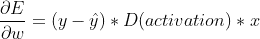

Is it possible in Keras to replace  with  where p is a tensor that is passed during training before applying gradients?
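To make the question concrete, here is a rough sketch of the kind of override in question, written with `tf.custom_gradient`. The exact formulas are not reproduced here, so the sketch assumes, purely for illustration, that the standard MSE gradient with respect to the prediction is multiplied elementwise by `p`. The function name `weighted_mse` and the sample values are hypothetical.

```python
import tensorflow as tf

@tf.custom_gradient
def weighted_mse(y_true, y_pred, p):
    # Forward pass: ordinary mean squared error over all elements.
    diff = y_pred - y_true
    loss_value = tf.reduce_mean(tf.square(diff))

    def grad_fn(upstream):
        # Standard MSE gradient with respect to y_pred: 2 * diff / N.
        n = tf.cast(tf.size(diff), diff.dtype)
        base = 2.0 * diff / n
        # ASSUMPTION (illustrative only): scale that gradient elementwise by p.
        custom = upstream * p * base
        # One gradient per input, in order: y_true, y_pred, p.
        # y_true and p are treated as constants here, so they get zeros.
        return tf.zeros_like(y_true), custom, tf.zeros_like(p)

    return loss_value, grad_fn

# Hypothetical sample values.
y_true = tf.constant([[1.0], [2.0]])
y_pred = tf.constant([[1.5], [1.0]])
p = tf.constant([[1.0], [0.0]])

with tf.GradientTape() as tape:
    tape.watch(y_pred)
    loss_value = weighted_mse(y_true, y_pred, p)

# This is the custom gradient, not the one autodiff would have produced.
grad_y_pred = tape.gradient(loss_value, y_pred)
```

The forward value is still the plain MSE; only the backward pass is replaced. Whether this fits a full Keras training loop depends on how `p` is supplied at each step, which this sketch does not address.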