The Scientific Question:

I have lots of 3D volumes, each containing a cylinder orientated 'upright' along the z axis. The volumes are extremely noisy, so noisy that a human cannot see the cylinder in any single one. If I average thousands of these volumes together, I can see the cylinder. Each volume should contain a copy of the cylinder, but in a few cases the cylinder may not be orientated correctly, so I want a way of identifying those volumes.
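A synthetic stand-in can make the averaging argument concrete. The sketch below builds a hypothetical test cylinder (`make_cylinder`, with made-up size, radius, height and noise level, none of which come from the real data), adds independent Gaussian noise to many copies, and checks that the residual noise in the average falls roughly as 1/sqrt(N). That scaling is why the cylinder only becomes visible after averaging many volumes.

```python
import numpy as np

def make_cylinder(size=32, radius=6, half_height=10):
    # Hypothetical test object: solid cylinder with its axis along array axis 0 (z)
    z, y, x = np.indices((size, size, size))
    c = (size - 1) / 2
    disc = (y - c) ** 2 + (x - c) ** 2 <= radius ** 2
    slab = np.abs(z - c) <= half_height
    return (disc & slab).astype(float)

rng = np.random.default_rng(0)
cylinder = make_cylinder()
n_copies, sigma = 100, 5.0  # assumed values: noise std is 5x the cylinder intensity

# Every copy is the same cylinder plus independent Gaussian noise
stack = cylinder + rng.normal(0.0, sigma, size=(n_copies,) + cylinder.shape)

# Residual noise in one copy versus in the average of all copies
single_noise = np.std(stack[0] - cylinder)
average_noise = np.std(stack.mean(axis=0) - cylinder)
print(single_noise, average_noise)  # close to sigma and sigma / sqrt(n_copies)
```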

The Solution I have come up with:

I have taken the averaged volume and projected it down the z and x axes (just summing the numpy array along an axis), so that I get a nice circle in one direction and a rectangle in the other. I then take each 3D volume and project every single one down the z axis. The SNR is still so bad that I cannot see a circle in any single projection, but if I average the 2D projections I begin to see a circle after a few hundred, and it is easy to see once the first 1000 are averaged. To score how well each volume matches, I figured I could calculate the MSE of each 3D volume projected down z against three other arrays: the average projected down z, the average projected down y or x, and an array filled with normally distributed noise.
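To see the two reference views on clean data, the sketch below projects a hypothetical noise-free cylinder (the same made-up `make_cylinder` helper and dimensions as a stand-in for the real average) with `np.sum`, treating axis 0 as z as the code below does. Summing down axis 0 gives a filled disc; summing down axis 1 gives a rectangle.

```python
import numpy as np

def make_cylinder(size=32, radius=6, half_height=10):
    # Hypothetical test object: solid cylinder with its axis along array axis 0 (z)
    z, y, x = np.indices((size, size, size))
    c = (size - 1) / 2
    disc = (y - c) ** 2 + (x - c) ** 2 <= radius ** 2
    slab = np.abs(z - c) <= half_height
    return (disc & slab).astype(float)

cylinder = make_cylinder()

down_z = cylinder.sum(axis=0)  # view along the cylinder axis: a filled disc, shape (y, x)
side = cylinder.sum(axis=1)    # view from the side: a rectangle, shape (z, x)

# Every disc pixel sums the full cylinder height, so down_z holds only 0 and the height
print(np.unique(down_z))  # only 0.0 and 20.0 with the default parameters

# Bounding box of the non-zero region of the side view
rows = np.flatnonzero(side.any(axis=1))
cols = np.flatnonzero(side.any(axis=0))
print(rows.size, cols.size)  # 20 and 12 with the default parameters
```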

Currently I have the following, where RawParticle is the 3D data and Ave is the average:

```python
import numpy as np

def normalise(array):
    # Min-max scale the array into [0, 1]; lo/hi avoid shadowing the built-in min/max
    lo = np.amin(array)
    hi = np.amax(array)
    return (array - lo) / (hi - lo)

def Noise(mag):
    # 200x200x200 volume of Gaussian noise with mean 0 and standard deviation mag
    return np.random.normal(0, mag, size=(200, 200, 200))

# 3D volume (normally I use a for loop to iterate through all particles, but for this example I'm just showing one)
RawParticleProjected = np.sum(RawParticle, 0)  # project down z (axis 0)
RawParticleProjectedNorm = normalise(RawParticleProjected)

# Average
AveProjected = np.sum(Ave, 0)
AveProjectedNorm = normalise(AveProjected)

# Noise array, projected down axis 0 as well so it is 2D like the other two arrays
NoiseArray = Noise(0.5)
NoiseNorm = normalise(np.sum(NoiseArray, 0))

# Mean squared error
MSE = np.mean(np.square(RawParticleProjectedNorm - AveProjectedNorm))
```

I then repeat this with Ave summed down axis 1, and once more comparing the raw particle against the noise array.
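The three comparisons can be collected in one loop. This is a sketch assuming every particle and the average share one cubic shape and that axis 0 is z; `score_particles` and `mse` are hypothetical helper names. The shape check in `mse` is there because comparing a 200x200 projection directly against the 200x200x200 output of `Noise` would not raise an error: NumPy broadcasting silently compares the projection against every slice of the noise volume.

```python
import numpy as np

def normalise(array):
    # Min-max scaling to [0, 1], as in the question
    lo, hi = array.min(), array.max()
    return (array - lo) / (hi - lo)

def mse(a, b):
    # Refuse to broadcast: mismatched shapes would otherwise give a meaningless number
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    return np.mean((a - b) ** 2)

def score_particles(particles, ave, noise_sigma=0.5, rng=None):
    # Hypothetical helper: one row per particle with the MSE of its
    # z-projection against the (circle, rectangle, noise) references
    rng = np.random.default_rng() if rng is None else rng
    circle = normalise(ave.sum(axis=0))
    rectangle = normalise(ave.sum(axis=1))
    noise = normalise(rng.normal(0.0, noise_sigma, size=ave.shape).sum(axis=0))
    rows = []
    for particle in particles:
        proj = normalise(particle.sum(axis=0))
        rows.append([mse(proj, circle), mse(proj, rectangle), mse(proj, noise)])
    return np.array(rows)

# Usage with random stand-in data (real particles would be loaded instead)
rng = np.random.default_rng(0)
particles = rng.normal(size=(5, 40, 40, 40))
ave = particles.mean(axis=0)
scores = score_particles(particles, ave, rng=rng)
print(scores.shape)  # (5, 3)
```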

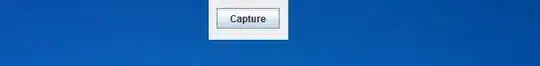

However my output from this gives highest MSE when I am comparing the projections that should both be circles as shown below:

My understanding of MSE is that the two mismatched comparisons (against the rectangle and against noise) should give a high MSE, and the comparison that should agree (circle against circle) should give a low MSE. Perhaps my data is too noisy for this type of analysis? If that is true, though, I don't really know how else to do what I am trying to do.
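One way to see what MSE is measuring: it rewards matching intensity levels, not matching shape. The identity mean((a - b)^2) = mean(a - b)^2 + var(a - b) splits it into a mean-offset term and a fluctuation term. After min-max normalisation a very noisy image has values bunched around the middle of [0, 1], while a clean reference is mostly near 0 or 1, so the offset term can dominate. The sketch below uses made-up 2D references (disc radius, rectangle size and noise levels are all assumptions) and compares MSE with a different score, normalised cross-correlation (Pearson correlation at zero shift, equivalent to `np.corrcoef` on the flattened arrays), which ignores offset and scale. With these settings the disc-containing image should get its lowest MSE against pure noise, while the correlation should rank the circle first.

```python
import numpy as np

def normalise(array):
    # Min-max scaling to [0, 1], as in the question
    lo, hi = array.min(), array.max()
    return (array - lo) / (hi - lo)

def mse_parts(a, b):
    # mean((a - b)**2) == mean(a - b)**2 + var(a - b)  (np.var uses ddof=0)
    d = a - b
    return np.mean(d ** 2), np.mean(d) ** 2, np.var(d)

def ncc(a, b):
    # Pearson correlation: both arrays shifted to mean 0 and scaled to std 1 first
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    return np.mean(a * b)

# Hypothetical 2D references: a disc and a rectangle of made-up size
size = 64
y, x = np.indices((size, size))
c = (size - 1) / 2
circle = ((y - c) ** 2 + (x - c) ** 2 <= 15 ** 2).astype(float)
rectangle = ((np.abs(x - c) <= 15) & (np.abs(y - c) <= 25)).astype(float)

rng = np.random.default_rng(1)
noisy = normalise(circle + rng.normal(0.0, 1.5, size=circle.shape))  # really contains the disc
noise_ref = normalise(rng.normal(0.0, 1.0, size=circle.shape))       # pure noise reference

for name, ref in [("circle", circle), ("rectangle", rectangle), ("noise", noise_ref)]:
    total, offset, fluct = mse_parts(noisy, ref)
    print(f"{name:9s} MSE={total:.3f} (offset {offset:.3f} + fluct {fluct:.3f})  NCC={ncc(noisy, ref):.3f}")
```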

If anyone could glance at my code or improve my understanding of MSE, I would be super appreciative.

Thank you for taking the time to look and read.