Problem Statement

I've read a partitioned CSV file into a Spark DataFrame.

To take advantage of Delta tables, I'm trying to simply write it out in Delta format to a directory in Azure Data Lake Storage Gen2. I'm using the code below in a Databricks notebook:

%scala

df_nyc_taxi.write.partitionBy("year", "month").format("delta").save("/mnt/delta/")

The whole DataFrame is around 160 GB.

Hardware Specs

I'm running this code on a cluster with 12 cores and 42 GB of RAM.

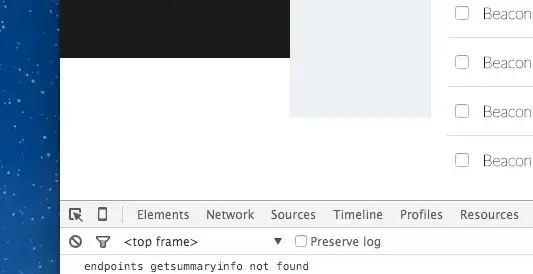

However, it looks like the whole write is being handled by Spark/Databricks sequentially, i.e. in a non-parallel fashion:

The DAG visualization looks like the following:

All in all, it looks like this will take 1-2 hours to execute.
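One quick way to check whether the write can run in parallel at all is to compare the number of partitions in the DataFrame with the number of task slots on the cluster. The sketch below is only a diagnostic under stated assumptions: `describeWriteParallelism` is a hypothetical helper, and it assumes there is no shuffle between reading the CSV and writing, so the write stage runs one task per input partition.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical helper (not part of Spark or Delta): returns
// (partitions in df, cluster default parallelism).
// Assumes no shuffle before the write, so the write stage gets one task per partition of df.
def describeWriteParallelism(spark: SparkSession, df: DataFrame): (Int, Int) = {
  val inputPartitions = df.rdd.getNumPartitions            // number of tasks the write stage would run
  val clusterSlots = spark.sparkContext.defaultParallelism // roughly the total executor cores
  (inputPartitions, clusterSlots)
}
```

In the notebook this would be called as `describeWriteParallelism(spark, df_nyc_taxi)`. If the first number is 1 or much smaller than the second, the write has few tasks to spread across the 12 cores. One possible cause is a compressed input such as gzip, which Spark cannot split.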

Questions

- Is there a way to make Spark actually write to different partitions in parallel?

- Could the problem be that I'm writing the Delta table directly to Azure Data Lake Storage?