I’ve tried your suggestion. It does not work reliably and there are memory access artifacts.

First, the code:

Input thread:

```cpp
cudaStream_t m_stream;
cudaEvent_t m_streamEvent;

cudaStreamCreateWithFlags(&m_stream, cudaStreamNonBlocking);
cudaEventCreate(&m_streamEvent);

int bytesPerPixel = 2;
int bytes = width * height * bytesPerPixel;

while(!stop)
{
    // Read from file to m_imageData

    // Next buffer is pinned memory allocated with af::pinned
    nextBuffer = getWriteBuffer();

    // Queue the host-to-device copy of one frame on this thread's stream
    cudaMemcpyAsync(nextBuffer, m_imageData.data() + m_imageOffset, bytes, cudaMemcpyHostToDevice, m_stream);

    // Record an event after the copy, then make the same stream wait on it
    cudaEventRecord(m_streamEvent, m_stream);
    cudaStreamWaitEvent(m_stream, m_streamEvent, 0);

    // Advance to the next frame, wrapping around the file data
    m_imageOffset = (m_imageOffset + bytes) % m_imageData.size();
}
```
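A note on the offset update in the input loop, assuming `m_imageData.size()` counts bytes (for example a byte container): `% m_imageData.size()` only keeps every copy in bounds when the file data holds a whole number of frames. With 100-byte frames and 250 bytes of data, the offsets run 0, 100, 200, 50, and so on, and the copy at offset 200 reads 50 bytes past the end. A minimal sketch of a wrap that always leaves room for a full frame, using a hypothetical helper `nextFrameOffset` that is not part of the code above:

```cpp
#include <cstddef>

// Hypothetical helper: returns the offset of the next frame to read from a
// looping buffer of `totalBytes`, wrapping to 0 whenever a full frame of
// `frameBytes` would no longer fit. Assumes totalBytes >= frameBytes.
std::size_t nextFrameOffset(std::size_t offset, std::size_t frameBytes, std::size_t totalBytes) {
    std::size_t next = offset + frameBytes;
    return (next + frameBytes <= totalBytes) ? next : 0;
}
```

In the loop this would take the place of the modulo line, as `m_imageOffset = nextFrameOffset(m_imageOffset, bytes, m_imageData.size());`, at the cost of skipping any trailing partial frame.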

Processor thread:

Tiles x frames (one batch) from the ring buffer and pushes the result to a queue.

Output thread:

```cpp
cudaStream_t m_stream;
cudaEvent_t m_streamEvent;

cudaStreamCreateWithFlags(&m_stream, cudaStreamNonBlocking);
cudaEventCreate(&m_streamEvent);

int rgbaBufferSize = width * 4;
auto m_rgbaPinnedBuffer = af::pinned<quint8>(rgbaBufferSize);

while(!stop)
{
    rgba = m_queue.dequeue();

    // Get the device pointer of the ArrayFire array (this locks its memory)
    rgbaAfBuffer = rgba.device<quint8>();

    // Queue the device-to-host copy on this thread's stream
    cudaMemcpyAsync(m_rgbaPinnedBuffer, rgbaAfBuffer, rgbaBufferSize, cudaMemcpyDeviceToHost, m_stream);

    // Record an event after the copy, then make the same stream wait on it
    cudaEventRecord(m_streamEvent, m_stream);
    cudaStreamWaitEvent(m_stream, m_streamEvent, 0);

    // Hand the memory back to ArrayFire
    rgba.unlock();

    // Do something with m_rgbaPinnedBuffer
}
```

When I run the application under NVIDIA's profiler, I can see the ArrayFire stream and the output thread's stream with its device-to-host memcpys. I cannot see the input thread's stream with its host-to-device memcpys, and I don't know why, since both the stream and event creation report success.

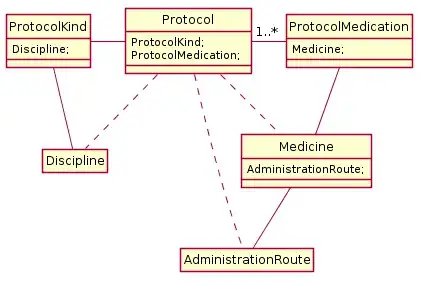

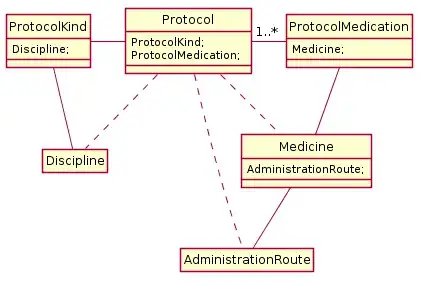

When using af::host, there is no memory access issue, and I can see the cudaMemcpyAsync happening in the default stream. The output RGBA looks like this:

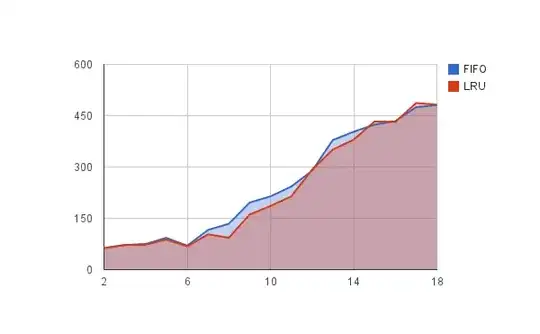

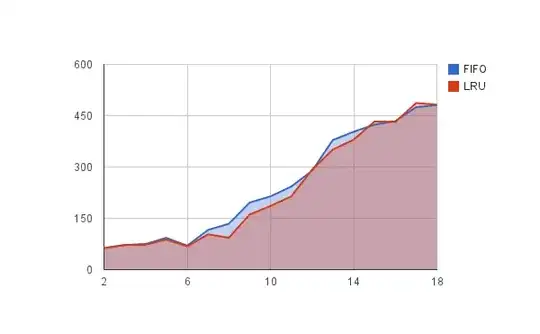

When using cudaMemcpyAsync, I can see the copies in the stream timeline, but sometimes memory is repeated. This happens more often when I increase the batch size, or when I move other application windows quickly and they steal GPU time. See the output RGBA.

Have you bumped into this kind of issue?