I'm using puckel's Airflow Docker image (github link) with docker-compose-LocalExecutor. The project is deployed through CI/CD to an EC2 instance, so my Airflow doesn't run on a persistent server (on every push to master it gets launched afresh). I know I'm losing some great features, but in my setup everything is configured by bash script and/or environment variables. My setup is similar to the one in this answer: Similar setup answer

I'm running on version 1.10.6, so the old method of adding config/__init__.py and config/log_class.py is not needed anymore.

Changes I made on the original repository code:

I added some environment variables and changed the build mode in the `docker-compose-LocalExecutor` compose file (the one passed to `docker-compose -f`) to write/save logs on S3 and build from the local Dockerfile:

    webserver:
        build: .
        environment:
            - AIRFLOW__CORE__REMOTE_LOGGING=True
            - AIRFLOW__CORE__REMOTE_LOG_CONN_ID=aws_default
            - AIRFLOW__CORE__REMOTE_BASE_LOG_FOLDER=s3://path/to/logs
            - AIRFLOW_CONN_AWS_DEFAULT=s3://key:password

I changed line 59 of the `Dockerfile` to install the s3 plugin, as shown below:

    && pip install apache-airflow[s3,crypto,celery,password,postgres,hive,jdbc,mysql,ssh${AIRFLOW_DEPS:+,}${AIRFLOW_DEPS}]==${AIRFLOW_VERSION} \
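For anyone not familiar with the naming scheme of the environment variables above: as far as I understand it, Airflow reads a variable named `AIRFLOW__{SECTION}__{KEY}` as an override for `key` in `[section]` of `airflow.cfg`, while the single-underscore `AIRFLOW_CONN_{CONN_ID}` form defines a connection instead. The sketch below only illustrates that mapping; it is not Airflow's own code, and the helper name `airflow_env_overrides` is made up for this example.

```python
import os


def airflow_env_overrides(environ=None):
    """Hypothetical helper: map AIRFLOW__SECTION__KEY variables to (section, key)."""
    environ = os.environ if environ is None else environ
    overrides = {}
    for name, value in environ.items():
        # Split on the double underscore: AIRFLOW / SECTION / KEY
        parts = name.split("__", 2)
        if len(parts) == 3 and parts[0] == "AIRFLOW" and parts[1] and parts[2]:
            overrides[(parts[1].lower(), parts[2].lower())] = value
    return overrides


# The variables from the compose file in the question.
example_env = {
    "AIRFLOW__CORE__REMOTE_LOGGING": "True",
    "AIRFLOW__CORE__REMOTE_LOG_CONN_ID": "aws_default",
    "AIRFLOW__CORE__REMOTE_BASE_LOG_FOLDER": "s3://path/to/logs",
    # Single underscores: a connection definition, not a config override.
    "AIRFLOW_CONN_AWS_DEFAULT": "s3://key:password",
}

overrides = airflow_env_overrides(example_env)
for (section, key), value in sorted(overrides.items()):
    print(f"[{section}] {key} = {value}")
```

So the three `AIRFLOW__CORE__...` variables end up as `[core]` settings, and `AIRFLOW_CONN_AWS_DEFAULT` is skipped by the helper because it is the connection that `remote_log_conn_id=aws_default` points at.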

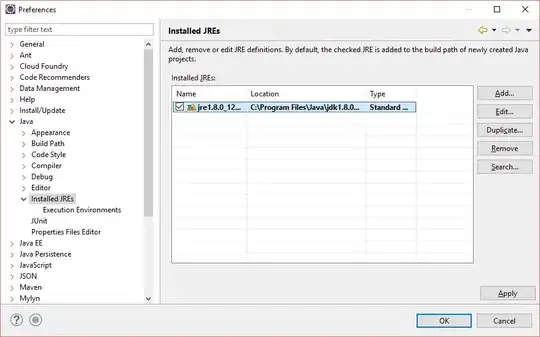

These settings work fine: the logs are written to S3 and read back from it successfully, as the screenshot shows.

My problem is:

If I run `docker-compose down` and then `docker-compose up`, the UI looks as if the DAGs have never run before (the UI won't load the DAGs' remote logs):