It is hard to help without knowing which parameters you can change. For example, can you keep your camera fixed? Will the images always be of tubes? What about the tubes' colors?

Nevertheless, I think what you are looking for is an image registration framework, and I suggest you use SimpleElastix. It is mainly used for medical images, so you might have to get familiar with the SimpleITK library. What's interesting is that it gives you a lot of parameters to control the registration. You will probably have to look into the documentation to find out how to control a specific image frequency, the one that creates the waves and deforms the images. In the code below I did not configure it to allow enough local distortion; you'll have to find the best trade-off, but I think it should be flexible enough.

Anyway, you can get such a result with the following code. I don't know if it helps, but I hope so:

    import cv2
    import numpy as np
    import SimpleITK as sitk

    # Read both images as 32-bit float images.
    fixedImage = sitk.ReadImage('1.jpg', sitk.sitkFloat32)
    movingImage = sitk.ReadImage('2.jpg', sitk.sitkFloat32)

    elastixImageFilter = sitk.ElastixImageFilter()

    # Stage 1: affine registration, starting from the default parameter map.
    affine_registration_parameters = sitk.GetDefaultParameterMap('affine')
    affine_registration_parameters["NumberOfResolutions"] = ['6']
    affine_registration_parameters["WriteResultImage"] = ['false']
    affine_registration_parameters["MaximumNumberOfSamplingAttempts"] = ['4']

    # Stage 2: default B-spline (non-rigid) registration, run after the affine stage.
    parameterMapVector = sitk.VectorOfParameterMap()
    parameterMapVector.append(affine_registration_parameters)
    parameterMapVector.append(sitk.GetDefaultParameterMap("bspline"))

    elastixImageFilter.SetFixedImage(fixedImage)
    elastixImageFilter.SetMovingImage(movingImage)
    elastixImageFilter.SetParameterMap(parameterMapVector)
    elastixImageFilter.Execute()

    registeredImage = elastixImageFilter.GetResultImage()
    transformParameterMap = elastixImageFilter.GetTransformParameterMap()

    # Absolute difference between the registered image and the fixed image.
    resultImage = sitk.Subtract(registeredImage, fixedImage)
    resultImageNp = np.abs(sitk.GetArrayFromImage(resultImage))

    # Save the inputs, the registered image, and the difference image.
    cv2.imwrite('gray_1.png', sitk.GetArrayFromImage(fixedImage))
    cv2.imwrite('gray_2.png', sitk.GetArrayFromImage(movingImage))
    cv2.imwrite('gray_2r.png', sitk.GetArrayFromImage(registeredImage))
    cv2.imwrite('gray_diff.png', resultImageNp)
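If the default B-spline stage does not allow enough local distortion, one place to start is the control-point grid spacing: a finer grid lets the transform bend more locally. The helper below is only a sketch. The function name and the values 8.0 and 512 are my own illustrative assumptions, not tuned settings; `FinalGridSpacingInPhysicalUnits` and `MaximumNumberOfIterations` are elastix parameter names, and the values are stored as lists of strings just like in the code above.

```python
def allow_more_local_deformation(parameter_map, grid_spacing=8.0, iterations=512):
    """Tweak a B-spline parameter map in place and return it.

    parameter_map: any dict-like object mapping parameter names to lists of
    strings (a plain dict here; a SimpleElastix parameter map is assumed to
    accept the same item assignment, as in the code above).
    grid_spacing and iterations are illustrative values, not recommendations.
    """
    # Smaller spacing between B-spline control points = more local freedom.
    parameter_map["FinalGridSpacingInPhysicalUnits"] = [str(grid_spacing)]
    # A finer grid has more parameters, so give the optimizer more iterations.
    parameter_map["MaximumNumberOfIterations"] = [str(iterations)]
    return parameter_map


# Stand-alone demo with a plain dict, so it runs without SimpleElastix.
demo_map = allow_more_local_deformation({"Transform": ["BSplineTransform"]})
print(demo_map)

# Hypothetical usage in the pipeline above (requires SimpleElastix):
#   bspline = allow_more_local_deformation(sitk.GetDefaultParameterMap("bspline"))
#   parameterMapVector.append(bspline)
```

A grid that is too fine can make the registration warp away the very differences you want to detect, so this is the trade-off to experiment with.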

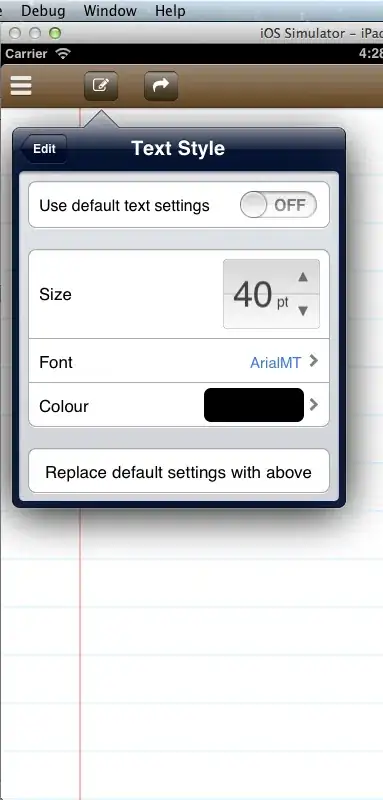

Your first image resized to 256x256:

Your second image:

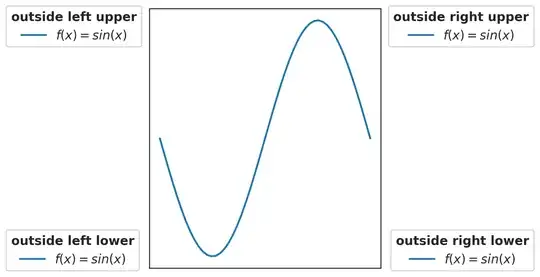

Your second image registered with the first one:

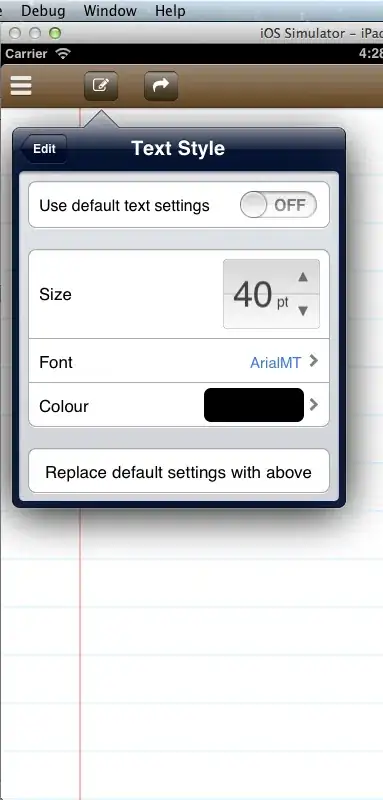

Here is the difference between the first image and the registered second image, which could show what's different:
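To turn such a difference image into something you can act on, you could clip it to 8 bits and threshold it into a change mask. This is a minimal NumPy sketch, not part of the registration itself: the function name and the threshold of 30 gray levels are assumptions for illustration, and the two arrays stand in for `sitk.GetArrayFromImage(registeredImage)` and `sitk.GetArrayFromImage(fixedImage)`.

```python
import numpy as np


def difference_mask(registered, fixed, threshold=30.0):
    """Return (abs_diff_uint8, mask) for two same-shaped grayscale arrays.

    threshold is a hypothetical cut-off in gray levels; pick it from your data.
    """
    diff = np.abs(registered.astype(np.float32) - fixed.astype(np.float32))
    # Clip to the 8-bit range so the result can be saved as an ordinary PNG.
    diff_u8 = np.clip(diff, 0, 255).astype(np.uint8)
    # Pixels whose absolute difference exceeds the threshold count as changed.
    mask = diff > threshold
    return diff_u8, mask


# Tiny synthetic example: one pixel differs between the two images.
fixed = np.zeros((4, 4), dtype=np.float32)
registered = fixed.copy()
registered[1, 2] = 200.0

diff_u8, mask = difference_mask(registered, fixed)
print("changed pixels:", int(mask.sum()))
```

Small residual misalignments also show up in the mask, so the threshold interacts with how well the registration was tuned.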