I have a Google Home Mini and I'm trying to use it as a speech-to-text device. My plan is to have the device listen to what is said and publish that input to an MQTT broker, so that my application can subscribe to it.

I have found this, which returns the input as text, but all it gives me is the certainty that I can get this data. I have little to no clue how to make it publish this data as an MQTT message.

I also found this, but I can't make it work, because it states "There’s a very easy way to recognize custom phrases in Google Assistant,[...] I won’t cover it here". Even Google's own instructions (open "Create an Applet") seem to be outdated with respect to IFTTT, because the steps can't be followed in IFTTT's current interface.

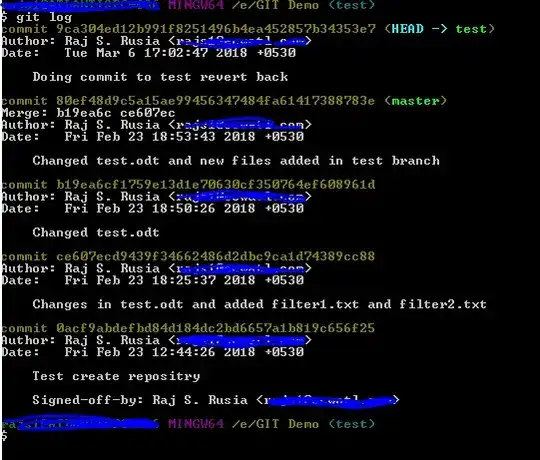

Here is a quick sketch of the architecture:

There are 5 arrows. The first one is, obviously, a physical process. The "Audio" and "Text" arrows are handled automatically by the hardware. The right-hand "MQTT Message" arrow is already working. So what I need help with is the "MQTT Message" arrow from "Google Home" to "MQTT Broker".
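To make the missing arrow concrete, here is a minimal Python sketch of what that step would have to do, assuming the recognized text is somehow available as a plain string (getting it out of the Google Home is exactly the part I can't do). The topic `home/speech/text`, the JSON payload shape, and the helper names `build_message`, `publish_text`, and `paho_sender` are all made up for illustration. The only library call is `paho.mqtt.publish.single` from the `paho-mqtt` package, which is one possible client, not a requirement.

```python
import json
from typing import Callable, Tuple

# Hypothetical topic name; any topic my application subscribes to would do.
DEFAULT_TOPIC = "home/speech/text"


def build_message(text: str, topic: str = DEFAULT_TOPIC) -> Tuple[str, str]:
    """Wrap the recognized text in a small JSON payload for the given topic."""
    payload = json.dumps({"text": text.strip()})
    return topic, payload


def publish_text(text: str,
                 send: Callable[[str, str], None],
                 topic: str = DEFAULT_TOPIC) -> None:
    """Build the message and hand it to whatever actually talks to the broker."""
    msg_topic, payload = build_message(text, topic)
    send(msg_topic, payload)


def paho_sender(host: str = "localhost", port: int = 1883) -> Callable[[str, str], None]:
    """Return a send function backed by paho-mqtt (assumed to be installed)."""
    # Imported lazily so the helpers above work without paho-mqtt present.
    import paho.mqtt.publish as publish

    def send(topic: str, payload: str) -> None:
        # One-shot publish: connect, send a single message, disconnect.
        publish.single(topic, payload, qos=1, hostname=host, port=port)

    return send
```

With a broker on `localhost`, calling `publish_text("turn on the lights", paho_sender("localhost"))` would publish one message. The open question is still what would run this on the Google Home side and call it with the recognized text.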

Thanks in advance.