Actually, without using shutil, I can compress files in Databricks dbfs into a zip file that is written directly as a blob to an Azure Blob Storage container mounted to dbfs.

Here is my sample code, which uses only the Python standard libraries `os` and `zipfile`.

    # Mount a container of Azure Blob Storage to dbfs
    storage_account_name = '<your storage account name>'
    storage_account_access_key = '<your storage account key>'
    container_name = '<your container name>'
    dbutils.fs.mount(
        source = "wasbs://"+container_name+"@"+storage_account_name+".blob.core.windows.net",
        mount_point = "/mnt/<a mount directory name under /mnt, such as `test`>",
        extra_configs = {"fs.azure.account.key."+storage_account_name+".blob.core.windows.net":storage_account_access_key})
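
The string concatenation in the mount call is easy to get wrong, so here is a minimal sketch of a helper that builds the three arguments. The function name `wasbs_mount_args` and its `mount_name` parameter are my own, hypothetical names, not part of any Databricks API; only the `source`, `mount_point` and `extra_configs` keywords come from the call above.

```python
def wasbs_mount_args(storage_account_name, container_name, access_key, mount_name):
    """Build the keyword arguments used by the mount call above.

    Hypothetical helper: it only formats strings and does not mount anything.
    """
    host = f"{storage_account_name}.blob.core.windows.net"
    return {
        "source": f"wasbs://{container_name}@{host}",
        "mount_point": f"/mnt/{mount_name}",
        "extra_configs": {f"fs.azure.account.key.{host}": access_key},
    }


# In a Databricks notebook (where `dbutils` is defined) this could be used as:
# dbutils.fs.mount(**wasbs_mount_args("<account>", "<container>", "<key>", "test"))
```

Keeping the formatting in one place means the account host name is written once instead of twice.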

    # List all files which need to be compressed
    import os
    modelPath = '/dbfs/mnt/databricks/Models/predictBaseTerm/noNormalizationCode/2020-01-10-13-43/9_0.8147903598547376'
    filenames = [os.path.join(root, name) for root, dirs, files in os.walk(top=modelPath, topdown=False) for name in files]
    # print(filenames)

    # Directly zip files to Azure Blob Storage as a blob
    # zipPath is the absolute path of the compressed file on the mount point, such as `/dbfs/mnt/test/demo.zip`
    zipPath = '/dbfs/mnt/<a mount directory name under /mnt, such as `test`>/demo.zip'
    import zipfile
    with zipfile.ZipFile(zipPath, 'w') as myzip:
        for filename in filenames:
            # print(filename)
            myzip.write(filename)
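
Two details of the snippet above may be worth adjusting. `zipfile.ZipFile(path, 'w')` defaults to `ZIP_STORED`, so files are archived without compression, and `myzip.write(filename)` stores each entry under its full path (for example `dbfs/mnt/...`). The following is a minimal sketch of a variant that compresses with `ZIP_DEFLATED` and stores paths relative to the source directory. The function name `zip_directory` is my own, and it assumes the zip file is written outside the directory being zipped.

```python
import os
import zipfile


def zip_directory(src_dir, zip_path):
    """Zip every file under src_dir into zip_path, using paths relative to src_dir.

    Assumes zip_path is not located inside src_dir.
    Returns the list of relative paths passed to the archive.
    """
    written = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(src_dir):
            for name in sorted(files):
                full_path = os.path.join(root, name)
                # Store the entry relative to src_dir instead of its full path.
                arcname = os.path.relpath(full_path, start=src_dir)
                archive.write(full_path, arcname=arcname)
                written.append(arcname)
    return written


# Example call with the variables from the sample code above:
# zip_directory(modelPath, zipPath)
```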

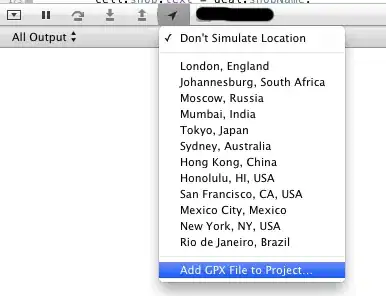

I tried to mount my test container to dbfs and run my sample code, then I got the demo.zip file which contains all files in my test container, as the figure below.
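
The result can also be checked from the notebook itself rather than by downloading the blob. This is a minimal sketch using only `zipfile`; the function name `summarize_zip` is my own, and it assumes the archive is readable through the mount path.

```python
import zipfile


def summarize_zip(zip_path):
    """Return (first_bad_member_or_None, [(entry_name, uncompressed_size), ...])."""
    with zipfile.ZipFile(zip_path) as archive:
        # testzip() reads every member and returns the name of the first
        # one with a bad CRC or header, or None if all members are fine.
        bad_member = archive.testzip()
        entries = [(info.filename, info.file_size) for info in archive.infolist()]
    return bad_member, entries


# Example call with the path from the sample code above:
# bad, entries = summarize_zip(zipPath)
```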