Your question and comment contradict each other: the question asks to keep everything (significantly) brighter or darker than the mean (+/- constant) of the neighbourhood, whereas the comment asks to keep everything within mean +/- constant. I assume the first one is correct, and I'll try to give an answer.

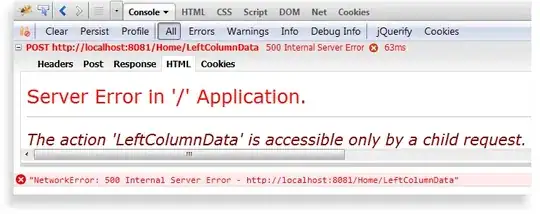

Using cv2.adaptiveThreshold is certainly useful; parameterization might be tricky, especially given the example image. First, let's have a look at the output:

We see that the intensity value range in the given image is small. The upper halves of the third and sixth squares don't really differ from their neighbourhood, so it's quite unlikely to find a proper difference there. The upper halves of squares #8 and #12 (or the lower half of square #10) are more likely to be found.

The top row shows more "global" parameters (blocksize = 151, c = 25), the bottom row more "local" parameters (blocksize = 51, c = 5). The middle column is everything darker than the neighbourhood (with respect to the parameters), the right column is everything brighter than the neighbourhood. In the more "global" case, we get the proper upper halves, but there are mostly no "significantly" darker areas. In the more "local" case, we see some darker areas, but we won't find the complete upper/lower halves in question. That's simply due to how the different triangles are arranged.

On the technical side: You need two calls of cv2.adaptiveThreshold, one using the cv2.THRESH_BINARY_INV mode to find everything darker, and one using the cv2.THRESH_BINARY mode to find everything brighter. Since the threshold is computed as the neighbourhood mean minus the given constant, you have to pass c for the darker case and -c for the brighter case.

Here's the full code:

```python
import cv2
from matplotlib import pyplot as plt
from skimage import io              # Only needed for web grabbing images

plt.figure(1, figsize=(15, 10))

# Read the example image from the question, and convert to grayscale
img = cv2.cvtColor(io.imread('https://i.stack.imgur.com/dA1Vt.png'), cv2.COLOR_RGB2GRAY)
plt.subplot(2, 3, 1), plt.imshow(img, cmap='gray'), plt.colorbar()

# More "global" parameters
bs = 151
c = 25
# Everything darker than the neighbourhood mean - c
img_le = cv2.adaptiveThreshold(img, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY_INV, bs, c)
# Everything brighter than the neighbourhood mean + c
img_gt = cv2.adaptiveThreshold(img, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, bs, -c)
plt.subplot(2, 3, 2), plt.imshow(img_le, cmap='gray')
plt.subplot(2, 3, 3), plt.imshow(img_gt, cmap='gray')

# More "local" parameters
bs = 51
c = 5
img_le = cv2.adaptiveThreshold(img, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY_INV, bs, c)
img_gt = cv2.adaptiveThreshold(img, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, bs, -c)
plt.subplot(2, 3, 5), plt.imshow(img_le, cmap='gray')
plt.subplot(2, 3, 6), plt.imshow(img_gt, cmap='gray')

plt.tight_layout()
plt.show()
```

Hope that helps – somehow!

-----------------------

System information

-----------------------

Python: 3.8.1

Matplotlib: 3.2.0rc1

OpenCV: 4.1.2

-----------------------