I'm trying to get the dimensions of a displayed image so I can draw bounding boxes over the text I have recognized using Apple's Vision framework. I run the VNRecognizeTextRequest at the press of a button with this function:

    func readImage(image: NSImage,
                   completionHandler: @escaping ([VNRecognizedText]?, Error?) -> Void,
                   comp: @escaping (Double?, Error?) -> Void) {
        var recognizedTexts = [VNRecognizedText]()
        var rr = CGRect(x: 0, y: 0, width: image.size.width, height: image.size.height)
        let requestHandler = VNImageRequestHandler(
            cgImage: image.cgImage(forProposedRect: &rr, context: nil, hints: nil)!,
            options: [:])

        let textRequest = VNRecognizeTextRequest { (request, error) in
            guard let observations = request.results as? [VNRecognizedTextObservation] else {
                completionHandler(nil, error)
                return
            }
            // Keep only the best candidate for each observation.
            for currentObservation in observations {
                let topCandidate = currentObservation.topCandidates(1)
                if let recognizedText = topCandidate.first {
                    recognizedTexts.append(recognizedText)
                }
            }
            completionHandler(recognizedTexts, nil)
        }
        textRequest.recognitionLevel = .accurate
        textRequest.recognitionLanguages = ["es"]
        textRequest.usesLanguageCorrection = true
        // Report recognition progress to the caller.
        textRequest.progressHandler = { (request, value, error) in
            comp(value, nil)
        }
        try? requestHandler.perform([textRequest])
    }

and compute the bounding-box offsets using this struct and function:

    struct DisplayingRect: Identifiable {
        var id = UUID()
        var width: CGFloat = 0
        var height: CGFloat = 0
        var xAxis: CGFloat = 0
        var yAxis: CGFloat = 0

        init(width: CGFloat, height: CGFloat, xAxis: CGFloat, yAxis: CGFloat) {
            self.width = width
            self.height = height
            self.xAxis = xAxis
            self.yAxis = yAxis
        }
    }

    func createBoundingBoxOffSet(recognizedTexts: [VNRecognizedText], image: NSImage) -> [DisplayingRect] {
        var rects = [DisplayingRect]()
        let imageSize = image.size
        // Vision returns normalized (0...1) boxes; scale them to the image size.
        let imageTransform = CGAffineTransform.identity.scaledBy(x: imageSize.width, y: imageSize.height)
        for obs in recognizedTexts {
            let observationBounds = try? obs.boundingBox(for: obs.string.startIndex..<obs.string.endIndex)
            // Skip candidates without a bounding box instead of force-unwrapping.
            guard let rectangle = observationBounds?.boundingBox.applying(imageTransform) else { continue }
            print("Rectangle: \(rectangle)")
            let width = rectangle.width
            let height = rectangle.height
            // Offsets are relative to the image center; y is flipped because Vision's origin is bottom-left.
            let xAxis = rectangle.origin.x - imageSize.width / 2 + rectangle.width / 2
            let yAxis = -(rectangle.origin.y - imageSize.height / 2 + rectangle.height / 2)
            rects.append(DisplayingRect(width: width, height: height, xAxis: xAxis, yAxis: yAxis))
        }
        return rects
    }
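To make the arithmetic above easier to check in isolation, here is a minimal sketch of the same mapping as a pure function. The name `centerOffsetRect` and its parameters are hypothetical, not part of Vision or SwiftUI; it assumes the input rect is normalized (0...1) with a bottom-left origin, and that the result is used as a center-relative offset, as in the function above.

```swift
import Foundation

/// Hypothetical helper (not a Vision or SwiftUI API): maps a normalized rect
/// (0...1, origin at bottom-left) to a size and a center-relative offset
/// for an image displayed at `displayedSize`.
func centerOffsetRect(normalized: CGRect, displayedSize: CGSize) -> (size: CGSize, offset: CGSize) {
    // Scale the normalized box to the displayed size.
    let width = normalized.size.width * displayedSize.width
    let height = normalized.size.height * displayedSize.height

    // Center of the box in displayed points, measured from the bottom-left corner.
    let midX = (normalized.origin.x + normalized.size.width / 2) * displayedSize.width
    let midY = (normalized.origin.y + normalized.size.height / 2) * displayedSize.height

    // Offset from the image center; y is negated because the view's y axis grows downward.
    let dx = midX - displayedSize.width / 2
    let dy = -(midY - displayedSize.height / 2)

    return (CGSize(width: width, height: height), CGSize(width: dx, height: dy))
}
```

The offsets depend only on the size passed in, so feeding it `image.size` only matches the screen when the image is drawn at its original size.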

I place the rects using this code in the ContentView:

    ZStack {
        Image(nsImage: self.img!)
            .scaledToFit()
        ForEach(self.rects) { rect in
            Rectangle()
                .fill(Color.init(.sRGB, red: 1, green: 0, blue: 0, opacity: 0.2))
                .frame(width: rect.width, height: rect.height)
                .offset(x: rect.xAxis, y: rect.yAxis)
        }
    }

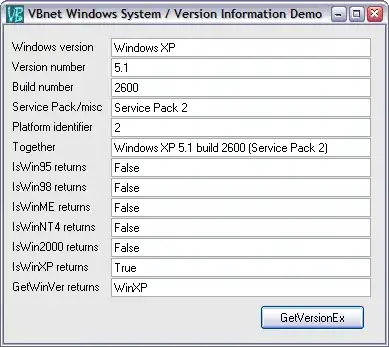

If I use the original image's dimensions I get these results.

But if I add .resizable() so the image scales to fit, the boxes no longer match the displayed image:

    Image(nsImage: self.img!)
        .resizable()
        .scaledToFit()

Is there a way to get the dimensions of the image as it is actually displayed, so that I can pass them to the bounding-box computation instead of the original size? I also need this because sometimes the whole image doesn't fit on screen and I have to scale it down.

Thanks a lot
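For the scaled case, one possible direction is to compute the aspect-fit size of the image inside its container and use that in place of the original image size. The sketch below is only an illustration under assumptions: `aspectFitSize` is a hypothetical helper, and it assumes `scaledToFit()` on a resizable image preserves the aspect ratio and fills the container along the tighter dimension.

```swift
import Foundation

/// Hypothetical helper: the size an image of `imageSize` would occupy when
/// scaled to fit inside `container` while keeping its aspect ratio.
func aspectFitSize(imageSize: CGSize, in container: CGSize) -> CGSize {
    // Avoid dividing by zero for an empty image.
    guard imageSize.width > 0, imageSize.height > 0 else { return .zero }

    // The smaller of the two ratios is the one that keeps the image inside the container.
    let scale = min(container.width / imageSize.width,
                    container.height / imageSize.height)

    return CGSize(width: imageSize.width * scale, height: imageSize.height * scale)
}
```

In SwiftUI the container size could come from a `GeometryReader` wrapped around the `ZStack` (its proxy exposes a `size`), and the resulting displayed size could then be passed to the offset computation. Whether this matches the actual layout in a given view hierarchy would need to be checked.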